In your next articles, is there any chance you will switch exclusively to Python? The number of pre-installed packages and environments will soon start to grow exponentially.

The part about stacking is interesting, thanks :)

Now and in the future, all calculations are done in R, but using Python packages (modules). There are a lot of them.

OK, I'll try to master it :)

Greetings azzeddine,

Keras is designed for humans in the sense that a minimal amount of code is needed to build structurally complex models. Of course, we can and should use these models in EAs. This is a solved and well-worked-out issue. Otherwise, why would we need these exercises?

Of course, we can build models that track and analyse the sentiment of Twitter messages or the Bloomberg news feed. This is a separate area that I have not tested, and, frankly speaking, I do not have much faith in it. But there are plenty of examples on this topic on the Internet.

You should start with something simple.

Good luck

Hello Vladimir, thank you for your articles. I am a programmer by profession, mainly .NET and C#. Based on your articles, I wrote an Expert Advisor for the MT5 terminal only. I use Keras with GPU support, which saves time and nerves when training models; R and MT5 are linked through R.Net (I wrote a DLL library and can post it on GitHub). I also tried H2O, but it works slowly and supports GPU only under Linux, which does not suit me since I work under Windows. I'm glad you have also switched to Keras in your articles. The Expert Advisor showed good results on EURUSD M15; the data sample covered 2003 to 2017 (training and testing). I used only three predictors, v.fatl, v.rbci and v.ftlm; with more predictors the results were worse. The neural network has two Dense hidden layers with 1000 neurons each (that many neurons is not necessary: I tried 10 in each hidden layer, and that also gave good results). The Expert Advisor is almost the same as yours, with small modifications: stop loss 500, take profit 100, no more than 10 open positions, and all Buy positions are closed when new Sell positions appear, and vice versa. Everything is simple; the rest of the code is like yours. Of course, all of this code is only for EURUSD; other symbols may need a different approach.
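The position-management rules listed above (stop loss 500, take profit 100, at most 10 open positions, opposite positions closed on a new signal) can be illustrated in a few lines. This is a minimal Python sketch, not Eugene's MQL5 code: it assumes SL/TP are measured in points of a 5-digit EURUSD quote (`POINT = 0.00001`), uses one price for entry and exit (no spread), and the names `Position` and `Book` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List

# Assumptions (not from the post): SL/TP are in points of a 5-digit EURUSD quote,
# and one price is used for both entry and exit (no bid/ask spread).
POINT = 0.00001
SL_POINTS = 500      # stop loss, as in the post
TP_POINTS = 100      # take profit, as in the post
MAX_POSITIONS = 10   # no more than 10 open positions, as in the post


@dataclass
class Position:
    side: str     # "buy" or "sell"
    entry: float  # entry price

    @property
    def sl(self) -> float:
        d = SL_POINTS * POINT
        return self.entry - d if self.side == "buy" else self.entry + d

    @property
    def tp(self) -> float:
        d = TP_POINTS * POINT
        return self.entry + d if self.side == "buy" else self.entry - d


@dataclass
class Book:
    """Hypothetical container for the open positions of one symbol."""
    positions: List[Position] = field(default_factory=list)

    def on_signal(self, side: str, price: float) -> None:
        # A new signal closes every open position in the opposite direction...
        self.positions = [p for p in self.positions if p.side == side]
        # ...and opens a new one only while the cap is not reached.
        if len(self.positions) < MAX_POSITIONS:
            self.positions.append(Position(side, price))

    def on_price(self, price: float) -> None:
        # Close positions whose stop loss or take profit has been reached;
        # touching either level counts as a hit.
        def still_open(p: Position) -> bool:
            low, high = (p.sl, p.tp) if p.side == "buy" else (p.tp, p.sl)
            return low < price < high
        self.positions = [p for p in self.positions if still_open(p)]


book = Book()
for _ in range(12):
    book.on_signal("buy", 1.10000)
print(len(book.positions))        # capped at MAX_POSITIONS
book.on_signal("sell", 1.10050)   # closes all buys, opens one sell
print([p.side for p in book.positions])
book.on_price(1.09940)            # below the sell's take profit level
print(len(book.positions))
```

Keeping the signal handling and the SL/TP check in separate methods mirrors how an EA reacts to two different events: a new model prediction and a new price tick.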

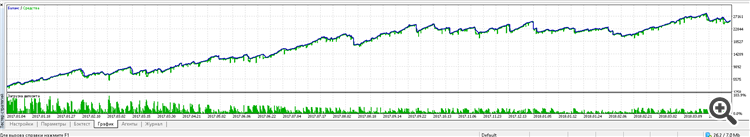

Here is the result of the work of the Expert Advisor from 2017 to now:

I have been running the Expert Advisor on a cent account for 2 weeks; so far it is in profit. A lot of time went into searching for a neural network model and testing it: I tried different Dense, LSTM and CONV networks and settled on a simple Dense one. The same goes for selecting predictors: I tried your DigFiltr plus all kinds of indicators, and your predictors alone, without any additional indicators, showed the best result. I think this is not the limit and a much better EA can be built; next I will try to apply the ensembles from your last 2 articles. I am looking forward to your next publications and, if you like, I will keep you informed of my progress.

We will build neural networks using the keras/TensorFlow package from Python.

What's wrong with R?

- keras: R Interface to 'Keras' (cran.r-project.org)
- kerasR: R Interface to the Keras Deep Learning Library (cran.r-project.org)

Good day, Eugene.

I am glad that my articles are helpful to you. A few questions:

1. As far as I understand, you use keras for R? Do you write your scripts in R or in Python?

2. Did the gateway library we use not work for you? See here.

3. Please post your version of the library and an example script. It would be interesting to look at, try and compare.

4. Of course, I, and I think many other enthusiasts, will be very interested in following your experiments.

5. I have long been thinking of opening a separate RUSERGroop thread where specific questions about the language and models could be discussed without flooding. Dreams...

The appearance of an API to Python (reticulate) opens almost unlimited possibilities for building systems of any complexity. In the near future, I plan to work with reinforcement learning in tandem with neural networks.

Write about your successes, ask questions.

Good luck

Greetings CC.

That's what I use: keras for R. I tried kerasR, but since the TensorFlow backend is developing very fast (already at version 1.8), it is more reliable to use the package developed and maintained by the RStudio team.

Good luck

New article Deep Neural Networks (Part VII). Ensemble of neural networks: stacking has been published:

We continue to build ensembles. This time, the bagging ensemble created earlier will be supplemented with a trainable combiner — a deep neural network. One neural network combines the 7 best ensemble outputs after pruning. The second one takes all 500 outputs of the ensemble as input, prunes and combines them. The neural networks will be built using the keras/TensorFlow package for Python. The features of the package will be briefly considered. Testing will be performed and the classification quality of bagging and stacking ensembles will be compared.
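The stacking idea described above (prune the ensemble to its best members, then train a combiner on their outputs) can be illustrated without keras. This is a minimal sketch under stated assumptions: it swaps the article's DNN combiner for a plain logistic-regression combiner trained by gradient descent, uses "rank base models by individual accuracy" as a simple pruning rule, and feeds it synthetic base-model outputs. All names and data here are hypothetical.

```python
import math
import random
from typing import List, Sequence


def accuracy(probs: Sequence[float], labels: Sequence[int]) -> float:
    """Share of samples where thresholding the probability at 0.5 matches the label."""
    hits = sum(int(p >= 0.5) == y for p, y in zip(probs, labels))
    return hits / len(labels)


def prune(outputs: List[List[float]], labels: Sequence[int], k: int) -> List[int]:
    """Indices of the k base models with the highest individual accuracy."""
    order = sorted(range(len(outputs)),
                   key=lambda i: accuracy(outputs[i], labels), reverse=True)
    return order[:k]


def combine(w: Sequence[float], row: Sequence[float]) -> float:
    """Combiner output: sigmoid of the weighted sum of base-model outputs plus bias."""
    z = w[-1] + sum(w[j] * row[j] for j in range(len(row)))
    return 1.0 / (1.0 + math.exp(-z))


def train_combiner(x: List[List[float]], y: Sequence[int],
                   lr: float = 0.5, epochs: int = 1000) -> List[float]:
    """Logistic-regression combiner fitted by batch gradient descent.

    Returns one weight per input column plus a trailing bias term.
    """
    n, m = len(x), len(x[0])
    w = [0.0] * (m + 1)
    for _ in range(epochs):
        grad = [0.0] * (m + 1)
        for row, target in zip(x, y):
            err = combine(w, row) - target  # derivative of log-loss w.r.t. z
            for j in range(m):
                grad[j] += err * row[j]
            grad[m] += err
        w = [wj - lr * g / n for wj, g in zip(w, grad)]
    return w


# Synthetic base models: label plus Gaussian noise, clipped to [0, 1];
# a larger noise level means a weaker model.
rng = random.Random(0)
labels = [rng.randint(0, 1) for _ in range(200)]
noise_levels = [0.3, 0.4, 0.5, 1.5, 2.0, 3.0]
outputs = [[min(1.0, max(0.0, t + rng.gauss(0.0, s))) for t in labels]
           for s in noise_levels]

keep = prune(outputs, labels, k=3)
features = [[outputs[i][n] for i in keep] for n in range(len(labels))]
w = train_combiner(features, labels)
stacked = [combine(w, row) for row in features]
print("kept base models:", keep)
print("best single accuracy:", max(accuracy(o, labels) for o in outputs))
print("stacked accuracy (training data):", accuracy(stacked, labels))
```

For brevity the demo scores the combiner on the same data it was fitted on; in a real stacking setup the combiner is trained on base-model outputs for data the base models did not see, so that it does not learn their overfitting.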

Let us plot the history of training:

Fig. 11. The history of the DNN500 neural network training

To improve the classification quality, numerous hyperparameters can be modified: the neuron initialization method, regularization of neuron activations and weights, etc. The results obtained with almost intuitively selected parameters are promising, but they hit a disappointing ceiling: without optimization, it was not possible to raise Accuracy above 0.82. Conclusion: the hyperparameters of the neural network need to be optimized. In the previous articles, we experimented with Bayesian optimization. It can be applied here as well, but it is a separate and difficult topic.
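Bayesian optimization is beyond a short example, but the basic loop of hyperparameter search can be shown with plain random search, a simpler technique swapped in here: sample a configuration, score it, keep the best. In this sketch the search space, the parameter names and `toy_objective` are all hypothetical; in real use the objective would train the network with the given parameters and return its validation Accuracy.

```python
import math
import random
from typing import Any, Callable, Dict, Optional, Tuple

Params = Dict[str, Any]

# Hypothetical search space covering the kinds of hyperparameters named above;
# the names and ranges are illustrative, not taken from the article.
UNITS = [10, 20, 50, 100]                  # neurons per hidden layer
INITS = ["glorot_uniform", "he_normal"]    # neuron initialization method
LOG10_LR = (-4.0, -1.0)                    # learning rate, sampled log-uniformly
L2 = (0.0, 1e-3)                           # L2 penalty on the weights


def sample(rng: random.Random) -> Params:
    """Draw one random configuration from the search space."""
    return {
        "units": rng.choice(UNITS),
        "init": rng.choice(INITS),
        "lr": 10 ** rng.uniform(*LOG10_LR),
        "l2": rng.uniform(*L2),
    }


def random_search(objective: Callable[[Params], float], n_trials: int,
                  seed: int = 0) -> Tuple[Optional[Params], float]:
    """Evaluate n_trials random configurations and keep the best-scoring one."""
    rng = random.Random(seed)
    best, best_score = None, -math.inf
    for _ in range(n_trials):
        params = sample(rng)
        score = objective(params)
        if score > best_score:
            best, best_score = params, score
    return best, best_score


def toy_objective(p: Params) -> float:
    """Placeholder for 'train the network with p and return validation Accuracy'.

    A made-up smooth function that prefers lr near 1e-2 and 50 units.
    """
    return -abs(math.log10(p["lr"]) + 2.0) - abs(p["units"] - 50) / 100 - p["l2"]


best, score = random_search(toy_objective, n_trials=50)
print(best, round(score, 3))
```

Bayesian optimization keeps the same outer loop but replaces `sample` with a proposal step guided by a surrogate model fitted to the scores seen so far, which usually needs fewer expensive training runs.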

Author: Vladimir Perervenko