Building Multi-Head Self-Attention in Python

We have already implemented the Multi-Head Self-Attention algorithm in MQL5 and even added multi-threaded calculations using OpenCL. Now let's look at how such an algorithm can be implemented in Python using the Keras library for TensorFlow. We already used this library when building the previous models. However, up to this point we have used only the pre-built neural layers offered by the library, and with their help we constructed linear models, in which each layer receives the output of the previous one.

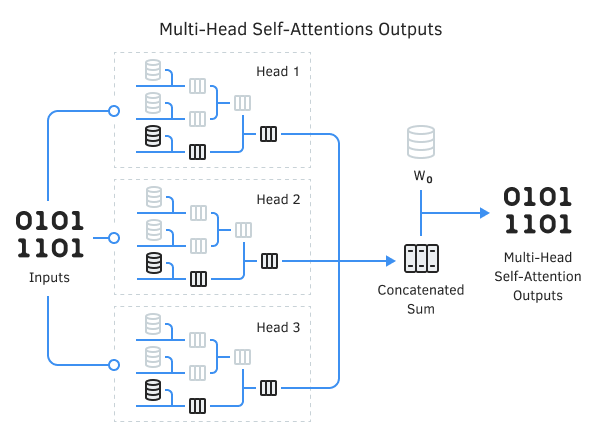

The Multi-Head Self-Attention model cannot be called linear. The parallel operation of several attention heads by itself already breaks the linearity of the model. Moreover, within the Self-Attention algorithm itself, the source data simultaneously goes in four directions: into the computation of Queries, Keys, and Values, and into the residual connection.
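To make the four directions concrete, here is a minimal single-head sketch in TensorFlow. It assumes the four directions are the Query, Key, and Value projections plus the residual connection; the function name self_attention_block and its weight arguments are hypothetical, and the sketch leaves out the multi-head split, normalization, and everything else a complete layer would need. A multi-head version would run several such computations in parallel, each with its own weights.

```python
import tensorflow as tf


def self_attention_block(x, w_q, w_k, w_v):
    """Hypothetical single-head Self-Attention step with a residual connection.

    x has shape (sequence_length, features); w_q, w_k and w_v are
    (features, features) projection matrices.
    """
    # Directions 1-3: the same source data feeds the Query, Key and Value projections
    q = tf.matmul(x, w_q)
    k = tf.matmul(x, w_k)
    v = tf.matmul(x, w_v)
    # Scaled dot-product scores, normalized row by row with softmax
    d_k = tf.cast(tf.shape(k)[-1], x.dtype)
    scores = tf.nn.softmax(tf.matmul(q, k, transpose_b=True) / tf.sqrt(d_k), axis=-1)
    attention = tf.matmul(scores, v)
    # Direction 4: the source data is added back through the residual connection
    return x + attention


x = tf.constant([[1.0, 2.0], [3.0, 4.0]])
# Zero Query/Key weights give uniform scores; identity Value weights pass x through
out = self_attention_block(x, tf.zeros((2, 2)), tf.zeros((2, 2)), tf.eye(2))
print(out.numpy().tolist())  # [[3.0, 5.0], [5.0, 7.0]]
```

With uniform scores, each row of the attention output is the mean of the rows of x, which the residual connection then adds to the original data.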

Therefore, to build a Multi-Head Self-Attention model, we will use another capability of this library: creating custom neural layers.

A layer is a callable object that takes one or more tensors as input and outputs one or more tensors. It combines computation (the feed-forward logic) and state (the layer's weights).

All neural layers in the Keras library are classes derived from the tf.keras.layers.Layer base class. Therefore, a new custom neural layer must also inherit from this base class.
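A minimal sketch of such a subclass follows. The class ScaleLayer and its factor argument are hypothetical; the layer has no weights and only shows the inheritance pattern: forward the standard keyword arguments to the base class and put the computation in the call method.

```python
import tensorflow as tf


class ScaleLayer(tf.keras.layers.Layer):
    """Hypothetical weightless layer that multiplies its input by a constant."""

    def __init__(self, factor=2.0, **kwargs):
        # Pass standard arguments (name, dtype, trainable, ...) to the base class
        super().__init__(**kwargs)
        self.factor = factor

    def call(self, inputs):
        # Feed-forward pass: element-wise scaling
        return inputs * self.factor


layer = ScaleLayer(factor=3.0, name="scale")
result = layer(tf.constant([[1.0, 2.0]]))
print(result.numpy().tolist())  # [[3.0, 6.0]]
```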

The base class constructor accepts the following parameters:

- trainable — flag that indicates whether the parameters of the neural layer should be trained

- name — layer name

- dtype — data type of the layer's computation results and weights

- dynamic — flag indicating that the layer can only be run eagerly and cannot be used to build a static computation graph

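The base class constructor has the following signature, with default values:

```python
tf.keras.layers.Layer(
    trainable=True, name=None, dtype=None, dynamic=False, **kwargs
)
```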
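The sketch below, built around a hypothetical PassThrough class, shows that a subclass only needs to forward these keyword arguments to the base constructor; the base class then exposes them as attributes. The dynamic flag is simply left at its default here.

```python
import tensorflow as tf


class PassThrough(tf.keras.layers.Layer):
    """Hypothetical identity layer used only to show base-class parameters."""

    def __init__(self, **kwargs):
        # trainable, name and dtype are handled entirely by the base class
        super().__init__(**kwargs)

    def call(self, inputs):
        return inputs


layer = PassThrough(trainable=False, name="pass_through", dtype="float64")
print(layer.name)       # pass_through
print(layer.trainable)  # False
print(layer.dtype)      # float64
```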

The library architecture also defines a minimal set of methods for each layer:

- __init__ — layer initialization method

- call — calculation method (feed-forward pass)

In the initialization method, we define the layer's custom attributes and create the weight matrices whose structure does not depend on the format and structure of the input data. In practical tasks, however, we often do not know the structure of the input data in advance, and without its dimensionality we cannot create the weight matrices. In such cases, the initialization of weight matrices and other objects is moved to the build(self, input_shape) method. The base class calls this method automatically and only once: on the first call of the layer, right before call is executed for the first time.
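Both ways of creating weights can be combined in one layer. In this sketch (the SimpleDense class and its scale weight are hypothetical), the scalar scale does not depend on the input and is created in __init__, while the matrix w needs the input width and is therefore created in build. Deterministic initializers are used so the result is easy to check.

```python
import tensorflow as tf


class SimpleDense(tf.keras.layers.Layer):
    """Hypothetical fully connected layer with deferred weight creation."""

    def __init__(self, units, **kwargs):
        super().__init__(**kwargs)
        self.units = units
        # Shape does not depend on the input, so the weight is created right away
        self.scale = self.add_weight(
            name="scale", shape=(), initializer="ones", trainable=True
        )

    def build(self, input_shape):
        # Called once, before the first call(); the input width is known here
        input_dim = input_shape[-1]
        self.w = self.add_weight(
            name="w", shape=(input_dim, self.units), initializer="ones"
        )
        self.b = self.add_weight(name="b", shape=(self.units,), initializer="zeros")

    def call(self, inputs):
        return (tf.matmul(inputs, self.w) + self.b) * self.scale


layer = SimpleDense(3)
print(len(layer.weights))  # 1: only the input-independent weight exists so far
y = layer(tf.ones((2, 4)))  # build() runs here with input_shape (2, 4)
print(len(layer.weights))  # 3
print(y.numpy().tolist())  # [[4.0, 4.0, 4.0], [4.0, 4.0, 4.0]]
```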

The call method describes the feed-forward operations to be performed on the input data and returns the results as one or more tensors. Layers used in linear models, however, are restricted to returning a single tensor.
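For example, the hypothetical SplitHalves layer below returns two tensors. It works inside a functional tf.keras.Model, where the outputs can follow separate branches and be merged again, but it does not fit tf.keras.Sequential, which expects every layer to have a single output.

```python
import tensorflow as tf


class SplitHalves(tf.keras.layers.Layer):
    """Hypothetical layer returning the left and right halves of the last axis."""

    def call(self, inputs):
        # Two output tensors instead of one
        left, right = tf.split(inputs, num_or_size_splits=2, axis=-1)
        return left, right


inputs = tf.keras.Input(shape=(4,))
left, right = SplitHalves()(inputs)
# The two branches are merged again by a standard Keras layer
outputs = tf.keras.layers.Add()([left, right])
model = tf.keras.Model(inputs, outputs)

print(model(tf.constant([[1.0, 2.0, 3.0, 4.0]])).numpy().tolist())  # [[4.0, 6.0]]
```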

Each neural layer has a number of attributes; the most commonly used ones are listed below:

- name — layer name

- dtype — data type of the layer's weights

- trainable_weights — list of trainable variables

- non_trainable_weights — list of non-trainable variables

- weights — combined list of trainable and non-trainable variables

- trainable — boolean flag that indicates whether the layer's parameters should be trained

- activity_regularizer — optional regularization function applied to the output of the neural layer
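A short sketch of these attributes, using a hypothetical CountingScale layer with one trainable weight and one non-trainable call counter. Setting trainable to False on the layer moves all of its weights into the non-trainable list.

```python
import tensorflow as tf


class CountingScale(tf.keras.layers.Layer):
    """Hypothetical layer with one trainable and one non-trainable weight."""

    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        # Trainable: updated by the optimizer during training
        self.gain = self.add_weight(name="gain", shape=(), initializer="ones")
        # Non-trainable: updated manually in call, ignored by the optimizer
        self.calls = self.add_weight(
            name="calls", shape=(), initializer="zeros", trainable=False
        )

    def call(self, inputs):
        self.calls.assign_add(1.0)
        return inputs * self.gain


layer = CountingScale(name="counting_scale")
layer(tf.ones((1, 2)))
print(len(layer.trainable_weights), len(layer.non_trainable_weights))  # 1 1
print(len(layer.weights))  # 2
print(layer.calls.numpy())  # 1.0

# Freezing the layer moves every weight into the non-trainable list
layer.trainable = False
print(len(layer.trainable_weights), len(layer.non_trainable_weights))  # 0 2
```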

The advantage of this approach is clear: we do not write backpropagation methods. The library computes the gradients automatically by differentiating the operations performed in the feed-forward pass. We only need to describe the feed-forward logic correctly in the call method.
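The following sketch illustrates this with a hypothetical ScalarGain layer that defines only call. tf.GradientTape records the feed-forward operations and computes the gradient of the loss with respect to the layer's weight without any hand-written backward method. With w = 1 and x = 3, the loss (w * x)^2 has the derivative 2 * w * x^2 = 18.

```python
import tensorflow as tf


class ScalarGain(tf.keras.layers.Layer):
    """Hypothetical layer computing y = w * x with one trainable scalar w."""

    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        self.w = self.add_weight(name="w", shape=(), initializer="ones")

    def call(self, inputs):
        # Only the feed-forward pass is written; there is no backward method
        return inputs * self.w


layer = ScalarGain()
x = tf.constant([3.0])
with tf.GradientTape() as tape:
    y = layer(x)
    loss = tf.reduce_sum(tf.square(y))  # loss = (w * x)^2

# The tape differentiates the recorded operations: d(loss)/dw = 2 * w * x^2
grads = tape.gradient(loss, layer.trainable_weights)
print(grads[0].numpy())  # 18.0
```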

This approach makes it possible to build fairly complex architectures. Moreover, a custom layer may contain other nested neural layers, and the parameters of these inner layers are included in the parameter lists of the outer layer.
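As an illustration, the hypothetical TwoBranch layer below feeds the same input into two nested Dense layers and adds their results. After the first call, the outer layer's trainable_weights list contains the kernel and bias of both inner layers, four variables in total.

```python
import tensorflow as tf


class TwoBranch(tf.keras.layers.Layer):
    """Hypothetical layer that sends its input through two nested Dense layers."""

    def __init__(self, units, **kwargs):
        super().__init__(**kwargs)
        # Sub-layers assigned as attributes are tracked by the outer layer
        self.branch_a = tf.keras.layers.Dense(units)
        self.branch_b = tf.keras.layers.Dense(units)

    def call(self, inputs):
        # The same input goes in two directions and the results are summed
        return self.branch_a(inputs) + self.branch_b(inputs)


layer = TwoBranch(units=8)
outputs = layer(tf.ones((2, 5)))  # nested Dense layers create their weights here
print(outputs.shape)                 # (2, 8)
print(len(layer.trainable_weights))  # 4: kernel and bias of each Dense layer
```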