
5.1.2.2 Self-Attention backpropagation methods

In the previous section, we discussed the feed-forward method of the Encoder block in the Transformer architecture. This block includes a Self-Attention mechanism followed by two fully connected neural layers. The distinctive feature of the Self-Attention mechanism is that it determines the dependencies between elements of a sequence. Each element of the sequence is represented as a fixed-length vector of features, and within one neural layer every sequence element is processed by the Encoder block with the same set of weights. This allowed us to reuse our previously developed convolutional layers for a number of tasks. The feed-forward pass is a crucial part of any neural network algorithm: we use it both when training our models and in practical application. However, a neural network cannot be trained without a backward pass. So now we'll look at how to organize the backward pass in our attention mechanism class.

As a reminder, we created our own class as a descendant of the neural layer base class. Several methods are responsible for organizing the backward pass:

- CNeuronBase::CalcOutputGradient: method for calculating the error gradient of the result layer.

- CNeuronBase::CalcHiddenGradient: method for calculating the error gradient through a hidden layer.

- CNeuronBase::CalcDeltaWeights: method for calculating the error gradient to the level of the weight matrix.

- CNeuronBase::UpdateWeights: method for updating weights.

All of these methods were declared virtual so that descendant classes can override them. In our class, we will override all of them except the first one.

We will work through the methods following the logic of the error backpropagation algorithm. First, we override the method that propagates the error gradient through the hidden layer, CNeuronAttention::CalcHiddenGradient. Of the three overridden methods, this one is probably the most difficult to understand and organize: it has to retrace the entire feed-forward path in reverse order, and along the way we need the derivatives of all operations used in the feed-forward pass.

In its parameters, the method receives a pointer to the previous layer object, whose gradient buffer will receive the result of the operations. In the body of the method, we first check the validity of the object pointers. Here, I decided not to check every object, but only those that are not validated inside the methods of the internal classes we call. This avoids redundant validity checks during the execution of the method.

bool CNeuronAttention::CalcHiddenGradient(CNeuronBase *prevLayer)


This is followed by the most interesting part, in which the error gradient is propagated through the feed-forward algorithm in reverse order. Let's look at the forward pass algorithm. It ends with the normalization of the results, which is carried out using the following formulas.

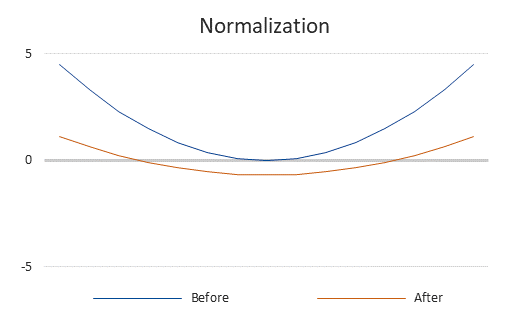

What is normalization? It is the process of changing the statistical parameters of a sample to bring them closer to specified values. Most often these are the mean and the standard deviation, as in our case: we shift the mean to zero and scale the standard deviation to one. As a result of this operation, the function graph is shifted and scaled, as shown in the figure.

Effect of normalization on the function graph
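For illustration, the normalization step described above can be sketched in C++ (chosen because its syntax is close to MQL5) for a single vector of values. This is a minimal sketch under stated assumptions: it uses the population standard deviation, adds no small epsilon term, and `NormalizeVector` is a hypothetical helper, not a method of the author's class. It returns the standard deviation because the backward pass will need it.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical helper: normalizes x in place to zero mean and unit
// (population) standard deviation and returns the standard deviation
// it divided by. No epsilon term is added (an assumption).
double NormalizeVector(std::vector<double>& x)
{
    const double n = static_cast<double>(x.size());

    double mean = 0.0;
    for (double v : x) mean += v;
    mean /= n;

    double var = 0.0;
    for (double v : x) var += (v - mean) * (v - mean);
    const double std_dev = std::sqrt(var / n);

    // Assumed guard: a constant vector cannot be scaled, so zero it out.
    if (std_dev == 0.0) {
        for (double& v : x) v = 0.0;
        return 0.0;
    }
    for (double& v : x) v = (v - mean) / std_dev;
    return std_dev;
}
```

For the input {1, 2, 3, 4}, the result has zero mean and unit population standard deviation, and the function returns the square root of 1.25.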

In essence, within the Self-Attention algorithm, data normalization plays the role of the neural layer's activation function. However, unlike a typical activation function, it does not change the shape of the data distribution; it only shifts and scales it.

We will not go into the details of calculating the derivative of this rather complex normalization function right now. We have implemented the correction of the error gradient for normalization as a separate method.

//--- adjust the gradient for normalization


Next, we call the CalcHiddenGradient methods of the internal layers of the Feed Forward block to propagate the error gradient down to the internal layer that stores the results of the attention block.

//--- propagate a gradient through the layers of the Feed Forward block


In the feed-forward method, before normalizing the results, we added the values of two buffers: the results of the Feed Forward block and the results of the Self-Attention block. Therefore, the error gradient must also be propagated along both branches of the algorithm, so we add the two gradient buffers together. To simplify access to the gradient buffer of the internal layer that stores the Self-Attention results, we create a local pointer to it.

CBufferType *attention_grad = m_cAttentionOut.GetGradients();


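Next, we adjust the summed error gradient for the normalization that was applied to the Self-Attention results in the feed-forward pass, again using the standard deviation saved during that pass.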

//--- adjust the gradient for normalization


After summing the two error gradient tensors, we need to distribute the error gradient among the internal layers m_cQuerys, m_cKeys, and m_cValues. In the feed-forward pass, we implemented the entire data flow from these neural layers to the Self-Attention results buffer ourselves, so we must also implement the corresponding backpropagation ourselves. As always, we branch the algorithm depending on the computing device. We start with the implementation using standard MQL5 tools and will come back to the multi-threaded implementation using OpenCL a little later.

//--- branching the algorithm by the computing device


At the beginning of the MQL5 block, we will create two matrices for storing intermediate data: values and gradients.

We start by passing the error gradient to the Values neural layer m_cValues. During the feed-forward pass, we multiplied the dependency coefficients of the Score matrix by the results buffer of this neural layer to obtain the results of the Self-Attention block. Now we perform the reverse operation. As mentioned earlier, the derivative of a product with respect to one factor equals the other factor. In our case, these are the coefficients of the Score matrix.

The data tensors have the following dimensions:

- The Score matrix is square with a side equal to the number of elements in the sequence.

- The buffers of the m_cValues and m_cAttentionOut neural layers have as many rows as there are elements in the sequence, and each row contains as many elements as the vector describing one sequence element.

To prevent potential mismatches in matrix sizes, we will reshape the error gradient matrix to the required format.

if(attention_grad.GetData(gradients, false) < (int)m_cOutputs.Total())


Each sequence element of m_cValues affects every element of the m_cAttentionOut sequence with the corresponding coefficient from the m_cScores matrix.

Therefore, to propagate the error gradient to the buffer of the m_cValues neural layer, we multiply the transposed m_cScores matrix of dependency coefficients by the error gradient matrix gradients.

//--- gradient propagation to Values


Next, we propagate the error gradient to m_cQuerys and m_cKeys. Both of these neural layers took part in building the m_cScores matrix of dependency coefficients, so we first need to determine the error gradient with respect to that matrix.

During the feed-forward pass, to obtain the Self-Attention result, we multiplied the m_cScores matrix by the results tensor of the m_cValues neural layer. We have already determined the error gradient for that layer. Now we propagate the error gradient along the second branch of the algorithm, to the values of the dependency coefficient matrix. To do this, we multiply the error gradient by the transposed results buffer of the m_cValues neural layer.

gradients = gradients.MatMul(values.Transpose());
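The two products described above, the gradient for the Values layer and the gradient for the dependency coefficients, can be sketched in C++ with nested vectors standing in for MQL5 matrices. Assumptions: `scores` is the N×N Softmax-normalized coefficient matrix, `v` is the N×D Values matrix, `g` is the N×D error gradient at the Self-Attention output, and all helper names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

// Plain matrix product: a (n x k) times b (k x m).
Matrix MatMul(const Matrix& a, const Matrix& b)
{
    const std::size_t n = a.size(), k = b.size(), m = b[0].size();
    Matrix c(n, std::vector<double>(m, 0.0));
    for (std::size_t i = 0; i < n; ++i)
        for (std::size_t p = 0; p < k; ++p)
            for (std::size_t j = 0; j < m; ++j)
                c[i][j] += a[i][p] * b[p][j];
    return c;
}

Matrix Transpose(const Matrix& a)
{
    Matrix t(a[0].size(), std::vector<double>(a.size()));
    for (std::size_t i = 0; i < a.size(); ++i)
        for (std::size_t j = 0; j < a[0].size(); ++j)
            t[j][i] = a[i][j];
    return t;
}

// Forward pass: Out = Scores * V. Row j of V reaches every output row i
// with weight Scores[i][j], so the gradient for V is Scores^T * G.
Matrix GradValues(const Matrix& scores, const Matrix& g)
{
    return MatMul(Transpose(scores), g);
}

// Gradient for the coefficients themselves: G * V^T.
Matrix GradScores(const Matrix& g, const Matrix& v)
{
    return MatMul(g, Transpose(v));
}
```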

Let me remind you that during the feed-forward pass, the matrix values were normalized with the Softmax function separately for each Query. The difficulty with this function and its derivative lies in the need to process the entire normalized vector at once. Unlike element-wise functions, the derivative of Softmax with respect to a vector of values is a matrix. This follows from the nature of the Softmax function itself: a change in one element of the input vector changes every element of the normalized result, because the elements of the result vector always sum to one. Therefore, to distribute the error gradient correctly, we have to work within the context of each Query.

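The mathematical formula for the derivative of the Softmax function is:

$$\frac{\partial S_i}{\partial x_j} = S_i\left(\delta_{ij} - S_j\right)$$

where $S_i$ is the i-th element of the Softmax output and $\delta_{ij}$ is the Kronecker delta (1 if i = j, otherwise 0).

We'll use its matrix representation:

$$\frac{\partial S}{\partial x} = \left(\mathbf{1}S\right)^{T} \odot \left(E - \mathbf{1}S\right)$$

where S is the row vector of Softmax outputs, $\mathbf{1}$ is a column vector of ones, $\odot$ denotes element-wise multiplication, and E is the identity matrix whose size equals the number of elements in the sequence.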

The implementation of this approach is described below. In a loop, we compute the derivative for each individual row of the dependency coefficient matrix. Multiplying the resulting matrix by the gradient vector of the corresponding row gives us a vector of corrected error gradients. Let's not forget that before normalizing the Score dependency coefficient matrix, we divided its values by the square root of the dimension of the vector describing one element of the Key tensor. Accordingly, we repeat this operation for the error gradient as well. The logic is simple: dividing by a constant is equivalent to multiplying by its reciprocal, and the derivative of a product with respect to one factor equals the other factor.

The result of the above operations will replace the analyzed row of the gradient matrix.
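A minimal C++ sketch of this row-by-row correction follows. It assumes `scores` holds the Softmax outputs (one Softmax per row, that is, per Query), `grad` holds the gradient with respect to those outputs, and `keys_size` is the dimension of one Key vector; the function name is hypothetical. Instead of building the Jacobian matrix explicitly, it evaluates each Jacobian element on the fly.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

// Replaces each row of `grad` (gradient w.r.t. the Softmax outputs) with the
// gradient w.r.t. the Softmax inputs, then applies the 1/sqrt(keys_size)
// scaling used before Softmax in the forward pass.
void SoftmaxBackwardRows(const Matrix& scores, Matrix& grad, std::size_t keys_size)
{
    const double scale = 1.0 / std::sqrt(static_cast<double>(keys_size));
    const std::size_t n = scores.size();
    for (std::size_t r = 0; r < n; ++r) {
        const std::vector<double>& s = scores[r];
        std::vector<double> out(n, 0.0);
        // Jacobian J[i][j] = s[i] * (delta_ij - s[j]); it is symmetric,
        // so J * grad[r] is the required vector-Jacobian product.
        for (std::size_t i = 0; i < n; ++i)
            for (std::size_t j = 0; j < n; ++j) {
                const double delta = (i == j) ? 1.0 : 0.0;
                out[i] += s[i] * (delta - s[j]) * grad[r][j];
            }
        // The corrected vector replaces the analyzed row.
        for (std::size_t i = 0; i < n; ++i)
            grad[r][i] = out[i] * scale;
    }
}
```

Because the Softmax outputs of a row always sum to one, a gradient that is equal for every element of the row (such as {2, 2}) is mapped to zero.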

for(int r = 0; r < m_iUnits; r++)


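After obtaining the adjusted error gradient for each individual dependency coefficient, we distribute it to the corresponding vectors of the Query and Key tensors. To do this, we multiply the matrix of adjusted coefficient gradients by the other factor of the original product: by the Key matrix to obtain the Query gradients, and, after transposing the gradient matrix, by the Query matrix to obtain the Key gradients.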
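A C++ sketch of this distribution step follows, assuming the coefficients were computed as Scores = Q·K^T and that `ds` already contains the coefficient gradients corrected for Softmax and scaling. The helper names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

Matrix MatMul(const Matrix& a, const Matrix& b)
{
    const std::size_t n = a.size(), k = b.size(), m = b[0].size();
    Matrix c(n, std::vector<double>(m, 0.0));
    for (std::size_t i = 0; i < n; ++i)
        for (std::size_t p = 0; p < k; ++p)
            for (std::size_t j = 0; j < m; ++j)
                c[i][j] += a[i][p] * b[p][j];
    return c;
}

Matrix Transpose(const Matrix& a)
{
    Matrix t(a[0].size(), std::vector<double>(a.size()));
    for (std::size_t i = 0; i < a.size(); ++i)
        for (std::size_t j = 0; j < a[0].size(); ++j)
            t[j][i] = a[i][j];
    return t;
}

// Scores[i][j] = dot(Q row i, K row j), therefore:
//   dQ = dS * K    (row i of Q meets every row of K)
//   dK = dS^T * Q  (row j of K meets every row of Q)
void GradQueryKey(const Matrix& ds, const Matrix& q, const Matrix& k,
                  Matrix& dq, Matrix& dk)
{
    dq = MatMul(ds, k);
    dk = MatMul(Transpose(ds), q);
}
```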

m_cQuerys.GetGradients().m_mMatrix =


else // OpenCL block


This completes the branching of the algorithm by computing device. For now, the OpenCL block simply returns false; we will come back to it a little later. Let's continue with our error backpropagation algorithm. After obtaining the error gradient at the outputs of the internal neural layers, we need to propagate it back to the previous layer.

As you remember, in the feed-forward pass, the source data is used in four branches of the algorithm:

- At the input of the internal m_cQuerys layer

- At the input of the internal m_cKeys layer

- At the input of the internal m_cValues layer

- Added to the output of the Self-Attention block before the layer is normalized

Therefore, we need to accumulate the error gradient from all four directions in the gradient buffer of the previous layer. The algorithm is similar to the buffer summation we previously built for the recurrent LSTM block. However, instead of creating a separate buffer for accumulation, we use an existing one. The error gradient at the output of the Self-Attention block has already been calculated in the gradient buffer of the m_cAttentionOut neural layer, and this is where we will accumulate the intermediate error gradients.

For each inner layer in turn, we call its CalcHiddenGradient method, passing it a pointer to the previous neural layer. After each successful call, we add the obtained result to the error gradient already accumulated in the gradient buffer of the m_cAttentionOut neural layer.

//--- transfer the error gradient to the previous layer


Note that in the first two cases, we wrote the sum of the two error gradient buffers into the buffer of the internal neural layer, whereas in the last case, we saved the sum into the gradient buffer of the previous neural layer. The reason is that the CalcHiddenGradient method of an internal neural layer overwrites the values in the gradient buffer of the layer passed in its parameters, so we had to accumulate intermediate gradients in a different buffer. At the end of the method, however, the error gradient must end up in the previous layer. Therefore, during the last summation, we write the sum directly into the gradient buffer of the previous layer, avoiding unnecessary data copying.
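The order of these summations can be modelled in C++ with plain vectors. This is a hypothetical sketch: each assignment to `prev_grad` stands in for a CalcHiddenGradient call that overwrites the previous layer's gradient buffer, and `residual` plays the role of the m_cAttentionOut gradient buffer, which already holds the gradient of the residual branch.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;

// Element-wise a += b.
void AddInPlace(Vec& a, const Vec& b)
{
    for (std::size_t i = 0; i < a.size(); ++i) a[i] += b[i];
}

// from_q, from_k, from_v: what each inner layer's gradient propagation
// would write into the previous layer's buffer (overwriting it).
Vec AccumulateInputGradient(Vec residual, const Vec& from_q,
                            const Vec& from_k, const Vec& from_v)
{
    Vec prev_grad;

    prev_grad = from_q;               // Query layer overwrites prev buffer
    AddInPlace(residual, prev_grad);  // accumulate in attention-output buffer

    prev_grad = from_k;               // Key layer overwrites prev buffer
    AddInPlace(residual, prev_grad);

    prev_grad = from_v;               // Value layer overwrites prev buffer
    AddInPlace(prev_grad, residual);  // final sum goes straight to prev buffer
    return prev_grad;
}
```

With a residual gradient of {1, 1} and branch gradients {2, 0}, {0, 3}, and {4, 4}, the previous layer receives {7, 8}, the sum of all four contributions.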

Above, we mentioned the NormlizeBufferGradient method, which corrects the error gradient for the data normalization process. What does normalization involve, and why is its derivative difficult to determine? At first glance, we simply subtract the arithmetic mean from each element of the array and divide the resulting difference by the standard deviation.

If we were subtracting and dividing by constants, there would be no difficulty. Subtracting a constant does not change the derivative:

$$\frac{\partial (x - c)}{\partial x} = 1$$

The derivative of division by a constant equals the reciprocal of that constant:

$$\frac{\partial}{\partial x}\left(\frac{x}{c}\right) = \frac{1}{c}$$

The problem is that both the mean and the standard deviation are themselves functions of the input data. Changing any single value in the input tensor changes the mean and the standard deviation and, consequently, affects every value in the output tensor of the normalization block. This makes the derivative of the whole function much more difficult to calculate. We will not present the derivation here and will instead use the ready-made result.
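For reference, one standard form of this result is shown below for a single vector of N values normalized without learnable scale and shift parameters, using the population standard deviation and no epsilon term. It may differ in form from the expressions the author uses. Here $g_i$ is the incoming gradient with respect to the normalized output $\hat{x}_i = (x_i - \mu)/\sigma$:

$$\frac{\partial \hat{x}_i}{\partial x_j} = \frac{1}{\sigma}\left(\delta_{ij} - \frac{1}{N} - \frac{\hat{x}_i\,\hat{x}_j}{N}\right)$$

$$\frac{\partial L}{\partial x_j} = \sum_{i=1}^{N} g_i \frac{\partial \hat{x}_i}{\partial x_j} = \frac{1}{\sigma}\left(g_j - \frac{1}{N}\sum_{i=1}^{N} g_i - \hat{x}_j\,\frac{1}{N}\sum_{i=1}^{N} g_i\,\hat{x}_i\right)$$

The expression needs only the normalized outputs, the gradient, and σ.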

Let's implement this result in code using MQL5 matrix operations. In its parameters, the method receives pointers to three data buffers:

- output — buffer with the results of normalizing feed-forward data

- gradient — error gradient buffer. It is used both for obtaining initial data and for recording results

- std — standard deviation buffer calculated during a forward pass.

As you can see, the parameters include neither the data buffer before normalization nor the arithmetic mean calculated during the forward pass. We simply replaced the difference between the non-normalized data and the arithmetic mean with the product of the normalized data and the standard deviation, since $x - \mu = \hat{x} \cdot \sigma$.

Although a zero standard deviation is unlikely, we add a check to prevent a critical division-by-zero error.
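A C++ sketch of such a gradient correction for one normalized vector follows, implementing the standard closed-form result for normalization without learnable parameters (population standard deviation, no epsilon). The function `NormalizeGradient` is hypothetical and works on plain vectors rather than the author's CBufferType buffers; like the method described above, it refuses to divide by a zero standard deviation.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// output:   normalized values x_hat from the forward pass
// gradient: dL/dx_hat on input, overwritten with dL/dx on return
// std_dev:  standard deviation saved during the forward pass
// Returns false instead of dividing by a zero standard deviation.
bool NormalizeGradient(const std::vector<double>& output,
                       std::vector<double>& gradient, double std_dev)
{
    if (std_dev == 0.0 || output.empty() || output.size() != gradient.size())
        return false;

    const double n = static_cast<double>(output.size());
    double mean_g = 0.0;   // mean of the incoming gradient
    double mean_gx = 0.0;  // mean of gradient * x_hat
    for (std::size_t i = 0; i < output.size(); ++i) {
        mean_g += gradient[i];
        mean_gx += gradient[i] * output[i];
    }
    mean_g /= n;
    mean_gx /= n;

    for (std::size_t i = 0; i < output.size(); ++i)
        gradient[i] = (gradient[i] - mean_g - output[i] * mean_gx) / std_dev;
    return true;
}
```

Two quick sanity checks: the corrected gradient sums to zero and is orthogonal to the normalized output, reflecting that normalization removes both the mean and the scale of its input.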

bool CNeuronAttention::NormlizeBufferGradient(CBufferType *output,


Besides the method that propagates the gradient through a hidden layer, the error backpropagation algorithm in all the neural layers we considered earlier includes two more methods:

- CalcDeltaWeights — method for calculating the error gradient to the level of the weight matrix

- UpdateWeights — method for updating weights

The CNeuronAttention class is no exception, and we override these two methods as well. Their algorithm is straightforward: we call the methods of the same name of all internal neural layers one by one, checking the result of each operation.
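This delegation pattern can be sketched in C++ as follows. The `LayerStep` type and `RunForAllLayers` function are hypothetical stand-ins: each step represents one internal layer's CalcDeltaWeights or UpdateWeights call, and the loop stops at the first failure, just as the result checks described above do.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Hypothetical stand-in for one internal layer's method call;
// returns false if the operation failed.
using LayerStep = std::function<bool()>;

// Calls each internal layer's method in order and stops at the first
// failure, propagating it to the caller.
bool RunForAllLayers(const std::vector<LayerStep>& steps)
{
    for (const LayerStep& step : steps)
        if (!step())
            return false;
    return true;
}
```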

bool CNeuronAttention::CalcDeltaWeights(CNeuronBase *prevLayer)


bool CNeuronAttention::UpdateWeights(int batch_size, TYPE learningRate,


In this way, we have implemented three methods that make up the backpropagation algorithm for our attention block.