Comparative testing of models with Dropout

Another stage of work on our library is complete. We have studied the Dropout method, which combats feature co-adaptation, and built a class that implements this algorithm in our models. In the previous section, we assembled a Python script for comparative testing of models with and without this method. Let's look at the results.

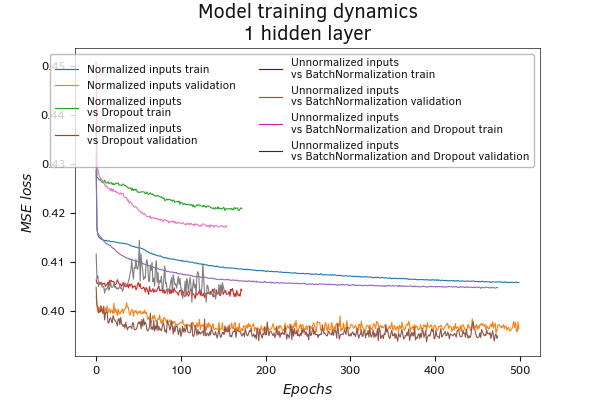

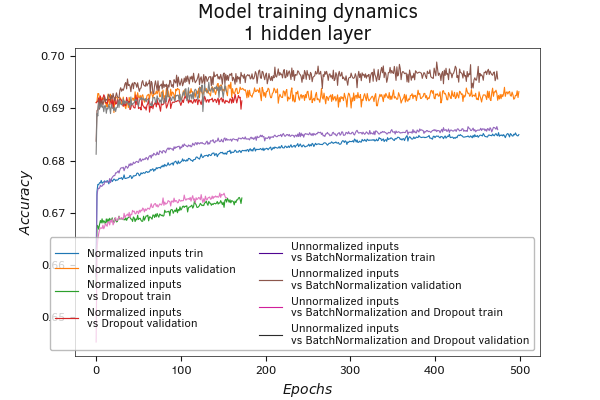

First, let's look at the training graphs for models with one hidden layer. The mean squared error of the models using Dropout was worse than that of the models without it. This applies both to the model trained on normalized data and to the model that uses a batch normalization layer to preprocess the input data. The two models with a Dropout layer behaved almost identically: their lines on the graph practically overlap during both training and validation.

Similar conclusions can be drawn from the dynamics of the Accuracy metric. However, unlike the MSE, the validation accuracy of the Dropout models is close to that of the other models.

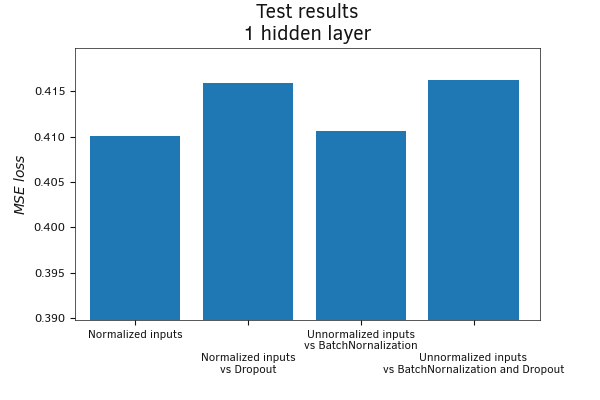

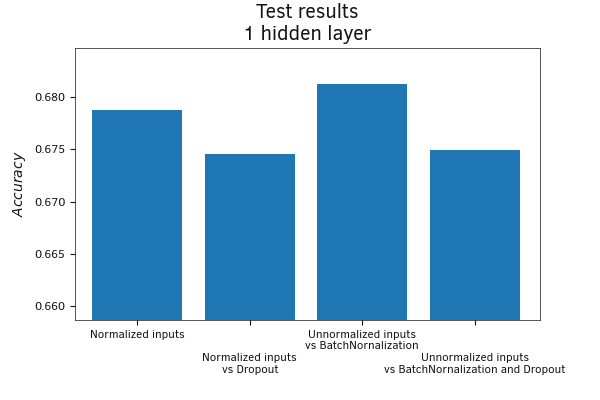

Evaluation on the test dataset also showed that the Dropout layer degraded model performance, in both mean squared error and Accuracy. We can only speculate about the reasons. One possible reason is that the models are too simple. They have few neurons to begin with, and masking some of them further reduces a capacity that is already limited.
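The capacity argument can be made concrete with a short formula. This is a sketch that assumes the commonly used inverted-dropout formulation. The symbols are notation introduced only for this illustration: $p$ is the drop probability, $n$ is the number of neurons in the layer, $x_i$ is a neuron's output, $m_i$ is its mask value, and $y_i$ is the masked output.

$$
m_i \sim \mathrm{Bernoulli}(1-p), \qquad y_i = \frac{m_i \, x_i}{1-p}, \qquad \mathbb{E}\left[\sum_{i=1}^{n} m_i\right] = n(1-p)
$$

For example, with hypothetical values $n = 40$ and $p = 0.3$, only about $40 \cdot 0.7 = 28$ neurons take part in each training step on average. In a small layer, that is a noticeable share of the available capacity.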

On the other hand, we selected uncorrelated variables at the data selection stage. With a small number of features and no strong correlations between them, co-adaptation is probably not pronounced in our models. As a result, the performance cost of Dropout may have outweighed its benefits.

Comparative model testing with Dropout (test sample)

That is just my guess. I do not have enough information to draw definite conclusions, and additional tests would be required. However, that is more a matter for scientific research, while our goal is the practical use of models. We experiment with various architectural solutions and choose the best one for each specific task.

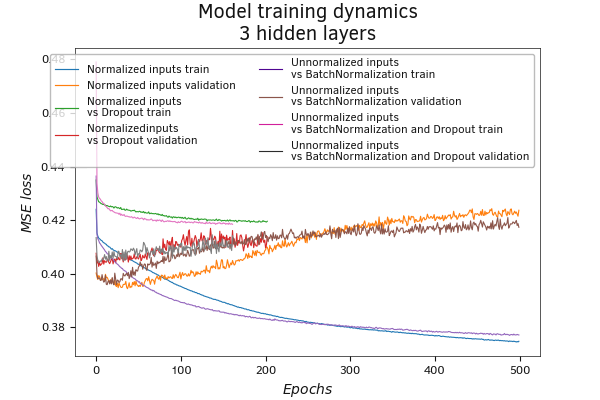

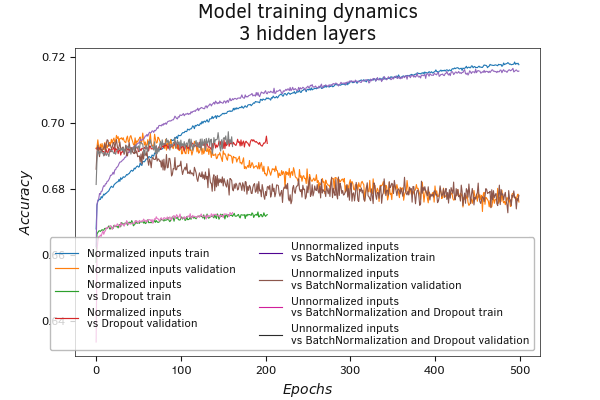

The second test used a Dropout layer before each fully connected layer in models with three hidden layers. Again, the models with Dropout performed worse than the others during training. During validation, however, the picture changes somewhat. As the number of training epochs grows, the models without Dropout tend to lose performance on the validation set, while the models with Dropout slightly improve or stay at a similar level.
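The layer arrangement described above can be sketched in plain Python. This is not the script from the previous section. The layer sizes, the 0.3 drop rate, the random weights, and all function names are assumptions made for illustration, and ReLU is applied to every layer only to keep the sketch short. The sketch also shows that masking is active only in training mode, which may be one factor behind training metrics looking worse than validation metrics for Dropout models.

```python
import random

def dropout(values, rate, training, rng):
    """Inverted dropout: mask and rescale in training, pass through otherwise."""
    if not training or rate == 0.0:
        return list(values)
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]

def dense_relu(values, weights, biases):
    """Fully connected layer with ReLU; weights[j] feeds output neuron j."""
    return [max(0.0, sum(w * v for w, v in zip(row, values)) + b)
            for row, b in zip(weights, biases)]

def forward(inputs, layers, rate, training, rng):
    """Apply Dropout before every fully connected layer."""
    values = list(inputs)
    for weights, biases in layers:
        values = dropout(values, rate, training, rng)
        values = dense_relu(values, weights, biases)
    return values

def make_layer(n_in, n_out, rng):
    """Random weights and zero biases (illustrative initialization only)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out

rng = random.Random(0)
sizes = [4, 8, 8, 8, 2]  # hypothetical: 4 inputs, three hidden layers, 2 outputs
layers = [make_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
x = [0.2, -0.1, 0.4, 0.3]

train_out = forward(x, layers, rate=0.3, training=True, rng=rng)
eval_a = forward(x, layers, rate=0.3, training=False, rng=rng)
eval_b = forward(x, layers, rate=0.3, training=False, rng=rng)
print("inference is repeatable:", eval_a == eval_b)
```

With `training=False` no random numbers are drawn, so repeated calls on the same input give the same output, while each call with `training=True` uses a fresh mask.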

This suggests that the use of a Dropout layer reduces the likelihood of model overfitting.

The graph of Accuracy values during training confirms this conclusion. As the number of training epochs grows, the gap between the training and validation values widens for the models without a Dropout layer. This indicates overfitting. For the models using Dropout, the gap narrows, which supports the earlier conclusion that a Dropout layer reduces the model's tendency to overfit.
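The gap analysis above can also be done numerically instead of by eye. The following is a minimal sketch. The function names and the three-epoch window are assumptions, and the accuracy histories are made-up numbers for illustration only, not the values from the graphs.

```python
def accuracy_gap(train_acc, val_acc):
    """Per-epoch difference between training and validation accuracy."""
    if len(train_acc) != len(val_acc):
        raise ValueError("histories must cover the same number of epochs")
    return [t - v for t, v in zip(train_acc, val_acc)]

def gap_change(gaps, window=3):
    """Mean gap over the last `window` epochs minus the mean over the first.

    A positive value means the gap widens (a sign of overfitting),
    a negative value means it narrows.
    """
    if len(gaps) < 2 * window:
        raise ValueError("need at least 2 * window epochs")
    head = sum(gaps[:window]) / window
    tail = sum(gaps[-window:]) / window
    return tail - head

# Made-up histories for illustration only; NOT the results shown in the graphs.
train_plain = [0.50, 0.56, 0.61, 0.66, 0.70, 0.74]
val_plain = [0.49, 0.52, 0.53, 0.53, 0.52, 0.51]
train_drop = [0.44, 0.47, 0.49, 0.50, 0.51, 0.52]
val_drop = [0.38, 0.43, 0.46, 0.48, 0.50, 0.51]

plain_change = gap_change(accuracy_gap(train_plain, val_plain))
drop_change = gap_change(accuracy_gap(train_drop, val_drop))
print("without Dropout, gap widening:", plain_change > 0)
print("with Dropout, gap narrowing:", drop_change < 0)
```

The same two functions can be applied to the per-epoch accuracy lists recorded by whatever training script is used, as long as both lists cover the same epochs.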

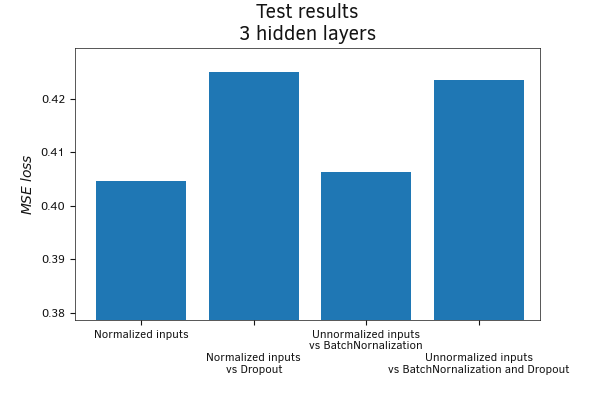

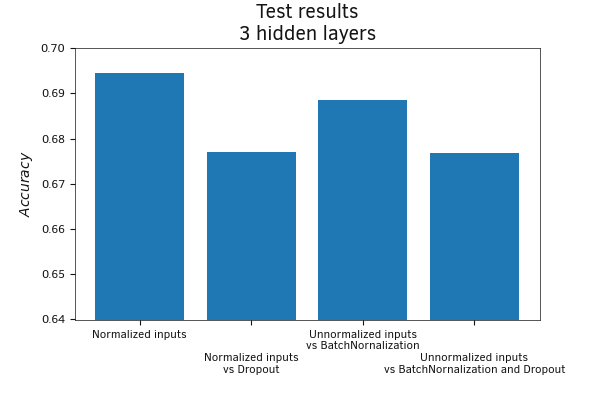

On the test dataset, models using the Dropout layer showed worse results in both metrics.

In this section, we ran comparative tests of models with and without Dropout. The following conclusions can be drawn from the tests:

- The use of the Dropout technology helps reduce the risk of model overfitting.

- The effectiveness of the Dropout technology increases as the model size grows.