Discussing the article: "Neural Networks in Trading: Mask-Attention-Free Approach to Price Movement Forecasting"

Hi Dmitriy,

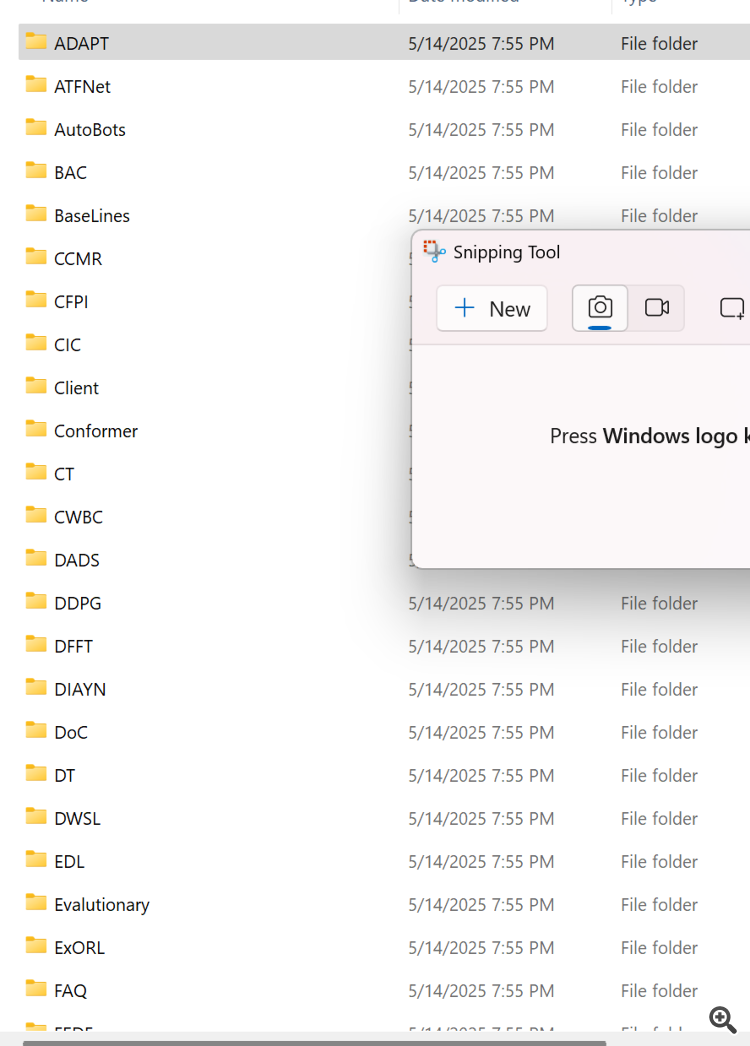

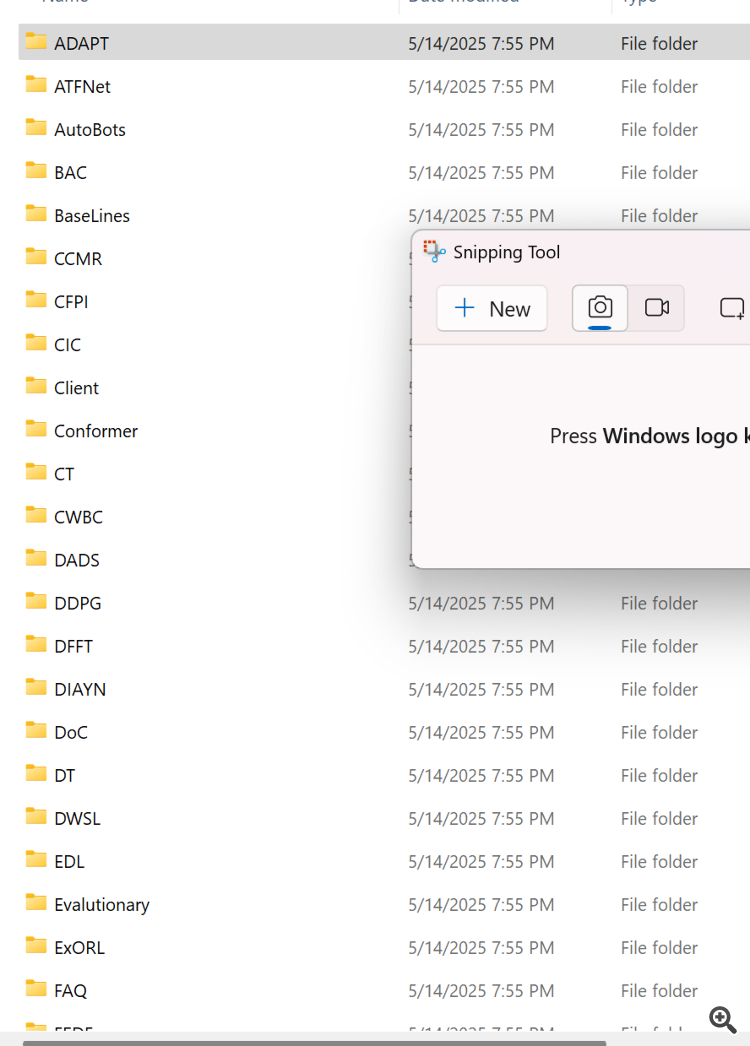

It seems like your zip files have been made incorrectly. I expected to see the source code listed in your box; instead, this is what the zip contained. It appears that each directory contains the files you have used in your various articles. Could you provide a description of each or, better yet, append the article number to each directory as appropriate?

Thanks

CapeCoddah

Hi CapeCoddah,

The zip file contains files from the whole series. The OpenCL program is saved in "MQL5\Experts\NeuroNet_DNG\NeuroNet.cl". You can find the library with all classes in "MQL5\Experts\NeuroNet_DNG\NeuroNet.mqh". The model and Experts referenced in this article are located in the directory "MQL5\Experts\MAFT\".

Regards,

Dmitriy.

Hi Dmitriy,

Thanks for the prompt response. I understand what you are saying, but I think you misunderstood me. How do I associate the subdirectory names with their respective articles, either by name or by article number, so that I can search for the article?

Cheers

CapeCoddah

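By the name of the framework: each subdirectory is named after the framework the article implements (for example, MAFT for this article).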

Check out the new article: Neural Networks in Trading: Mask-Attention-Free Approach to Price Movement Forecasting.

The SPFormer algorithm is a fully end-to-end pipeline that allows object queries to directly generate instance predictions. Using Transformer decoders, a fixed number of object queries aggregate global object information from the analyzed point cloud. Moreover, SPFormer uses object masks to guide cross-attention, requiring queries to attend only to masked features. However, in the early stages of training these masks are of low quality. This hampers performance in subsequent layers and increases the overall training complexity.
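To make mask-guided cross-attention concrete, here is a minimal Python sketch (the article's own code is MQL5/OpenCL, and nothing below is taken from it). Each query computes scaled dot-product scores against the point features, and scores for points outside its mask are set to -inf before the softmax, so the query aggregates only masked features. The function names are hypothetical, and the fallback to full attention when a mask is empty is an assumption added so the softmax stays defined.

```python
import math

def softmax(xs):
    """Numerically stable softmax; -inf entries receive zero weight."""
    m = max(xs)
    exps = [0.0 if x == float("-inf") else math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def masked_cross_attention(queries, keys, values, mask):
    """Each query attends only to the points its mask marks as True.

    queries: n_q vectors; keys/values: n_pts vectors each;
    mask: n_q x n_pts booleans (e.g. a thresholded predicted instance mask).
    """
    d = len(queries[0])
    scale = 1.0 / math.sqrt(d)
    out = []
    for q, row in zip(queries, mask):
        # Assumption: an empty mask falls back to full attention,
        # otherwise every logit would be -inf.
        allowed = row if any(row) else [True] * len(row)
        logits = [
            scale * sum(a * b for a, b in zip(q, k)) if ok else float("-inf")
            for k, ok in zip(keys, allowed)
        ]
        w = softmax(logits)
        # Weighted sum of value vectors, one output vector per query
        out.append([sum(wi * v[j] for wi, v in zip(w, values))
                    for j in range(len(values[0]))])
    return out

out = masked_cross_attention([[1.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]],
                             [[10.0], [20.0]], [[True, False]])
print(out)  # [[10.0]]
```

If an early, low-quality mask selects the wrong points, the query simply averages the wrong features, which is the training difficulty described above.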

To address this, the authors of the MAFT method introduce an auxiliary center regression task to guide instance segmentation. First, global positions $\mathcal{P}$ are selected from the raw point cloud, and global object features $\mathcal{F}$ are extracted by a backbone network. These can be voxels or superpoints. In addition to the content queries $\mathcal{Q}_0^c$, the authors of MAFT introduce a fixed number of positional queries $\mathcal{Q}_0^p$, which represent normalized object centers. $\mathcal{Q}_0^p$ is initialized randomly, while $\mathcal{Q}_0^c$ starts with zero values. The core idea is to let the positional queries guide the corresponding content queries in cross-attention, and then iteratively refine both query sets to predict object centers, classes, and masks.
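The query setup and mask-free guidance described above can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not the MAFT implementation from the article: the squared-distance attention bias (weighted by a hypothetical parameter `beta`) and the weighted-mean center update are stand-ins for the learned positional guidance and center regression head, which are not specified here. What it does follow from the text: positional queries start as random normalized centers, content queries start at zero, no masks are used, and both query sets are refined layer by layer.

```python
import math
import random

def init_queries(n_queries, d_model, seed=0):
    """Positional queries: random normalized centers in [0, 1)^3.
    Content queries: zero vectors of size d_model."""
    rng = random.Random(seed)
    pos_q = [[rng.random() for _ in range(3)] for _ in range(n_queries)]
    cont_q = [[0.0] * d_model for _ in range(n_queries)]
    return pos_q, cont_q

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def position_guided_layer(pos_q, cont_q, points, feats, beta=10.0):
    """One mask-free decoder step (hypothetical simplification).

    logit = content similarity - beta * squared distance from the query
    center to each point, so the positional query steers where its content
    query looks. The new center is the attention-weighted mean of point
    positions, standing in for a learned center-regression head.
    feats must have d_model components, matching the content queries.
    """
    d = len(cont_q[0])
    new_pos, new_cont = [], []
    for c, q in zip(pos_q, cont_q):
        logits = []
        for p, f in zip(points, feats):
            sim = sum(a * b for a, b in zip(q, f)) / math.sqrt(d)
            dist2 = sum((a - b) ** 2 for a, b in zip(c, p))
            logits.append(sim - beta * dist2)
        w = softmax(logits)
        # Residual update of the content query with aggregated features
        new_cont.append([qj + sum(wi * f[j] for wi, f in zip(w, feats))
                         for j, qj in enumerate(q)])
        # Refined center estimate
        new_pos.append([sum(wi * p[k] for wi, p in zip(w, points))
                        for k in range(3)])
    return new_pos, new_cont

points = [[0.1, 0.1, 0.1], [0.9, 0.9, 0.9]]
feats = [[1.0, 0.0], [0.0, 1.0]]
pos_q, cont_q = init_queries(n_queries=2, d_model=2)
for _ in range(3):  # iterative refinement across decoder layers
    pos_q, cont_q = position_guided_layer(pos_q, cont_q, points, feats)
```

Because the content queries start at zero, the first layer's attention is driven purely by position, which is exactly where the positional queries do their guiding; content similarity only starts to contribute once the content queries have absorbed some features.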

Author: Dmitriy Gizlyk