Comparative testing of Attention models

We have done a lot of work while studying and implementing the Multi-Head Self-Attention algorithm. We even managed to implement it on several platforms. Earlier we created new classes only for our library in MQL5. This time we got acquainted with the possibility of creating custom neural layers in Python using the TensorFlow library. Now it's time to look at the results of our labor and evaluate the opportunities offered to us by the new technology.

As usual, we start testing with models created using standard MQL5 tools. We already began this work when testing the Self-Attention algorithm. To run the new test, we take attention_test.mq5 from the previous test and create a copy of it named attention_test2.mq5.

When creating the new class for multi-head attention, we largely inherited processes from the Self-Attention algorithm. Some methods were inherited entirely, while for others we took the Self-Attention methods as a foundation and built new functionality through minor adjustments. As a result, the testing script does not require major changes: all changes affect only the block that declares the new layer.

Our first change is, of course, the type of neural layer we are creating. In the type parameter, we will specify the defNeuronMHAttention constant, which corresponds to the multi-head attention class.

We also need to indicate the number of attention heads used. We will specify this value in the step parameter. I agree that the parameter name does not match this new purpose. However, I decided not to create an additional parameter but to reuse one of the available free fields instead.

After that, we will once again go through the script code and carefully examine the key checkpoints for executing operations.

That's it. Such changes are sufficient for the first test to evaluate the net impact of the solution architecture on the model results.

//--- Attention layer


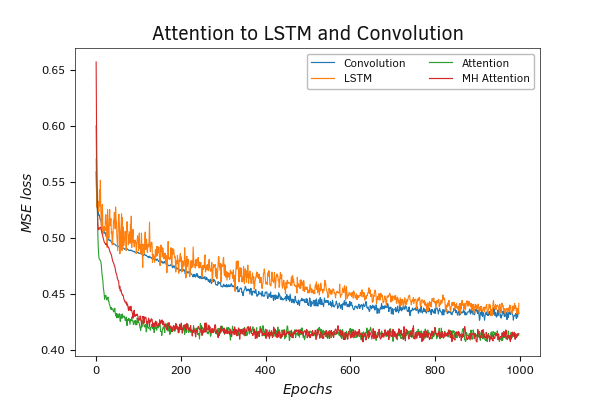

We ran the test on the same training dataset, keeping all other model parameters unchanged. The results are shown in the accompanying graphs.

We have already seen that even Self-Attention alone outperforms the previously considered convolutional and recurrent architectures. Increasing the number of attention heads also yields a positive result.

The graphs of the neural network error dynamics on the training dataset clearly show that models using the attention mechanism train much faster than the other models. The extra parameters introduced by additional attention heads require slightly more training time, but this increase is not critical. At the same time, the additional attention heads can reduce the model error.
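To get a feel for how the number of trainable parameters grows with the number of heads, here is a rough sketch in Python. It assumes a common multi-head layout in which every head has its own query, key, and value projection and a single output matrix merges the concatenated heads back to the input width; biases and the Feed Forward block are ignored. The function name and the sizes are hypothetical and are not taken from our library.

```python
def attention_projection_params(window: int, key_size: int, heads: int) -> int:
    """Count projection weights in a multi-head attention block.

    Assumed layout (an illustration, not the library's actual code):
      - each head owns W_q, W_k, W_v of shape (window, key_size)
      - one output matrix of shape (heads * key_size, window)
    Biases and the Feed Forward block are left out.
    """
    if min(window, key_size, heads) < 1:
        raise ValueError("all sizes must be positive")
    qkv_weights = 3 * heads * window * key_size
    output_weights = heads * key_size * window
    return qkv_weights + output_weights


if __name__ == "__main__":
    # Hypothetical sizes chosen only for illustration.
    for heads in (1, 2, 4, 8):
        total = attention_projection_params(window=4, key_size=8, heads=heads)
        print(f"heads={heads}: {total} projection weights")
```

Under these assumptions the count is linear in the number of heads, which is consistent with the modest increase in training time seen in the test.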

Comparative Testing of Attention Models

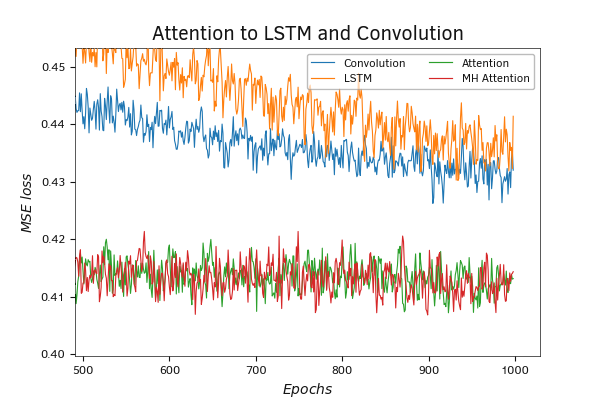

If we zoom in, we can clearly see that the error of the models using the attention mechanism remains lower throughout the entire training. At the same time, additional attention heads further improve performance.


Note that the model using the convolutional layer has the largest number of trainable parameters, yet it does not achieve the lowest error. This is one more reason to reconsider how rationally resources are used and to keep exploring the new technologies that emerge every day.

Speaking of the rational use of resources, I also want to caution against an unjustified increase in the number of attention heads. Each attention head consumes additional resources. Find a balance between the resources consumed and the benefit they bring to the overall result. There is no universal answer: the decision should be made on a case-by-case basis.
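One possible way to formalize such a trade-off is sketched below in Python: among the tested configurations, take the smallest number of heads whose error is within a chosen tolerance of the best one. The helper name, the tolerance, and the numbers in the usage example are made up purely for illustration; they are not results of our tests.

```python
def smallest_adequate_heads(results: dict, tolerance: float) -> int:
    """Return the fewest heads whose error is within `tolerance` of the best.

    `results` maps a number of attention heads to the validation error
    measured for that configuration.
    """
    if not results:
        raise ValueError("results must not be empty")
    if tolerance < 0:
        raise ValueError("tolerance must be non-negative")
    best_error = min(results.values())
    for heads in sorted(results):
        if results[heads] - best_error <= tolerance:
            return heads
    raise RuntimeError("unreachable: the best configuration always qualifies")


if __name__ == "__main__":
    # Placeholder errors, NOT measurements from this chapter.
    placeholder_errors = {1: 0.30, 2: 0.25, 4: 0.24, 8: 0.235}
    print(smallest_adequate_heads(placeholder_errors, tolerance=0.02))
```

The tolerance expresses how much error you are willing to trade for a lighter model, so it has to be chosen for each task separately.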

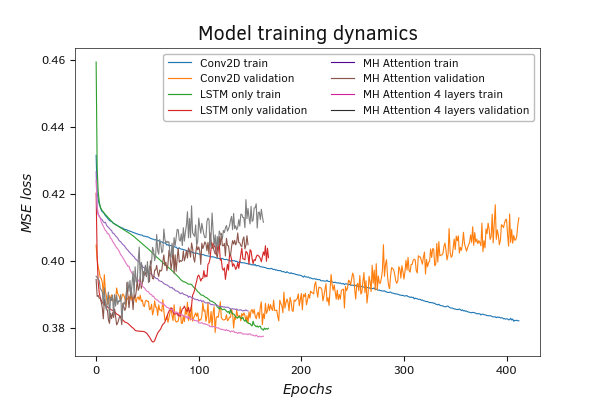

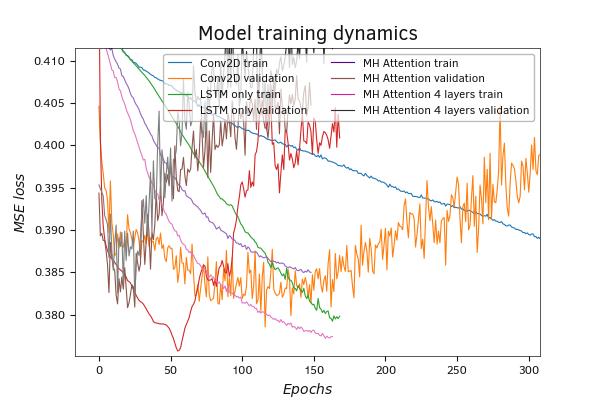

The results of test training of the models written in Python also confirm the above conclusions. Models that employ attention mechanisms train faster and are less prone to overfitting, as shown by the smaller gap between the training and validation error graphs. Increasing the number of attention layers used reduces the overall model error, other conditions being equal.
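The gap between the training and validation curves can also be expressed as a number. The sketch below averages the difference between validation and training loss over the last few epochs. The function name and the `last_n` parameter are hypothetical; the input lists are assumed to be per-epoch losses such as those Keras stores in `History.history["loss"]` and `History.history["val_loss"]` when validation data is passed to `fit`.

```python
def generalization_gap(train_loss, val_loss, last_n=5):
    """Mean (validation - training) loss over the last `last_n` epochs.

    A larger positive value suggests stronger overfitting.
    """
    if len(train_loss) != len(val_loss):
        raise ValueError("loss histories must have the same length")
    if not train_loss:
        raise ValueError("loss histories must not be empty")
    if last_n < 1:
        raise ValueError("last_n must be positive")
    n = min(last_n, len(train_loss))
    diffs = [v - t for t, v in zip(train_loss[-n:], val_loss[-n:])]
    return sum(diffs) / n
```

With a trained Keras model this could be called as `generalization_gap(history.history["loss"], history.history["val_loss"])` for each model, and the values compared between models.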

As you zoom in, you'll notice that the curves of the models using attention mechanisms are smoother and have fewer breaks. This indicates a clearer identification of dependencies and steady progress towards minimizing the error. This can be partly explained by the normalization of results within the Self-Attention block, which produces output with the same statistical characteristics regardless of the scale of the input.
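The effect of such normalization can be shown with a few lines of plain Python. This is a minimal sketch that assumes the normalization is of the layer-normalization kind (each vector is shifted to zero mean and scaled to unit variance) and omits the trainable scale and shift parameters; the function name is hypothetical.

```python
import math


def layer_normalize(values, eps=1e-5):
    """Normalize one vector to zero mean and (almost) unit variance.

    Assumption: plain layer normalization without trainable parameters.
    `eps` guards against division by zero for constant vectors.
    """
    if not values:
        raise ValueError("values must not be empty")
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    scale = math.sqrt(variance + eps)
    return [(v - mean) / scale for v in values]


if __name__ == "__main__":
    small = layer_normalize([1.0, 2.0, 3.0, 4.0])
    large = layer_normalize([100.0, 200.0, 300.0, 400.0])
    # Both vectors end up with practically the same normalized values.
    print(small)
    print(large)
```

Two inputs that differ a hundredfold in scale produce practically the same output, so the subsequent layers receive data with a stable distribution.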

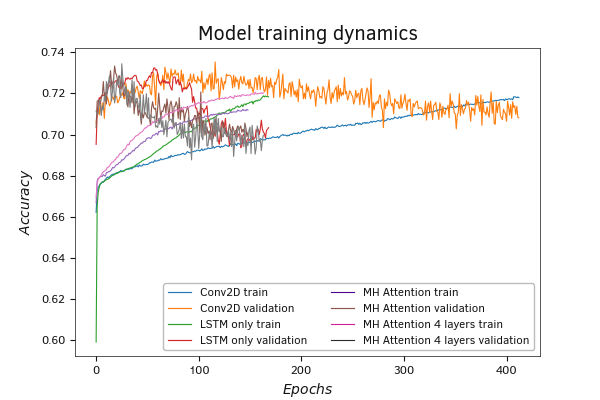

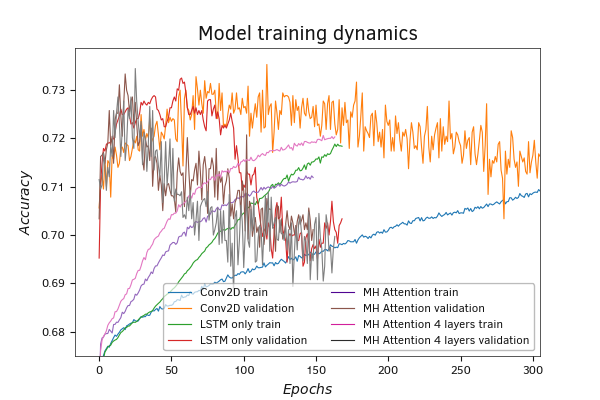

The graph of the test results for the Accuracy metric also confirms our conclusions.

Results of test training of Python attention models
