What is the point of your indicator, comrade... it resembles an ordinary average.

I guess the meaning is in the name )

Opened the code - couldn't figure it out....)))

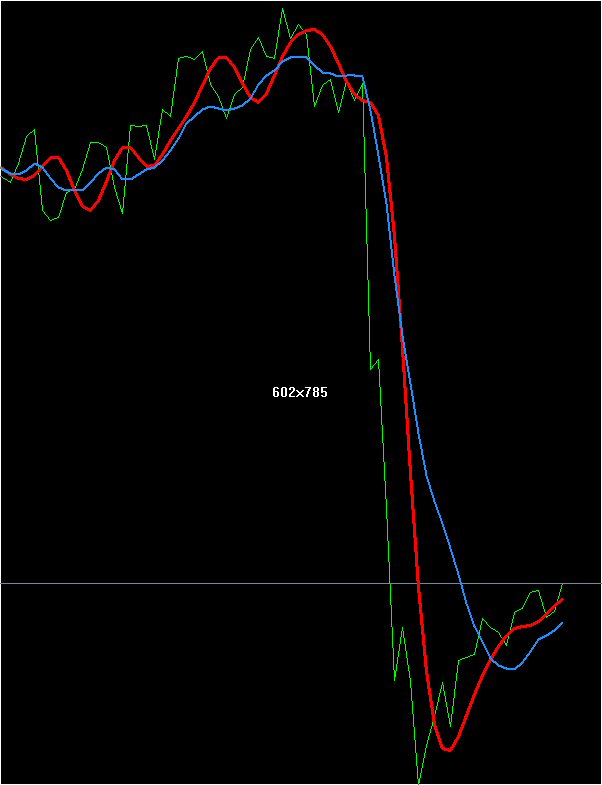

The point of this development is to create an indicator with good smoothing and minimal lag. This indicator is only a starting point, a draft of a strategy that I decided to implement. I have described my approach to building indicators in many posts; here I decided to pull that material together, and I will show the results as I develop this strategy further. I think most readers of this thread have not dealt with formalised neural networks, although I refer to them often.

To forte928: You are right, this is exactly what I intend to tackle. It is hard to do much with ordinary moving averages, but when the indicator is implemented on neural networks, with prediction elements and a compensating feedback system embedded in the algorithm, there is a chance of getting decent performance.

To luka: This is also one of the advantages of this approach. There is no need for sophisticated protection or code obfuscation: even with open source code, the strategy cannot be unravelled.

Piligrimm, please define "formalised neural networks"; that would make things clearer.

In this case I am using the PolyAnalyst package, which, after training a neural network, lets me represent the relationship between inputs and outputs as a polynomial. This polynomial is the formalised neural network.
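To make the idea of a network "formalised" as a polynomial more concrete, here is a minimal Python sketch. It is not the indicator's actual polynomial and not PolyAnalyst output: the term layout, the coefficients in `EXAMPLE_TERMS`, and the function names are all hypothetical placeholders. It only shows how a fixed polynomial of the current and previous price could be evaluated bar by bar.

```python
from math import prod

# Hypothetical polynomial: each key is a tuple of exponents, one per input,
# and each value is the coefficient of that term. These numbers are
# placeholders for illustration, not coefficients from the indicator.
EXAMPLE_TERMS = {
    (0, 0): 0.0,   # constant term
    (1, 0): 0.6,   # 0.6 * x0 (current price)
    (0, 1): 0.4,   # 0.4 * x1 (previous price)
    (1, 1): 0.0,   # cross term x0 * x1, switched off here
}


def eval_polynomial(terms, inputs):
    """Evaluate sum(coef * prod(x_i ** e_i)) for the given inputs."""
    total = 0.0
    for exponents, coef in terms.items():
        if len(exponents) != len(inputs):
            raise ValueError("each term needs one exponent per input")
        total += coef * prod(x ** e for x, e in zip(inputs, exponents))
    return total


def polynomial_indicator(prices, terms=EXAMPLE_TERMS):
    """Apply the polynomial to the current and earlier prices on every bar."""
    n_inputs = len(next(iter(terms)))
    out = []
    for i in range(len(prices)):
        # Only the current bar and earlier bars are used; the start of the
        # series is padded with the first price.
        window = [prices[max(i - k, 0)] for k in range(n_inputs)]
        out.append(eval_polynomial(terms, window))
    return out
```

With these placeholder coefficients the polynomial collapses to a two-bar weighted average; non-zero cross terms or higher powers are what would make the mapping non-linear.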

Pretty words do not make the picture any clearer; what you propose is unlikely to produce anything sensible. Both the inputs and the outputs should be meaningful, and that seems to be missing on both ends.

Indeed, a 2nd-order Butterworth LPF (red line) shows results not much worse than your neural network filter. By the way, where is the NN in the code, and why does your creation repaint? That is a rhetorical question. Since, with repainting, what we see on the history does not correspond to reality, the real question is: why are you showing us something that is not really there?

Indeed, Butterworth's 2nd order LPF (red line) does not show much worse results

Can you please tell me where I can get a Butterworth LPF?
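For anyone with the same question, a 2nd-order Butterworth low-pass filter is short enough to write by hand. The sketch below is in Python rather than the terminal's own language, and it is not the code behind the red line in the screenshot: the function name `butterworth_lpf2` and the `cutoff_period` parameter (cutoff expressed in bars) are assumptions made for illustration. It uses the standard bilinear-transform biquad with Q = 1/sqrt(2).

```python
import math


def butterworth_lpf2(prices, cutoff_period=20.0):
    """Causal 2nd-order Butterworth low-pass filter (bilinear-transform biquad).

    cutoff_period is the -3 dB cutoff in bars and must be greater than 2.
    """
    if cutoff_period <= 2.0:
        raise ValueError("cutoff_period must be greater than 2 bars")
    if not prices:
        return []

    k = math.tan(math.pi / cutoff_period)  # pre-warped cutoff frequency
    q = 1.0 / math.sqrt(2.0)               # Q of a Butterworth section
    norm = 1.0 / (1.0 + k / q + k * k)
    b0 = k * k * norm
    b1 = 2.0 * b0
    b2 = b0
    a1 = 2.0 * (k * k - 1.0) * norm
    a2 = (1.0 - k / q + k * k) * norm

    # Seed the filter state with the first price to avoid a start-up jump.
    x1 = x2 = y1 = y2 = prices[0]
    out = []
    for x0 in prices:
        # Each output depends only on the current and past bars.
        y0 = b0 * x0 + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        out.append(y0)
        x2, x1 = x1, x0
        y2, y1 = y1, y0
    return out
```

Because each output value is computed from the current and earlier bars only, values already drawn on the history do not change when new bars arrive, so a filter of this form does not repaint.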

In the meantime, I made a rough draft of an indicator based on a formalised neural network today. At first glance, it has a chance of becoming a good indicator. In any case, it has great potential for improvement: the smoothness can be increased significantly and additional signals can be introduced. I am currently debugging one trading system (TS); not everything is going well, so I will not have time to run serious tests or build my own TS around this indicator.

If anyone is interested in this indicator and there are serious proposals for cooperation and for building a TS on it, I am ready to finish it for a specific strategy; it will not take much time.