Building Your First Glass-box Model Using Python And MQL5

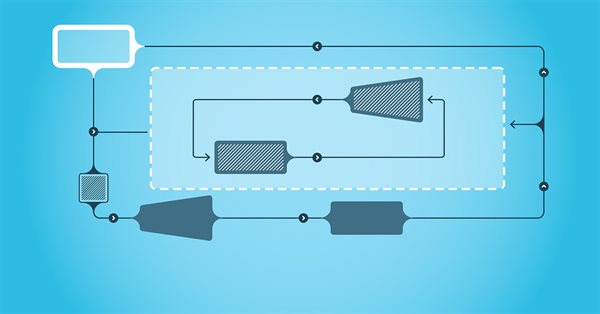

Machine learning models are difficult to interpret and understanding why our models deviate from our expectations is critical if we want to gain any value from using such advanced techniques. Without comprehensive insight into the inner workings of our model, we might fail to spot bugs that are corrupting our model's performance, we may waste time over engineering features that aren't predictive and in the long run we risk underutilizing the power of these models. Fortunately, there is a sophisticated and well maintained all in one solution that allows us to see exactly what our model is doing underneath the hood.

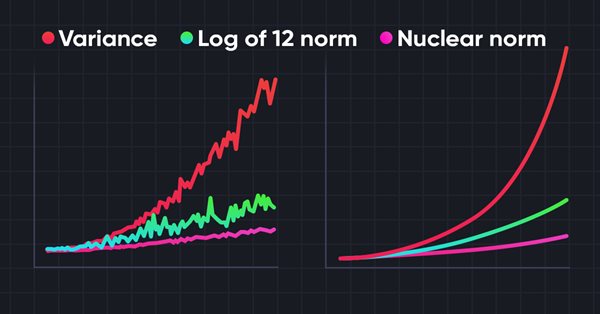

Neural networks made easy (Part 56): Using nuclear norm to drive research

The study of the environment in reinforcement learning is a pressing problem. We have already looked at some approaches previously. In this article, we will have a look at yet another method based on maximizing the nuclear norm. It allows agents to identify environmental states with a high degree of novelty and diversity.

Data Science and Machine Learning (Part 17): Money in the Trees? The Art and Science of Random Forests in Forex Trading

Discover the secrets of algorithmic alchemy as we guide you through the blend of artistry and precision in decoding financial landscapes. Unearth how Random Forests transform data into predictive prowess, offering a unique perspective on navigating the complex terrain of stock markets. Join us on this journey into the heart of financial wizardry, where we demystify the role of Random Forests in shaping market destiny and unlocking the doors to lucrative opportunities

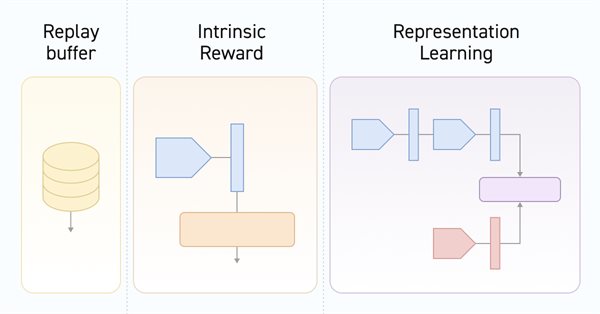

Neural networks made easy (Part 55): Contrastive intrinsic control (CIC)

Contrastive training is an unsupervised method of training representation. Its goal is to train a model to highlight similarities and differences in data sets. In this article, we will talk about using contrastive training approaches to explore different Actor skills.

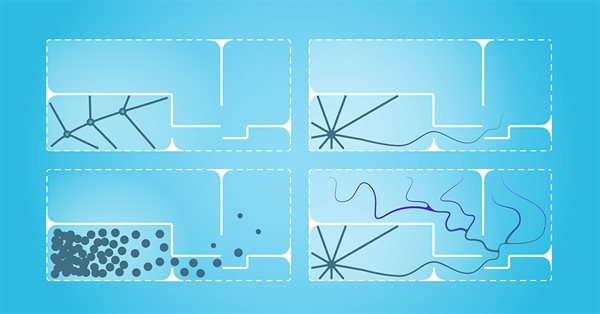

MQL5 Wizard Techniques you should know (Part 09): Pairing K-Means Clustering with Fractal Waves

K-Means clustering takes the approach to grouping data points as a process that’s initially focused on the macro view of a data set that uses random generated cluster centroids before zooming in and adjusting these centroids to accurately represent the data set. We will look at this and exploit a few of its use cases.

Filtering and feature extraction in the frequency domain

In this article we explore the application of digital filters on time series represented in the frequency domain so as to extract unique features that may be useful to prediction models.

Data Science and Machine Learning (Part 16): A Refreshing Look at Decision Trees

Dive into the intricate world of decision trees in the latest installment of our Data Science and Machine Learning series. Tailored for traders seeking strategic insights, this article serves as a comprehensive recap, shedding light on the powerful role decision trees play in the analysis of market trends. Explore the roots and branches of these algorithmic trees, unlocking their potential to enhance your trading decisions. Join us for a refreshing perspective on decision trees and discover how they can be your allies in navigating the complexities of financial markets.

Neural networks made easy (Part 54): Using random encoder for efficient research (RE3)

Whenever we consider reinforcement learning methods, we are faced with the issue of efficiently exploring the environment. Solving this issue often leads to complication of the algorithm and training of additional models. In this article, we will look at an alternative approach to solving this problem.

Data label for time series mining (Part 4):Interpretability Decomposition Using Label Data

This series of articles introduces several time series labeling methods, which can create data that meets most artificial intelligence models, and targeted data labeling according to needs can make the trained artificial intelligence model more in line with the expected design, improve the accuracy of our model, and even help the model make a qualitative leap!

MQL5 Wizard Techniques you should know (Part 08): Perceptrons

Perceptrons, single hidden layer networks, can be a good segue for anyone familiar with basic automated trading and is looking to dip into neural networks. We take a step by step look at how this could be realized in a signal class assembly that is part of the MQL5 Wizard classes for expert advisors.

Neural networks made easy (Part 53): Reward decomposition

We have already talked more than once about the importance of correctly selecting the reward function, which we use to stimulate the desired behavior of the Agent by adding rewards or penalties for individual actions. But the question remains open about the decryption of our signals by the Agent. In this article, we will talk about reward decomposition in terms of transmitting individual signals to the trained Agent.

Introduction to MQL5 (Part 1): A Beginner's Guide into Algorithmic Trading

Dive into the fascinating realm of algorithmic trading with our beginner-friendly guide to MQL5 programming. Discover the essentials of MQL5, the language powering MetaTrader 5, as we demystify the world of automated trading. From understanding the basics to taking your first steps in coding, this article is your key to unlocking the potential of algorithmic trading even without a programming background. Join us on a journey where simplicity meets sophistication in the exciting universe of MQL5.

Neural networks made easy (Part 52): Research with optimism and distribution correction

As the model is trained based on the experience reproduction buffer, the current Actor policy moves further and further away from the stored examples, which reduces the efficiency of training the model as a whole. In this article, we will look at the algorithm of improving the efficiency of using samples in reinforcement learning algorithms.

The case for using a Composite Data Set this Q4 in weighing SPDR XLY's next performance

We consider XLY, SPDR’s consumer discretionary spending ETF and see if with tools in MetaTrader’s IDE we can sift through an array of data sets in selecting what could work with a forecasting model with a forward outlook of not more than a year.

Neural networks made easy (Part 51): Behavior-Guided Actor-Critic (BAC)

The last two articles considered the Soft Actor-Critic algorithm, which incorporates entropy regularization into the reward function. This approach balances environmental exploration and model exploitation, but it is only applicable to stochastic models. The current article proposes an alternative approach that is applicable to both stochastic and deterministic models.

Neural networks made easy (Part 50): Soft Actor-Critic (model optimization)

In the previous article, we implemented the Soft Actor-Critic algorithm, but were unable to train a profitable model. Here we will optimize the previously created model to obtain the desired results.

The case for using Hospital-Performance Data with Perceptrons, this Q4, in weighing SPDR XLV's next Performance

XLV is SPDR healthcare ETF and in an age where it is common to be bombarded by a wide array of traditional news items plus social media feeds, it can be pressing to select a data set for use with a model. We try to tackle this problem for this ETF by sizing up some of its critical data sets in MQL5.

Data Science and Machine Learning (Part 15): SVM, A Must-Have Tool in Every Trader's Toolbox

Discover the indispensable role of Support Vector Machines (SVM) in shaping the future of trading. This comprehensive guide explores how SVM can elevate your trading strategies, enhance decision-making, and unlock new opportunities in the financial markets. Dive into the world of SVM with real-world applications, step-by-step tutorials, and expert insights. Equip yourself with the essential tool that can help you navigate the complexities of modern trading. Elevate your trading game with SVM—a must-have for every trader's toolbox.

Regression models of the Scikit-learn Library and their export to ONNX

In this article, we will explore the application of regression models from the Scikit-learn package, attempt to convert them into ONNX format, and use the resultant models within MQL5 programs. Additionally, we will compare the accuracy of the original models with their ONNX versions for both float and double precision. Furthermore, we will examine the ONNX representation of regression models, aiming to provide a better understanding of their internal structure and operational principles.

Neural networks made easy (Part 49): Soft Actor-Critic

We continue our discussion of reinforcement learning algorithms for solving continuous action space problems. In this article, I will present the Soft Actor-Critic (SAC) algorithm. The main advantage of SAC is the ability to find optimal policies that not only maximize the expected reward, but also have maximum entropy (diversity) of actions.

Neural networks made easy (Part 48): Methods for reducing overestimation of Q-function values

In the previous article, we introduced the DDPG method, which allows training models in a continuous action space. However, like other Q-learning methods, DDPG is prone to overestimating Q-function values. This problem often results in training an agent with a suboptimal strategy. In this article, we will look at some approaches to overcome the mentioned issue.

Neural networks made easy (Part 47): Continuous action space

In this article, we expand the range of tasks of our agent. The training process will include some aspects of money and risk management, which are an integral part of any trading strategy.

MQL5 Wizard Techniques you should know (Part 07): Dendrograms

Data classification for purposes of analysis and forecasting is a very diverse arena within machine learning and it features a large number of approaches and methods. This piece looks at one such approach, namely Agglomerative Hierarchical Classification.

Neural networks made easy (Part 46): Goal-conditioned reinforcement learning (GCRL)

In this article, we will have a look at yet another reinforcement learning approach. It is called goal-conditioned reinforcement learning (GCRL). In this approach, an agent is trained to achieve different goals in specific scenarios.

Neural networks made easy (Part 45): Training state exploration skills

Training useful skills without an explicit reward function is one of the main challenges in hierarchical reinforcement learning. Previously, we already got acquainted with two algorithms for solving this problem. But the question of the completeness of environmental research remains open. This article demonstrates a different approach to skill training, the use of which directly depends on the current state of the system.

Neural networks made easy (Part 44): Learning skills with dynamics in mind

In the previous article, we introduced the DIAYN method, which offers the algorithm for learning a variety of skills. The acquired skills can be used for various tasks. But such skills can be quite unpredictable, which can make them difficult to use. In this article, we will look at an algorithm for learning predictable skills.

Neural networks made easy (Part 43): Mastering skills without the reward function

The problem of reinforcement learning lies in the need to define a reward function. It can be complex or difficult to formalize. To address this problem, activity-based and environment-based approaches are being explored to learn skills without an explicit reward function.

Neural networks made easy (Part 42): Model procrastination, reasons and solutions

In the context of reinforcement learning, model procrastination can be caused by several reasons. The article considers some of the possible causes of model procrastination and methods for overcoming them.

Integrate Your Own LLM into EA (Part 2): Example of Environment Deployment

With the rapid development of artificial intelligence today, language models (LLMs) are an important part of artificial intelligence, so we should think about how to integrate powerful LLMs into our algorithmic trading. For most people, it is difficult to fine-tune these powerful models according to their needs, deploy them locally, and then apply them to algorithmic trading. This series of articles will take a step-by-step approach to achieve this goal.

Neural networks made easy (Part 41): Hierarchical models

The article describes hierarchical training models that offer an effective approach to solving complex machine learning problems. Hierarchical models consist of several levels, each of which is responsible for different aspects of the task.

Neural networks made easy (Part 40): Using Go-Explore on large amounts of data

This article discusses the use of the Go-Explore algorithm over a long training period, since the random action selection strategy may not lead to a profitable pass as training time increases.

Integrate Your Own LLM into EA (Part 1): Hardware and Environment Deployment

With the rapid development of artificial intelligence today, language models (LLMs) are an important part of artificial intelligence, so we should think about how to integrate powerful LLMs into our algorithmic trading. For most people, it is difficult to fine-tune these powerful models according to their needs, deploy them locally, and then apply them to algorithmic trading. This series of articles will take a step-by-step approach to achieve this goal.

Mastering ONNX: The Game-Changer for MQL5 Traders

Dive into the world of ONNX, the powerful open-standard format for exchanging machine learning models. Discover how leveraging ONNX can revolutionize algorithmic trading in MQL5, allowing traders to seamlessly integrate cutting-edge AI models and elevate their strategies to new heights. Uncover the secrets to cross-platform compatibility and learn how to unlock the full potential of ONNX in your MQL5 trading endeavors. Elevate your trading game with this comprehensive guide to Mastering ONNX

Data label for time series mining (Part 3):Example for using label data

This series of articles introduces several time series labeling methods, which can create data that meets most artificial intelligence models, and targeted data labeling according to needs can make the trained artificial intelligence model more in line with the expected design, improve the accuracy of our model, and even help the model make a qualitative leap!

Category Theory in MQL5 (Part 23): A different look at the Double Exponential Moving Average

In this article we continue with our theme in the last of tackling everyday trading indicators viewed in a ‘new’ light. We are handling horizontal composition of natural transformations for this piece and the best indicator for this, that expands on what we just covered, is the double exponential moving average (DEMA).

Classification models in the Scikit-Learn library and their export to ONNX

In this article, we will explore the application of all classification models available in the Scikit-Learn library to solve the classification task of Fisher's Iris dataset. We will attempt to convert these models into ONNX format and utilize the resulting models in MQL5 programs. Additionally, we will compare the accuracy of the original models with their ONNX versions on the full Iris dataset.

Category Theory in MQL5 (Part 22): A different look at Moving Averages

In this article we attempt to simplify our illustration of concepts covered in these series by dwelling on just one indicator, the most common and probably the easiest to understand. The moving average. In doing so we consider significance and possible applications of vertical natural transformations.

Neural networks made easy (Part 39): Go-Explore, a different approach to exploration

We continue studying the environment in reinforcement learning models. And in this article we will look at another algorithm – Go-Explore, which allows you to effectively explore the environment at the model training stage.

Neural networks made easy (Part 38): Self-Supervised Exploration via Disagreement

One of the key problems within reinforcement learning is environmental exploration. Previously, we have already seen the research method based on Intrinsic Curiosity. Today I propose to look at another algorithm: Exploration via Disagreement.

Category Theory in MQL5 (Part 21): Natural Transformations with LDA

This article, the 21st in our series, continues with a look at Natural Transformations and how they can be implemented using linear discriminant analysis. We present applications of this in a signal class format, like in the previous article.