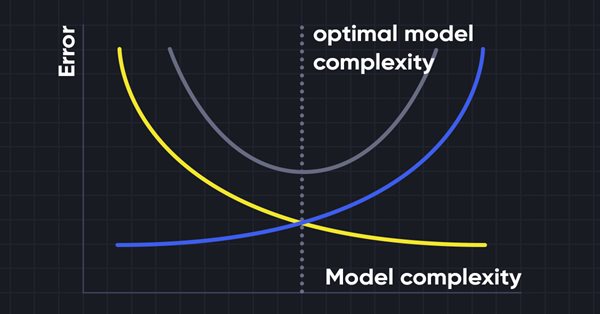

Data Science and Machine Learning (Part 10): Ridge Regression

Ridge regression is a simple technique to reduce model complexity and prevent the overfitting that may result from simple linear regression.

Population optimization algorithms: Grey Wolf Optimizer (GWO)

Let's consider one of the newest optimization algorithms: Grey Wolf Optimization. Its original behavior on test functions makes this algorithm one of the most interesting among the ones considered earlier. It is one of the top algorithms for training neural networks and for optimizing smooth functions with many variables.

Population optimization algorithms: Artificial Bee Colony (ABC)

In this article, we will study the artificial bee colony algorithm and supplement our knowledge with new principles of studying functional spaces. I will also showcase my interpretation of the classic version of the algorithm.

Neural networks made easy (Part 32): Distributed Q-Learning

We got acquainted with the Q-learning method in one of the earlier articles in this series. This method averages rewards for each action. Two works presented in 2017 showed greater success when studying the reward distribution function instead. Let's consider the possibility of using such technology to solve our problems.

Population optimization algorithms: Ant Colony Optimization (ACO)

This time I will analyze the Ant Colony optimization algorithm. The algorithm is very interesting and complex. In the article, I make an attempt to create a new type of ACO.

Category Theory in MQL5 (Part 1)

Category Theory is a diverse and expanding branch of mathematics that has so far received relatively little coverage in the MQL community. This series of articles looks to introduce and examine some of its concepts, with the overall goal of establishing an open library that attracts comments and discussion while hopefully furthering the use of this remarkable field in traders' strategy development.

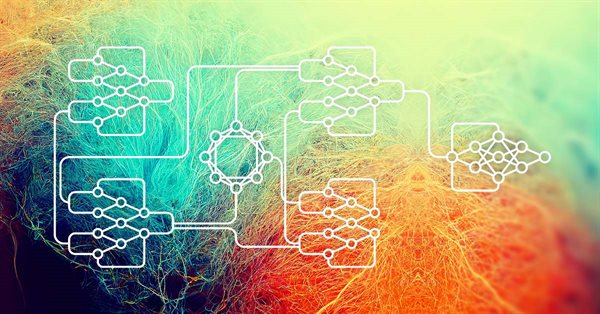

Neural networks made easy (Part 31): Evolutionary algorithms

In the previous article, we started exploring non-gradient optimization methods. We got acquainted with the genetic algorithm. Today, we will continue this topic and will consider another class of evolutionary algorithms.

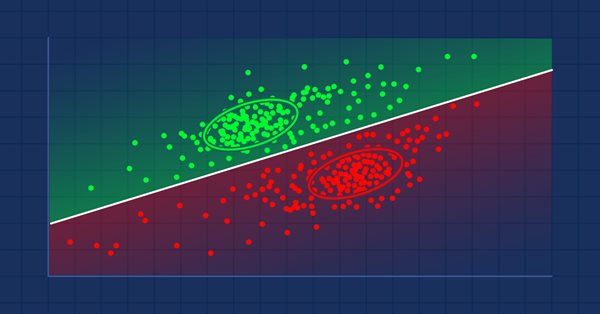

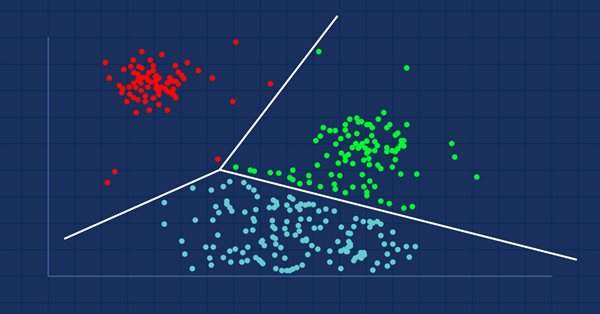

MQL5 Wizard techniques you should know (Part 04): Linear Discriminant Analysis

Today's trader is a philomath who is almost always looking up new ideas, trying them out, and choosing to modify or discard them; an exploratory process that should cost a fair amount of diligence. This series of articles proposes that the MQL5 Wizard should be a mainstay for traders in this effort.

Neural networks made easy (Part 30): Genetic algorithms

Today I want to introduce you to a slightly different learning method. We can say that it is borrowed from Darwin's theory of evolution. It is probably less controllable than the previously discussed methods, but it allows training non-differentiable models.

Neural networks made easy (Part 29): Advantage Actor-Critic algorithm

In the previous articles of this series, we have seen two reinforcement learning algorithms. Each of them has its own advantages and disadvantages. As often happens in such cases, next comes the idea of combining both methods into one algorithm that uses the best of the two. This would compensate for the shortcomings of each of them. One such method will be discussed in this article.

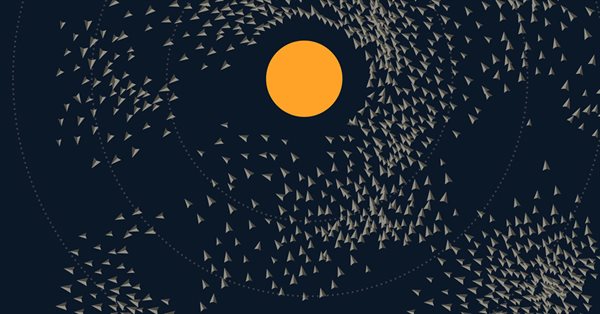

Population optimization algorithms: Particle swarm (PSO)

In this article, I will consider the popular Particle Swarm Optimization (PSO) algorithm. Previously, we discussed such important characteristics of optimization algorithms as convergence, convergence rate, stability, and scalability, developed a test stand, and considered the simplest RNG algorithm.

Neural networks made easy (Part 28): Policy gradient algorithm

We continue to study reinforcement learning methods. In the previous article, we got acquainted with the Deep Q-Learning method. In this method, the model is trained to predict the upcoming reward depending on the action taken in a particular situation. Then, an action is performed in accordance with the policy and the expected reward. But it is not always possible to approximate the Q-function, and sometimes its approximation does not generate the desired result. In such cases, approximation methods are applied not to utility functions but directly to the policy (strategy) of actions. One such method is Policy Gradient.

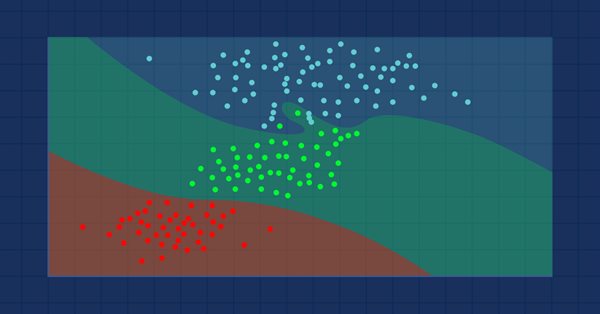

Data Science and Machine Learning (Part 09): The K-Nearest Neighbors Algorithm (KNN)

This is a lazy algorithm that doesn't learn from the training dataset. Instead, it stores the dataset and acts immediately when it's given a new sample. As simple as it is, it is used in a variety of real-world applications.

Neural networks made easy (Part 27): Deep Q-Learning (DQN)

We continue to study reinforcement learning. In this article, we will get acquainted with the Deep Q-Learning method. The use of this method has enabled the DeepMind team to create a model that can outperform a human when playing Atari computer games. I think it will be useful to evaluate the possibilities of the technology for solving trading problems.

Neural networks made easy (Part 26): Reinforcement Learning

We continue to study machine learning methods. With this article, we begin another big topic, Reinforcement Learning. This approach allows the models to set up certain strategies for solving the problems. We can expect that this property of reinforcement learning will open up new horizons for building trading strategies.

Neural networks made easy (Part 25): Practicing Transfer Learning

In the last two articles, we developed a tool for creating and editing neural network models. Now it is time to evaluate the potential use of Transfer Learning technology using practical examples.

Neural networks made easy (Part 24): Improving the tool for Transfer Learning

In the previous article, we created a tool for creating and editing the architecture of neural networks. Today we will continue working on this tool. We will try to make it more user-friendly. This may seem to be a step away from our topic. But don't you think that a well-organized workspace plays an important role in achieving the result?

Data Science and Machine Learning (Part 08): K-Means Clustering in plain MQL5

Data mining is crucial to a data scientist and a trader because very often the data isn't as straightforward as we think it is. The human eye cannot always pick out the minor underlying patterns and relationships in a dataset. Maybe the K-means algorithm can help us with that. Let's find out...

Neural networks made easy (Part 23): Building a tool for Transfer Learning

In this series of articles, we have already mentioned Transfer Learning more than once. However, we only mentioned it in passing. In this article, I suggest filling this gap and taking a closer look at Transfer Learning.

Neural networks made easy (Part 22): Unsupervised learning of recurrent models

We continue to study unsupervised learning algorithms. This time I suggest that we discuss the features of autoencoders when applied to recurrent model training.

Neural networks made easy (Part 21): Variational autoencoders (VAE)

In the last article, we got acquainted with the Autoencoder algorithm. Like any other algorithm, it has its advantages and disadvantages. In its original implementation, the autoencoder is used to separate the objects from the training sample as much as possible. This time we will talk about how to deal with some of its disadvantages.

Data Science and Machine Learning (Part 07): Polynomial Regression

Unlike linear regression, polynomial regression is a flexible model aimed at performing better on tasks the linear regression model could not handle. Let's find out how to make polynomial models in MQL5 and make something positive out of it.

Experiments with neural networks (Part 2): Smart neural network optimization

In this article, I will use experimentation and non-standard approaches to develop a profitable trading system and check whether neural networks can be of any help for traders. MetaTrader 5 is considered as a self-sufficient tool for using neural networks in trading.

Neural networks made easy (Part 20): Autoencoders

We continue to study unsupervised learning algorithms. Some readers might have questions regarding the relevance of recent publications to the topic of neural networks. In this new article, we get back to studying neural networks.

MQL5 Wizard techniques you should know (Part 03): Shannon's Entropy

Today's trader is a philomath who is almost always looking up new ideas, trying them out, and choosing to modify or discard them; an exploratory process that should cost a fair amount of diligence. This series of articles proposes that the MQL5 Wizard should be a mainstay for traders.

Neural networks made easy (Part 19): Association rules using MQL5

We continue considering association rules. In the previous article, we discussed the theoretical aspects of this type of problem. In this article, I will show the implementation of the FP Growth method using MQL5. We will also test the implemented solution using real data.

Neural networks made easy (Part 18): Association rules

As a continuation of this series of articles, let's consider another type of problem within unsupervised learning methods: mining association rules. This problem type was first used in retail, namely supermarkets, to analyze market baskets. In this article, we will talk about the applicability of such algorithms in trading.

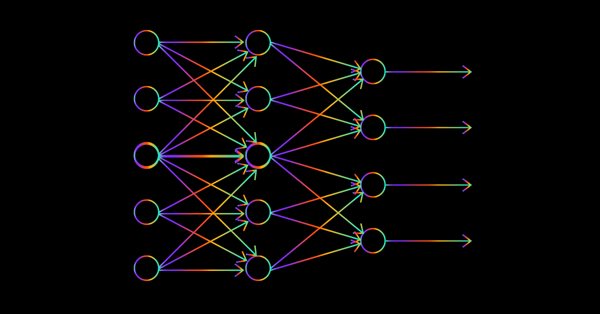

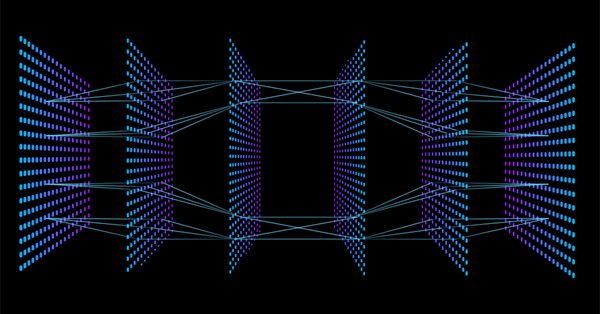

Data Science and Machine Learning — Neural Network (Part 02): Feed forward NN Architectures Design

There are minor things to cover on the feed-forward neural network before we are through, the design being one of them. Let's see how we can design and build a neural network that is flexible with respect to our inputs, the number of hidden layers, and the number of nodes in each layer.

Metamodels in machine learning and trading: Original timing of trading orders

Metamodels in machine learning: automatic creation of trading systems with little or no human intervention. The model decides when and how to trade on its own.

Data Science and Machine Learning — Neural Network (Part 01): Feed Forward Neural Network demystified

Many people love them, but few understand the whole operation behind neural networks. In this article, I will try to explain everything that goes on behind the closed doors of a feed-forward multi-layer perceptron in plain English.

Experiments with neural networks (Part 1): Revisiting geometry

In this article, I will use experimentation and non-standard approaches to develop a profitable trading system and check whether neural networks can be of any help for traders.

Neural networks made easy (Part 17): Dimensionality reduction

In this part we continue discussing Artificial Intelligence models. Namely, we study unsupervised learning algorithms. We have already discussed one of the clustering algorithms. In this article, I am sharing a variant of solving problems related to dimensionality reduction.

Data Science and Machine Learning (Part 06): Gradient Descent

Gradient descent plays a significant role in training neural networks and many machine learning algorithms. It is a quick and intelligent algorithm, yet despite its impressive work it is still misunderstood by a lot of data scientists. Let's see what it is all about.

Neural networks made easy (Part 16): Practical use of clustering

In the previous article, we created a class for data clustering. In this article, I want to share possible ways of applying the obtained results to practical trading tasks.

Neural networks made easy (Part 15): Data clustering using MQL5

We continue to consider the clustering method. In this article, we will create a new CKmeans class to implement one of the most common k-means clustering methods. During tests, the model managed to identify about 500 patterns.

Neural networks made easy (Part 14): Data clustering

It has been more than a year since I published my last article. This is quite a lot of time to revise ideas and to develop new approaches. In the new article, I would like to divert from the previously used supervised learning method. This time we will dip into unsupervised learning algorithms. In particular, we will consider one of the clustering algorithms: k-means.

Data Science and Machine Learning (Part 05): Decision Trees

Decision trees imitate the way humans think to classify data. Let's see how to build trees and use them to classify and predict some data. The main goal of the decision tree algorithm is to separate impure data into pure or close-to-pure nodes.

How to master Machine Learning

Check out this selection of useful materials which can assist traders in improving their algorithmic trading knowledge. The era of simple algorithms is passing, and it is becoming harder to succeed without the use of Machine Learning techniques and Neural Networks.

Data Science and Machine Learning (Part 04): Predicting Current Stock Market Crash

In this article, I am going to attempt to use our logistic model to predict a stock market crash based upon the fundamentals of the US economy. NETFLIX and APPLE are the stocks we are going to focus on. Using the previous market crashes of 2019 and 2020, let's see how our model will perform in the current doom and gloom.

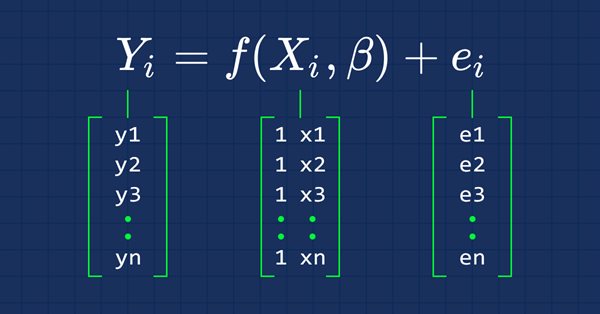

Data Science and Machine Learning (Part 03): Matrix Regressions

This time our models are built with matrices, which gives us flexibility and lets us make powerful models that can handle not only five independent variables but many more, as long as we stay within the calculation limits of a computer. This article is going to be an interesting read, that's for sure.