Other classes in DoEasy library (Part 68): Chart window object class and indicator object classes in the chart window

In this article, I will continue developing the chart object class by adding a list of chart window objects, each featuring a list of the indicators available in that window.

Neural networks made easy (Part 13): Batch Normalization

In the previous article, we started considering methods for improving the quality of neural network training. In this article, we will continue this topic and consider another approach: batch data normalization.
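
The core computation of batch normalization fits in a few lines. Below is a minimal sketch in standard C++ (chosen because its syntax is close to MQL5), not the article's implementation. It normalizes the outputs of a single neuron across one mini-batch to zero mean and unit variance, then applies a scale `gamma` and a shift `beta`, which a real layer would learn during training. The function name `batchNormalize` and the default `eps` value are illustrative assumptions.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical helper (not from the article): batch-normalizes one feature.
// y_i = gamma * (x_i - mean) / sqrt(var + eps) + beta
std::vector<double> batchNormalize(const std::vector<double>& batch,
                                   double gamma, double beta,
                                   double eps = 1e-8) {
    assert(!batch.empty());
    const double n = static_cast<double>(batch.size());

    // Mean of the mini-batch.
    double mean = 0.0;
    for (double x : batch) mean += x;
    mean /= n;

    // Biased (population) variance of the mini-batch.
    double var = 0.0;
    for (double x : batch) var += (x - mean) * (x - mean);
    var /= n;

    // Normalize, then scale and shift.
    const double invStd = 1.0 / std::sqrt(var + eps);
    std::vector<double> out;
    out.reserve(batch.size());
    for (double x : batch) out.push_back(gamma * (x - mean) * invStd + beta);
    return out;
}
```

At inference time, implementations typically replace the batch statistics with running averages collected during training. That part is omitted from this sketch.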

Other classes in DoEasy library (Part 67): Chart object class

In this article, I will create the chart object class (a chart of a single trading instrument) and improve the collection class of MQL5 signal objects so that each signal object stored in the collection updates all its parameters when the list is updated.

Other classes in DoEasy library (Part 66): MQL5.com Signals collection class

In this article, I will create the signal collection class of the MQL5.com Signals service with functions for managing signals. In addition, I will improve the Depth of Market snapshot object class so that it displays the total DOM buy and sell volumes.

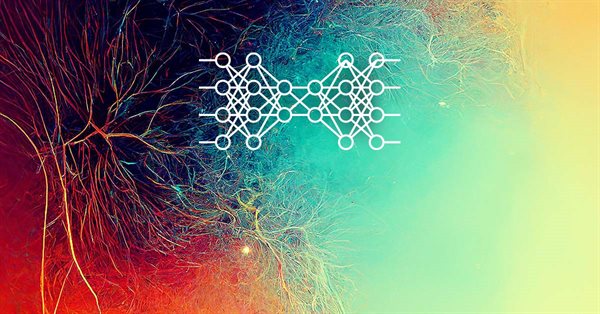

Neural networks made easy (Part 12): Dropout

As the next step in studying neural networks, I suggest considering methods for improving convergence during neural network training. There are several such methods. In this article, we will consider one of them, called Dropout.
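
The idea behind Dropout can be sketched as follows. This standard C++ example (syntax close to MQL5) shows "inverted" dropout, a common formulation in which each activation is zeroed with probability `p` during training and the survivors are scaled by 1/(1-p), so the layer can be used unchanged at inference. It does not claim to match the article's variant; the function `dropout` and its parameters are hypothetical.

```cpp
#include <cassert>
#include <random>
#include <vector>

// Hypothetical helper: inverted dropout, training mode only.
// Each activation is kept with probability (1 - p) and scaled by 1/(1 - p),
// so the expected value of every output equals its input.
std::vector<double> dropout(const std::vector<double>& activations,
                            double p, std::mt19937& rng) {
    assert(p >= 0.0 && p < 1.0);
    std::bernoulli_distribution keep(1.0 - p);
    const double scale = 1.0 / (1.0 - p);
    std::vector<double> out;
    out.reserve(activations.size());
    for (double a : activations) out.push_back(keep(rng) ? a * scale : 0.0);
    return out;
}
```

Because a new random mask is drawn on every call, each training pass effectively trains a different thinned sub-network, which is what discourages neurons from co-adapting.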

Prices in DoEasy library (Part 64): Depth of Market, classes of DOM snapshot and snapshot series objects

In this article, I will create two classes (the class of DOM snapshot object and the class of DOM snapshot series object) and test the creation of the DOM data series.

Self-adapting algorithm (Part IV): Additional functionality and tests

I continue adding the minimum necessary functionality to the algorithm and testing the results. The profitability is quite low, but the articles demonstrate a model of fully automated profitable trading on completely different instruments traded on fundamentally different markets.

Useful and exotic techniques for automated trading

In this article, I will demonstrate some very interesting and useful techniques for automated trading. Some of them may be familiar to you. I will try to cover the most interesting methods and explain why they are worth using. Furthermore, I will show what these techniques are capable of in practice. We will create Expert Advisors and test all the described techniques using historical quotes.

Neural networks made easy (Part 11): A take on GPT

Perhaps one of the most advanced models among currently existing language neural networks is GPT-3, whose largest variant contains 175 billion parameters. Of course, we are not going to create such a monster on our home PCs. However, we can look at which architectural solutions can be used in our work and how we can benefit from them.

Prices in DoEasy library (part 62): Updating tick series in real time, preparation for working with Depth of Market

In this article, I will implement real-time updating of tick data and prepare the symbol object class for working with Depth of Market (the DOM itself will be implemented in the next article).

Self-adapting algorithm (Part III): Abandoning optimization

It is impossible to get a truly stable algorithm if we use optimization based on historical data to select parameters. A stable algorithm should be aware of what parameters are needed when working on any trading instrument at any time. It should not forecast or guess; it should know for sure.

Practical application of neural networks in trading (Part 2). Computer vision

Computer vision makes it possible to train neural networks on the visual representation of the price chart and indicators. This method allows working with the whole range of technical indicators, since there is no need to feed them into the neural network in numerical form.

Neural networks made easy (Part 10): Multi-Head Attention

We have previously considered the self-attention mechanism in neural networks. In practice, modern neural network architectures use several parallel self-attention heads to find various dependencies between the elements of a sequence. Let us consider the implementation of such an approach and evaluate its impact on the overall network performance.

Developing a self-adapting algorithm (Part II): Improving efficiency

In this article, I will continue developing the topic by improving the flexibility of the previously created algorithm. The algorithm became more stable as the number of candles in the analysis window increased, or as the threshold percentage by which falling candles outweigh growing ones (or vice versa) increased. I had to make a compromise and set either a larger sample size for analysis or a larger percentage of prevailing candle excess.

Brute force approach to pattern search (Part III): New horizons

This article continues the brute force topic and introduces new market analysis capabilities into the program algorithm, thereby increasing the speed of analysis and improving the quality of results. The new additions provide the highest-quality view of global patterns achievable within this approach.

Developing a self-adapting algorithm (Part I): Finding a basic pattern

In the upcoming series of articles, I will demonstrate the development of self-adapting algorithms that take most market factors into account, and show how to systematize these situations, describe them in logic and take them into account in your trading activity. I will start with a very simple algorithm that will gradually acquire theory and evolve into a very complex project.

Neural networks made easy (Part 8): Attention mechanisms

In previous articles, we have already tested various options for organizing neural networks. We also considered convolutional networks borrowed from image processing algorithms. In this article, I suggest considering Attention Mechanisms, whose emergence gave impetus to the development of language models.
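
Attention mechanisms, including the self-attention used in GPT-style models and its multi-head variant, are built on scaled dot-product attention, softmax(QK^T/sqrt(d))V. The following standard C++ sketch (syntax close to MQL5) shows a single head only. It assumes the query, key and value matrices have already been computed and omits the learned projection weights; multi-head attention would run several such heads on different projections and concatenate their outputs. The `Matrix` alias and the `attention` function are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

// Hypothetical helper: scaled dot-product attention for one head.
// Q: n x d, K: m x d, V: m x dv. Returns n x dv.
Matrix attention(const Matrix& Q, const Matrix& K, const Matrix& V) {
    assert(!Q.empty() && !K.empty() && K.size() == V.size());
    const std::size_t d = Q[0].size();
    const double scale = 1.0 / std::sqrt(static_cast<double>(d));
    Matrix out(Q.size(), std::vector<double>(V[0].size(), 0.0));
    for (std::size_t i = 0; i < Q.size(); ++i) {
        // Similarity of query i to every key, scaled by 1/sqrt(d).
        std::vector<double> score(K.size());
        for (std::size_t j = 0; j < K.size(); ++j) {
            double dot = 0.0;
            for (std::size_t k = 0; k < d; ++k) dot += Q[i][k] * K[j][k];
            score[j] = dot * scale;
        }
        // Numerically stable softmax turns scores into weights summing to 1.
        const double mx = *std::max_element(score.begin(), score.end());
        double sum = 0.0;
        for (double& s : score) { s = std::exp(s - mx); sum += s; }
        // Output row i is the weighted average of the value rows.
        for (std::size_t j = 0; j < K.size(); ++j) {
            const double weight = score[j] / sum;
            for (std::size_t c = 0; c < V[j].size(); ++c)
                out[i][c] += weight * V[j][c];
        }
    }
    return out;
}
```

For self-attention, Q, K and V are all derived from the same sequence, so each element's output mixes information from every other element in proportion to their similarity.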

Prices in DoEasy library (part 59): Object to store data of one tick

Starting with this article, I will create library functionality for working with price data. Today, I will create an object class that stores all price data that arrived with a new tick.

Timeseries in DoEasy library (part 58): Timeseries of indicator buffer data

To conclude the topic of working with timeseries, I will organize storage, search and sorting of data stored in indicator buffers. This will make it possible to analyze the values of indicators created in programs based on the library. The general concept of all library collection classes makes it easy to find the necessary data in the corresponding collection. Accordingly, the same will be possible in the class created today.

Brute force approach to pattern search (Part II): Immersion

In this article, we will continue discussing the brute force approach. I will try to provide a better explanation of the pattern using the new, improved version of my application. I will also try to compare pattern stability across different time intervals and timeframes.

Neural networks made easy (Part 7): Adaptive optimization methods

In previous articles, we used stochastic gradient descent to train a neural network using the same learning rate for all neurons within the network. In this article, I propose looking at adaptive learning methods, which allow the learning rate to change for each neuron individually. We will also consider the pros and cons of this approach.
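
To make "a learning rate for each parameter" concrete, here is the Adam update rule, used purely as one well-known example of an adaptive method; which methods the article itself implements is not assumed. The sketch is standard C++ (syntax close to MQL5) and updates a single weight. The `AdamState` struct, the `adamStep` function and the default hyperparameters (the values commonly quoted for Adam) are illustrative assumptions.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical per-parameter state for the Adam rule.
struct AdamState {
    double m = 0.0;  // running mean of the gradient (first moment)
    double v = 0.0;  // running mean of the squared gradient (second moment)
    int t = 0;       // number of updates performed so far
};

// Hypothetical helper: one Adam step for weight w with gradient grad.
// The effective step size adapts per parameter through m and v.
double adamStep(double w, double grad, AdamState& s,
                double lr = 0.001, double beta1 = 0.9,
                double beta2 = 0.999, double eps = 1e-8) {
    assert(lr > 0.0);
    s.t += 1;
    s.m = beta1 * s.m + (1.0 - beta1) * grad;
    s.v = beta2 * s.v + (1.0 - beta2) * grad * grad;
    // Bias correction compensates for m and v being initialized at zero.
    const double mHat = s.m / (1.0 - std::pow(beta1, s.t));
    const double vHat = s.v / (1.0 - std::pow(beta2, s.t));
    return w - lr * mHat / (std::sqrt(vHat) + eps);
}
```

On the first step, mHat/sqrt(vHat) is about the sign of the gradient, so the update is roughly `lr` regardless of the gradient's magnitude. This per-parameter normalization is what distinguishes adaptive methods from plain stochastic gradient descent.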

Neural networks made easy (Part 6): Experimenting with the neural network learning rate

We have previously considered various types of neural networks along with their implementations. In all cases, the neural networks were trained using the gradient descent method, which requires choosing a learning rate. In this article, I will use examples to show the importance of a correctly selected rate and its impact on neural network training.
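
A toy one-dimensional problem already shows why the choice of learning rate matters. For f(w) = w^2 the gradient is 2w, so each gradient descent step computes w ← w − η·2w = (1 − 2η)w. The iteration converges only when 0 < η < 1, crawls when η is tiny, and diverges when η > 1. The standard C++ sketch below (syntax close to MQL5) uses a hypothetical `gradientDescent` helper and is not taken from the article, which experiments on real networks.

```cpp
#include <cassert>

// Hypothetical helper: plain gradient descent on f(w) = w^2.
// Each step multiplies w by (1 - 2 * lr).
double gradientDescent(double w0, double lr, int steps) {
    assert(steps >= 0);
    double w = w0;
    for (int i = 0; i < steps; ++i) {
        const double grad = 2.0 * w;  // derivative of w^2
        w -= lr * grad;
    }
    return w;
}
```

Starting from w = 1 with 100 steps, η = 0.1 gives 0.8^100 (practically zero), η = 0.001 gives 0.998^100 ≈ 0.82 (barely moved), and η = 1.1 gives (−1.2)^100, which explodes.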

Timeseries in DoEasy library (part 57): Indicator buffer data object

In this article, I will develop an object that contains all data of one buffer of one indicator. Such objects will be necessary for storing serial data of indicator buffers. With their help, it will be possible to sort and compare the buffer data of any indicators, as well as other similar data.

Timeseries in DoEasy library (part 56): Custom indicator object, get data from indicator objects in the collection

The article considers creating a custom indicator object for use in EAs. I will also slightly improve the library classes and add methods for getting data from indicator objects in EAs.

Neural networks made easy (Part 5): Multithreaded calculations in OpenCL

We have previously discussed some types of neural network implementations. In these networks, the same operations are repeated for each neuron. A logical next step is to use the multithreaded computing capabilities of modern hardware to speed up the neural network learning process. One possible implementation is described in this article.

Neural networks made easy (Part 4): Recurrent networks

We continue studying the world of neural networks. In this article, we will consider another type of neural network: recurrent networks. This type is intended for use with time series, which in the MetaTrader 5 trading platform are represented by price charts.

Brute force approach to pattern search

In this article, we will search for market patterns, create Expert Advisors based on the identified patterns, and check how long these patterns remain valid, if they ever retain their validity.

Parallel Particle Swarm Optimization

The article describes a method of fast optimization using the particle swarm algorithm. It also presents the method implementation in MQL, which is ready for use both in single-threaded mode inside an Expert Advisor and in a parallel multi-threaded mode as an add-on that runs on local tester agents.
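
The core of particle swarm optimization is a simple velocity update. Each particle is pulled toward the best position it has found so far and toward the best position found by the whole swarm. The standard C++ sketch below (syntax close to MQL5) is a single-threaded global-best variant for minimization and does not cover the article's parallel execution on tester agents. The `pso` function, the `PsoResult` struct and the coefficient values (0.729 and 1.49445, commonly used constriction-style settings) are illustrative assumptions, not the article's code.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical result type: best position found and its objective value.
struct PsoResult {
    std::vector<double> position;
    double value;
};

// Hypothetical helper: global-best PSO minimizing f over [lo, hi]^dim.
PsoResult pso(double (*f)(const std::vector<double>&), std::size_t dim,
              double lo, double hi, std::size_t particles,
              int iterations, unsigned seed) {
    assert(dim > 0 && particles > 0 && lo < hi);
    const double w = 0.729, c1 = 1.49445, c2 = 1.49445;  // inertia, pulls
    std::mt19937 rng(seed);
    std::uniform_real_distribution<double> pos(lo, hi), unit(0.0, 1.0);

    // Random initial positions, zero initial velocities.
    std::vector<std::vector<double>> x(particles, std::vector<double>(dim));
    std::vector<std::vector<double>> v(particles, std::vector<double>(dim, 0.0));
    for (auto& p : x) for (double& xi : p) xi = pos(rng);

    std::vector<std::vector<double>> best = x;  // personal best positions
    std::vector<double> bestVal(particles);     // personal best values
    PsoResult g{x[0], f(x[0])};                 // global best
    for (std::size_t i = 0; i < particles; ++i) {
        bestVal[i] = f(x[i]);
        if (bestVal[i] < g.value) g = {x[i], bestVal[i]};
    }
    for (int it = 0; it < iterations; ++it) {
        for (std::size_t i = 0; i < particles; ++i) {
            for (std::size_t d = 0; d < dim; ++d) {
                // Inertia plus random pulls toward personal and global bests.
                v[i][d] = w * v[i][d]
                        + c1 * unit(rng) * (best[i][d] - x[i][d])
                        + c2 * unit(rng) * (g.position[d] - x[i][d]);
                x[i][d] += v[i][d];
            }
            const double val = f(x[i]);
            if (val < bestVal[i]) { bestVal[i] = val; best[i] = x[i]; }
            if (val < g.value) g = {x[i], val};
        }
    }
    return g;
}
```

In an optimization setting, each call to `f` would correspond to one tester pass with a given parameter vector. Those evaluations are independent within an iteration, which is what makes the method easy to parallelize.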

Neural networks made easy (Part 3): Convolutional networks

As a continuation of the neural network topic, I propose considering convolutional neural networks. This type of neural network is usually applied to analyzing visual imagery. In this article, we will consider the application of these networks in the financial markets.
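
Applied to a price series, a convolutional layer slides a small kernel (filter) along the data and computes a dot product at each position. The standard C++ sketch below (syntax close to MQL5) shows a "valid" one-dimensional convolution with a stride. As in most neural-network libraries, the kernel is not flipped, so this is technically cross-correlation. In a trained network the kernel weights are learned; the `conv1d` name and the hand-picked kernels here are illustrative assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: "valid" 1D convolution (no padding, no kernel flip).
// Each output is the dot product of the kernel with the window under it.
std::vector<double> conv1d(const std::vector<double>& series,
                           const std::vector<double>& kernel,
                           std::size_t stride = 1) {
    assert(!kernel.empty() && stride > 0);
    std::vector<double> out;
    if (series.size() < kernel.size()) return out;
    for (std::size_t start = 0; start + kernel.size() <= series.size();
         start += stride) {
        double sum = 0.0;
        for (std::size_t k = 0; k < kernel.size(); ++k)
            sum += series[start + k] * kernel[k];
        out.push_back(sum);
    }
    return out;
}
```

For example, the kernel {-1, 1} turns the closes {1, 2, 4, 7, 11} into their bar-to-bar changes {1, 2, 3, 4}. A layer with many learned kernels can detect many such local patterns at once.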

Advanced resampling and selection of CatBoost models by brute-force method

This article describes one of the possible approaches to data transformation aimed at improving the generalizability of the model, and also discusses sampling and selection of CatBoost models.

A scientific approach to the development of trading algorithms

The article considers a methodology for developing trading algorithms in which a consistent scientific approach is used to analyze possible price patterns and to build trading algorithms based on these patterns. The development ideas are demonstrated using examples.

CatBoost machine learning algorithm from Yandex with no Python or R knowledge required

The article provides the code and the description of the main stages of the machine learning process using a specific example. To obtain the model, you do not need Python or R knowledge. Furthermore, basic MQL5 knowledge is enough — this is exactly my level. Therefore, I hope that the article will serve as a good tutorial for a broad audience, assisting those interested in evaluating machine learning capabilities and in implementing them in their programs.

Custom symbols: Practical basics

The article is devoted to the programmatic generation of custom symbols which are used to demonstrate some popular methods for displaying quotes. It describes a suggested variant of minimally invasive adaptation of Expert Advisors for trading a real symbol from a derived custom symbol chart. MQL source codes are attached to this article.

Quick Manual Trading Toolkit: Working with open positions and pending orders

In this article, we will expand the capabilities of the toolkit: we will add the ability to close trade positions under specific conditions and will create tables for controlling market and pending orders, with the ability to edit these orders.

Calculating mathematical expressions (Part 2). Pratt and shunting yard parsers

In this article, we consider the principles of parsing and evaluating mathematical expressions using parsers based on operator precedence. We will implement Pratt and shunting-yard parsers, bytecode generation and calculations using this bytecode. We will also see how to use indicators as functions in expressions and how to set up trading signals in Expert Advisors based on these indicators.
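
As a standalone illustration of operator-precedence parsing, here is a minimal sketch of Dijkstra's shunting-yard algorithm in standard C++ (syntax close to MQL5). It converts an infix expression into Reverse Polish Notation (RPN) and then evaluates the RPN with a value stack. The sketch deliberately supports only non-negative numbers, the binary operators `+ - * / ^` and parentheses. It has no unary minus, no function calls and no bytecode, so it is far simpler than the article's parsers. The names `precedence`, `toRpn` and `evalRpn` are hypothetical.

```cpp
#include <cassert>
#include <cctype>
#include <cmath>
#include <cstddef>
#include <stack>
#include <stdexcept>
#include <string>
#include <vector>

// Operator precedence; '^' is the only right-associative operator here.
static int precedence(char op) {
    switch (op) {
        case '+': case '-': return 1;
        case '*': case '/': return 2;
        case '^': return 3;
        default: return 0;
    }
}

// Shunting-yard: infix expression -> RPN tokens.
std::vector<std::string> toRpn(const std::string& expr) {
    std::vector<std::string> output;
    std::stack<char> ops;
    for (std::size_t i = 0; i < expr.size(); ++i) {
        const char c = expr[i];
        if (std::isspace(static_cast<unsigned char>(c))) continue;
        if (std::isdigit(static_cast<unsigned char>(c)) || c == '.') {
            // Numbers go straight to the output.
            std::size_t j = i;
            while (j < expr.size() &&
                   (std::isdigit(static_cast<unsigned char>(expr[j])) || expr[j] == '.'))
                ++j;
            output.push_back(expr.substr(i, j - i));
            i = j - 1;
        } else if (c == '(') {
            ops.push(c);
        } else if (c == ')') {
            while (!ops.empty() && ops.top() != '(') {
                output.emplace_back(1, ops.top());
                ops.pop();
            }
            if (ops.empty()) throw std::runtime_error("mismatched ')'");
            ops.pop();  // discard '('
        } else if (precedence(c) > 0) {
            // Pop operators that bind tighter, or equally if left-associative.
            while (!ops.empty() && ops.top() != '(' &&
                   (precedence(ops.top()) > precedence(c) ||
                    (precedence(ops.top()) == precedence(c) && c != '^'))) {
                output.emplace_back(1, ops.top());
                ops.pop();
            }
            ops.push(c);
        } else {
            throw std::runtime_error("unexpected character");
        }
    }
    while (!ops.empty()) {
        if (ops.top() == '(') throw std::runtime_error("mismatched '('");
        output.emplace_back(1, ops.top());
        ops.pop();
    }
    return output;
}

// Evaluates RPN tokens using a value stack.
double evalRpn(const std::vector<std::string>& rpn) {
    std::stack<double> st;
    for (const std::string& tok : rpn) {
        if (tok.size() == 1 && precedence(tok[0]) > 0) {
            if (st.size() < 2) throw std::runtime_error("missing operand");
            const double b = st.top(); st.pop();
            const double a = st.top(); st.pop();
            switch (tok[0]) {
                case '+': st.push(a + b); break;
                case '-': st.push(a - b); break;
                case '*': st.push(a * b); break;
                case '/': st.push(a / b); break;
                case '^': st.push(std::pow(a, b)); break;
            }
        } else {
            st.push(std::stod(tok));
        }
    }
    if (st.size() != 1) throw std::runtime_error("malformed expression");
    return st.top();
}
```

For example, `toRpn("3 + 4 * 2")` yields the tokens `3 4 2 * +`, which evaluate to 11. The RPN token list plays a role similar to a very simple bytecode: it can be produced once and evaluated many times.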

Quick Manual Trading Toolkit: Basic Functionality

Today, many traders switch to automated trading systems, which may require additional setup or may be fully automated and ready to use. However, a considerable share of traders prefer trading manually, in the old-fashioned way. In this article, we will create a toolkit for quick manual trading that uses hotkeys and performs typical trading actions in one click.

Developing a cross-platform grid EA: testing a multi-currency EA

Markets dropped by more than 30% within one month. This seems to be the best time for testing grid- and martingale-based Expert Advisors. This article is an unplanned continuation of the series "Creating a Cross-Platform Grid EA". The current market provides an opportunity to arrange a stress test for the grid EA. So, let's use this opportunity and test our Expert Advisor.

Applying OLAP in trading (part 4): Quantitative and visual analysis of tester reports

The article offers basic tools for the OLAP analysis of tester reports relating to single passes and optimization results. The tool can work with standard format files (tst and opt), and it also provides a graphical interface. MQL source codes are attached below.

Forecasting Time Series (Part 2): Least-Square Support-Vector Machine (LS-SVM)

This article deals with the theory and practical application of an algorithm for forecasting time series based on the support-vector method. It also proposes its implementation in MQL and provides test indicators and Expert Advisors. This technology has not yet been implemented in MQL. But first, we need to get acquainted with the underlying math.

Forecasting Time Series (Part 1): Empirical Mode Decomposition (EMD) Method

This article deals with the theory and practical use of an algorithm for forecasting time series based on empirical mode decomposition. It proposes an MQL implementation of this method and presents test indicators and Expert Advisors.