Discussing the article: "Neural networks made easy (Part 38): Self-Supervised Exploration via Disagreement"

Hello. Trying to run another of your creations is turning into a game of chance. I am not an expert in programming, but I can at least appreciate the depth and breadth of the topic. I ran it in the strategy tester with Batch optimisation from 100 to 110. The indicator in the tray is running, but nothing else has happened for 12 hours. What am I doing wrong?

I have seen the same issue. I'm going to compare the files in this article with the ones from the previous articles.

When I try to remove the indicator from MT5, or when I'm debugging in MetaEditor, MetaTrader 5 crashes right away.

It doesn't run, even after many hours.

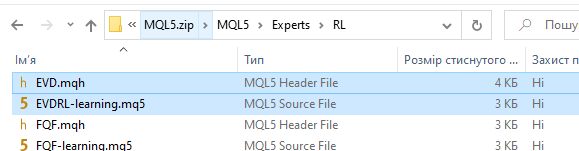

Your link doesn't contain the EA from this article! There is only the old one from the previous article. At first I thought you had simply forgotten to change the name when copying, but when I compared them, I found that the code matched completely! Help!

The archive at the bottom of the article contains a complete set of files. It includes the Expert Advisors from previous articles, but it also includes the Expert Advisor described in this article.

Sorry, one more amateur question. The Expert Advisor has not made a single trade in the tester. It is just hanging on the chart with no signs of activity. Why?

And one more thing. Are indicator data used only as an additional filter when making a trade?

Thank you very much for this article!

I see that you also provide a zip file with many RL experiments inside. Is there a specific mq5 file that I can compile, run and evaluate in more detail?

Thank you very much!

Check out the new article: Neural networks made easy (Part 38): Self-Supervised Exploration via Disagreement.

One of the key problems in reinforcement learning is environment exploration. We have already seen an exploration method based on Intrinsic Curiosity. Today I propose looking at another algorithm: Exploration via Disagreement.

Disagreement-based Exploration is a reinforcement learning method that allows an agent to explore the environment without relying on external rewards. Instead, the agent seeks out new, unexplored areas using an ensemble of models.

In the article "Self-Supervised Exploration via Disagreement", the authors describe this approach and propose a simple method: train an ensemble of forward dynamics models and encourage the agent to take actions for which the predictions of the models in the ensemble show maximum inconsistency, or variance.
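
The core of the reward computation fits in a few lines. The sketch below is a hypothetical Python illustration, not the article's MQL5 implementation: each ensemble member is a toy linear forward model (the class `LinearForwardModel` and its weights are assumptions standing in for neural networks), and the intrinsic reward is the variance of the members' next-state predictions, summed over the state dimensions.

```python
import random
from statistics import pvariance
from typing import List


class LinearForwardModel:
    """Hypothetical toy forward model: next_state[i] = w[i] * state[i] + u[i] * action."""

    def __init__(self, dim: int, rng: random.Random):
        # every ensemble member gets its own random initialization
        self.w = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
        self.u = [rng.uniform(-1.0, 1.0) for _ in range(dim)]

    def predict(self, state: List[float], action: float) -> List[float]:
        return [w * s + u * action for w, s, u in zip(self.w, state, self.u)]


def disagreement(ensemble, state: List[float], action: float) -> float:
    """Intrinsic reward: spread of the ensemble's next-state predictions.

    Per-dimension population variance across members, summed over dimensions,
    equals the mean squared distance of each prediction from the ensemble mean.
    """
    predictions = [model.predict(state, action) for model in ensemble]
    return sum(pvariance(column) for column in zip(*predictions))


rng = random.Random(0)
ensemble = [LinearForwardModel(dim=3, rng=rng) for _ in range(5)]
print(disagreement(ensemble, state=[0.1, 0.2, 0.3], action=1.0))
```

Note that no external reward appears anywhere: the signal comes only from how much the models contradict each other.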

Thus, rather than choosing actions that produce the greatest expected reward, the agent chooses actions that maximize disagreement between the models in the ensemble. This directs the agent toward regions of the state space where the models disagree, which are likely to be new and insufficiently explored parts of the environment.
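
To show what choosing the action with maximum disagreement means in the simplest case, the sketch below scores a hypothetical set of discrete candidate actions with a hand-made three-model ensemble and picks the most contested one. This greedy one-step choice is a simplification for illustration; in the paper, the disagreement serves as an intrinsic reward for training the policy.

```python
from statistics import pvariance
from typing import Callable, List, Sequence

# (state, action) -> predicted next state; a hypothetical scalar forward model
Model = Callable[[float, float], float]


def disagreement(ensemble: Sequence[Model], state: float, action: float) -> float:
    """Variance of the ensemble's predictions for one (state, action) pair."""
    return pvariance([model(state, action) for model in ensemble])


def choose_exploratory_action(ensemble: Sequence[Model], state: float,
                              candidate_actions: Sequence[float]) -> float:
    """Greedy one-step choice: the action the ensemble disagrees on most."""
    return max(candidate_actions, key=lambda a: disagreement(ensemble, state, a))


# Hypothetical ensemble: all members agree when action == 0 and diverge as |action| grows.
ensemble: List[Model] = [lambda s, a, k=k: s + k * a for k in (0.8, 1.0, 1.2)]
print(choose_exploratory_action(ensemble, 0.0, [-1.0, 0.0, 0.5, 2.0]))  # prints 2.0
```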

As the agent collects data in these regions and the models are trained on it, their predictions converge to the mean of the observed outcomes. This reduces the spread of the ensemble and gives the agent more accurate predictions about the states of the environment and the possible consequences of its actions.
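
The convergence effect can be reproduced with a toy simulation. The hypothetical setup below assumes a single (state, action) pair whose outcome is pure noise around a fixed mean (`TRUE_MEAN` and `NOISE_STD` are made-up values) and an ensemble of constant predictors, each starting from its own random guess and trained with SGD on the same noisy observations.

```python
import random
from statistics import mean, pvariance

TRUE_MEAN, NOISE_STD = 0.5, 1.0  # hypothetical stochastic outcome of one (state, action) pair


def train_ensemble(n_models: int = 5, steps: int = 500, lr: float = 0.05, seed: int = 0):
    rng = random.Random(seed)
    # each member starts from its own random prediction
    preds = [rng.uniform(-5.0, 5.0) for _ in range(n_models)]
    history = [pvariance(preds)]  # disagreement before training
    for _ in range(steps):
        # one noisy observation of the outcome, shared by the whole ensemble
        target = rng.gauss(TRUE_MEAN, NOISE_STD)
        # SGD step on the squared error 0.5 * (target - p)^2 for each member
        preds = [p + lr * (target - p) for p in preds]
        history.append(pvariance(preds))
    return preds, history


preds, history = train_ensemble()
print(f"disagreement before: {history[0]:.4f}, after: {history[-1]:.2e}")
print(f"mean prediction: {mean(preds):.3f}")
```

The members end up near the mean and almost identical to each other, so the disagreement collapses. By contrast, the squared error of any prediction on a fresh noisy sample stays around `NOISE_STD ** 2`, which is why a reward based on prediction error would keep attracting the agent to such an unpredictable spot.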

The algorithm of exploration via disagreement also allows the agent to cope with the stochasticity of its interaction with the environment: where an outcome is inherently random, the trained models all predict the same mean, so their disagreement disappears even though the outcome itself remains unpredictable. Experiments conducted by the authors of the article showed that the proposed approach improves exploration in stochastic environments and outperforms existing methods of intrinsic motivation and uncertainty modeling. They also observed that their approach can be extended to supervised learning, where the value of a sample is determined not by its ground truth label but by the state of the ensemble of models.
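
For the supervised case, one possible reading (an assumption in the spirit of query-by-committee active learning, not the exact procedure from the paper) is to rank unlabeled samples by how much an ensemble disagrees on them and pick the most contested ones, without looking at their labels. The regressors, the pool and the helper `select_informative` below are hypothetical.

```python
from statistics import pvariance
from typing import Callable, List, Sequence

Regressor = Callable[[float], float]  # hypothetical 1-D model: x -> prediction


def select_informative(ensemble: Sequence[Regressor], pool: Sequence[float], k: int) -> List[float]:
    """Return the k inputs with the largest ensemble disagreement; labels are never used."""
    def spread(x: float) -> float:
        return pvariance([model(x) for model in ensemble])
    return sorted(pool, key=spread, reverse=True)[:k]


# Hypothetical ensemble that agrees near x = 0 and diverges further away from it.
ensemble: List[Regressor] = [lambda x, c=c: c * x * x for c in (0.9, 1.0, 1.1)]
pool = [-3.0, -0.5, 0.0, 1.0, 2.0]
print(select_informative(ensemble, pool, k=2))  # prints [-3.0, 2.0]
```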

Thus, exploration via disagreement is a promising approach to solving the exploration problem in stochastic environments. It allows the agent to explore the environment more efficiently without relying on external rewards, which can be especially useful in real-world applications where such rewards are scarce or costly to obtain.

Author: Dmitriy Gizlyk