Integrate Your Own LLM into EA (Part 1): Hardware and Environment Deployment

Introduction

This article is mainly aimed at readers who want an economical, practical way to deploy LLMs in a local environment and fine-tune them as needed. We will detail the required hardware and software environment configuration and offer some practical suggestions and tips.

We hope that after reading this article you will have a clear understanding of the hardware required to deploy LLMs and be able to build a basic deployment environment by following its guidance.

Table of contents:

- Introduction

- What Are LLMs

- Hardware Configuration

- Software Environment Configuration

- Cloud computing platform

- Conclusion

What Are LLMs

1. About LLMs

LLMs (large language models) are foundation models that use deep learning for natural language processing (NLP) and natural language generation (NLG) tasks. To learn the complexity and connections of language, they are pre-trained on large amounts of data. An LLM is essentially a neural network based on the Transformer architecture (don't ask me what a Transformer is; haven't you heard of "Attention Is All You Need"?). By LLMs, we mainly mean open-source models such as Llama 2. OpenAI's closed-source ChatGPT is powerful, but it can't take advantage of our personalized data. Of course, closed-source models can also be integrated with algorithmic trading, but that is not what this series of articles is about.

2. Why and How to Apply LLMs to Algorithmic Trading

Why did we choose LLMs? The reason is simple: they have strong logical understanding and reasoning skills. So how can we use this capability in algorithmic trading? As a simple example, what would you do if you wanted to build an algorithmic trading strategy based on the Fibonacci sequence? Implementing it with traditional programming methods is painful. But with LLMs, we only need to build a data set according to Fibonacci theory, fine-tune the LLM on that data set, and then call the results it generates directly from our trading program. Everything just got easier.
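As a rough illustration of what building a data set "according to Fibonacci theory" could look like, the sketch below turns a price swing into Fibonacci retracement levels and stores them as prompt/response pairs in JSON Lines. Reading "Fibonacci theory" as retracement levels is an assumption, and so are the record layout (`prompt`/`response`), the helper names, and the sample prices; a real data set would have to follow the format expected by whatever fine-tuning tool is used.

```python
import json

# Commonly used retracement ratios; 0.5 is conventional rather than
# a true Fibonacci ratio.
FIB_RATIOS = (0.236, 0.382, 0.5, 0.618)


def retracement_levels(swing_high, swing_low):
    """Return {ratio: price} retracement levels for an upward swing."""
    span = swing_high - swing_low
    return {r: round(swing_high - span * r, 5) for r in FIB_RATIOS}


def make_record(symbol, swing_high, swing_low):
    """Build one hypothetical prompt/response training record."""
    levels = retracement_levels(swing_high, swing_low)
    prompt = (f"{symbol} rallied from {swing_low} to {swing_high}. "
              "List the Fibonacci retracement levels.")
    response = ", ".join(f"{r:.1%}: {p}" for r, p in levels.items())
    return {"prompt": prompt, "response": response}


def write_jsonl(records, path):
    """Write the records to a file, one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for rec in records:
            f.write(json.dumps(rec) + "\n")


if __name__ == "__main__":
    # Sample prices are made up for illustration only.
    swings = [("EURUSD", 1.1000, 1.0800), ("GBPUSD", 1.2800, 1.2500)]
    write_jsonl([make_record(*s) for s in swings], "fib_dataset.jsonl")
```

A useful data set would of course need many more examples, and more varied ones, than this toy generator produces.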

3. Why Deploy LLMs Locally

1). Local deployment can provide higher data security. For applications involving sensitive information, such as those in the medical and financial fields, data security is crucial. By deploying LLMs in a local environment, we can ensure that data does not leave our control.

2). Local deployment can provide higher performance. By choosing the appropriate hardware configuration, we can optimize the running efficiency of the model according to our needs. In addition, the network latency in the local environment is usually lower than that in the cloud environment, which is very important for some applications that require real-time response.

3). By deploying LLMs in a local environment and fine-tuning them according to our personalized data, we can get a model that better meets our needs. This is because each application scenario has its uniqueness, and through fine-tuning, we can make the model better adapt to our application scenario.

In summary, local deployment of LLMs can not only provide higher data security and performance but also give us a model that better meets our needs. Therefore, understanding how to deploy LLMs in a local environment and fine-tune them on our personalized data is very important for integrating them into algorithmic trading. In the following sections, we will detail how to choose a hardware configuration and build the software environment.

Hardware Configuration

When deploying LLMs locally, hardware configuration is a very important part. Here we mainly discuss mainstream PCs, and do not discuss MacOS and other niche products.

The products used to deploy LLMs mainly involve CPU, GPU, memory, and storage devices. Among them, the CPU and GPU are the main computing devices for running models, and memory and storage devices are used to store models and data.

The correct hardware configuration can not only ensure the running efficiency of the model but also affect the performance of the model to a certain extent. Therefore, we need to choose the appropriate hardware configuration according to our needs and budget.

1. Processor

Thanks to frameworks like llama.cpp, language models can now be run for inference, and in some cases trained, directly on CPUs. So if you don't have a discrete graphics card, don't worry: the CPU is also an option.

At present, the main processor brands on the market are Intel and AMD. Intel's Xeon series and AMD's EPYC series are currently the most popular server-class processors. These processors offer high clock frequencies, many cores, and large caches, which make them well suited to running large language models (LLMs).

When choosing a processor, we need to consider its number of cores, frequency, and cache size. Generally speaking, processors with more cores, higher frequency, and larger cache perform better, but they are also more expensive. Therefore, we need to choose according to our own budget and needs.

For local deployment of LLMs, we recommend the following hardware configuration:

CPU: At least 4 cores with a frequency of at least 2.5GHz

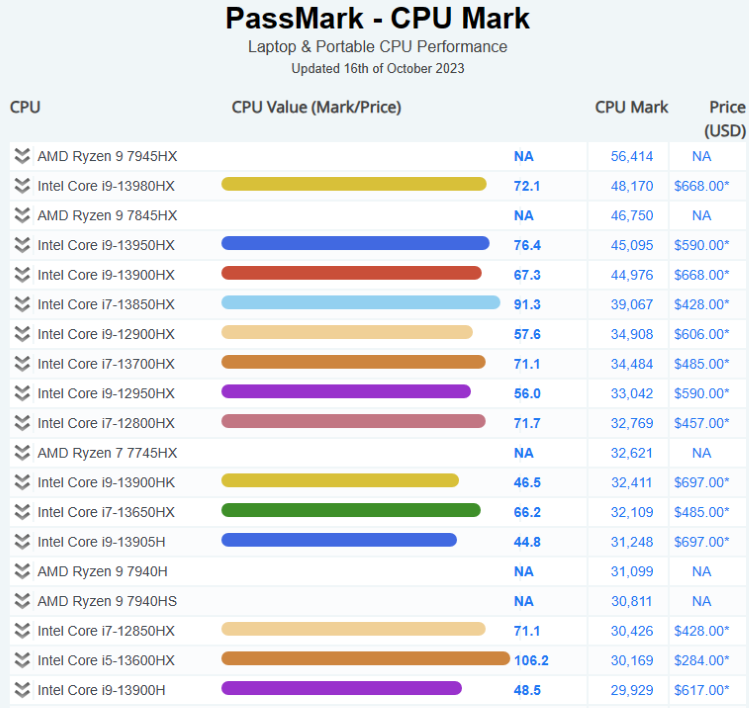

The following is the current CPU market situation for reference:

You can find more information in here: Pass Mark CPU Benchmarks

2. Graphics Card

For running large language models (LLMs), graphics cards are very important. The computing power and memory size of the graphics card directly affect the training and inference speed of the model. Generally speaking, graphics cards with stronger computing power and larger memory perform better, but they are also more expensive.

NVIDIA's Tesla and Quadro series as well as AMD's Radeon Pro series are currently the most popular professional graphics cards. These graphics cards have a large amount of memory and powerful parallel computing capabilities that can effectively accelerate model training and inference.

For local deployment of LLMs, we recommend the following hardware configuration:

A graphics card with 8GB or more of video memory.

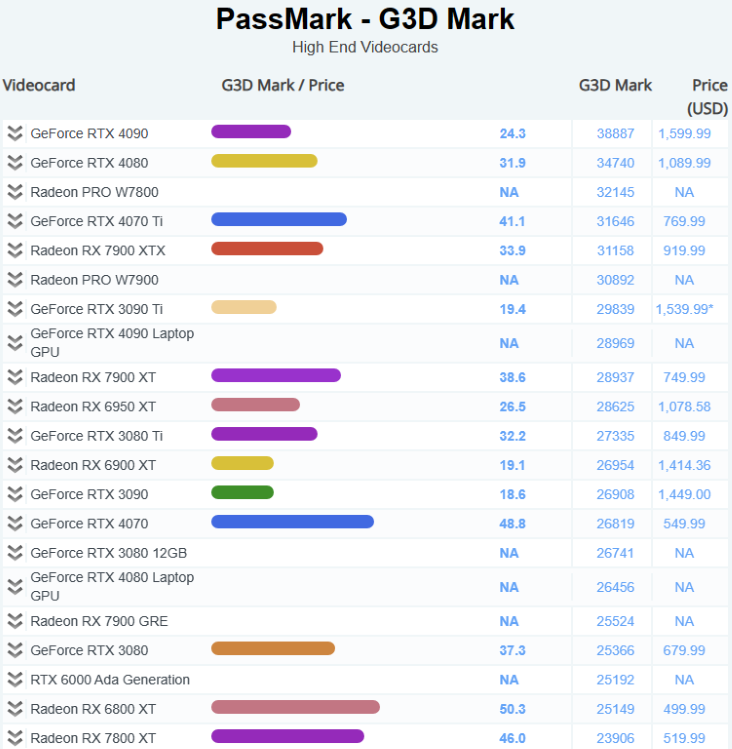

The following is the current GPU market situation for reference:

You can find more information in here: Pass Mark Software - Video Card (GPU) Benchmarks
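To see why video memory is the figure to watch, a back-of-the-envelope estimate helps: the weights alone take roughly (number of parameters) x (bytes per parameter). The sketch below applies that rule. It deliberately ignores activations, the KV cache, and framework overhead, so real usage will be higher. The function name and the 7-billion-parameter example are illustrative assumptions.

```python
def weight_memory_gb(params_billion, bits_per_param):
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes)."""
    bytes_total = params_billion * 1e9 * bits_per_param / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    # Compare common precisions for a 7-billion-parameter model.
    for bits in (16, 8, 4):
        print(f"7B parameters at {bits}-bit: ~{weight_memory_gb(7, bits):.1f} GB")
```

Under this estimate, a 7-billion-parameter model needs about 14 GB for its weights at 16-bit precision but only about 3.5 GB at 4-bit, which suggests why an 8GB card can be a workable lower bound for quantized models.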

3. Memory

DDR5 has become mainstream. For running large language models (LLMs), we recommend using as much memory as possible.

For local deployment of LLMs, we recommend the following hardware configuration:

At least 16GB of memory (DDR4 or newer).

4. Storage

The speed and capacity of storage devices directly affect data reading speed and data storage capacity. Generally speaking, storage devices with faster speed and larger capacity perform better but are also more expensive.

Solid State Drives (SSDs) have become mainstream storage devices due to their high speed and low latency. NVMe SSDs are gradually replacing SATA SSDs due to their higher speed.

For local deployment of LLMs, we recommend the following hardware configuration:

At least 1TB NVMe SSD.
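The recommendations in this section can be checked on an existing machine with a few lines of Python. The following is a minimal sketch using only the standard library. The thresholds come from the text above, except that the disk threshold is loosened to 900 GB on the assumption that a nominal 1TB drive reports somewhat less usable capacity. CPU frequency is not checked because the standard library offers no portable way to read it, and the RAM lookup relies on `os.sysconf`, which is available on Linux (including WSL) but not on native Windows.

```python
import os
import shutil

# Minimums taken from the recommendations in this section.
MIN_CORES = 4
MIN_RAM_GB = 16
MIN_DISK_GB = 900  # assumption: a nominal 1TB drive reports a bit less than 1000 GB


def total_ram_gb():
    """Total physical RAM in GB, or None if it cannot be determined."""
    try:
        return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / 1e9
    except (AttributeError, ValueError, OSError):
        return None


def check_hardware(cores, ram_gb, disk_gb):
    """Compare measured values against the recommended minimums."""
    return {
        "cpu_cores": cores is not None and cores >= MIN_CORES,
        "ram": ram_gb is not None and ram_gb >= MIN_RAM_GB,
        "disk": disk_gb is not None and disk_gb >= MIN_DISK_GB,
    }


if __name__ == "__main__":
    cores = os.cpu_count()
    ram = total_ram_gb()
    disk = shutil.disk_usage("/").total / 1e9  # size of the root filesystem
    for name, ok in check_hardware(cores, ram, disk).items():
        print(f"{name}: {'OK' if ok else 'below recommendation or unknown'}")
```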

Software Environment Configuration

1. Building and Analysis of Different Operating System Environments

When deploying LLMs locally, we need to consider the choice of operating system environment. Currently, the most common operating system environments include Windows, Linux, and MacOS. Each operating system has its own advantages and disadvantages, and we need to choose according to our own needs.

- Windows:

The Windows operating system is loved by a large number of users because of its user-friendly interface and rich software support. However, due to its closed-source nature, Windows may not be as good as Linux and MacOS in some advanced features and customization.

- Linux:

The Linux operating system is known for being open source and highly customizable and for its powerful command-line tools. For users who need to perform large-scale computing or server deployment, Linux is a good choice. However, due to its steep learning curve, it may be a bit difficult for beginners.

- MacOS:

The MacOS operating system is loved by many professional users for its elegant design and excellent performance. However, due to its hardware restrictions, MacOS may not be suitable for users who need to perform large-scale computing.

- Windows + WSL:

WSL (Windows Subsystem for Linux) lets us run a Linux environment on Windows, so we can enjoy the user-friendly Windows interface while using Linux's powerful command-line tools.

The above is our simple analysis of the different operating system environments. We strongly recommend Windows + WSL, which covers both daily use and our professional computing needs.

2. Related Software Configuration

After choosing the appropriate hardware configuration, we need to install and configure the necessary software. This includes the operating system, the programming language environment, development tools, and so on. Here are some common software configuration steps:

- Operating System:

First, we need to install an operating system. For training and inference of large language models (LLMs), we recommend using the Linux operating system because it provides powerful command line tools and a highly customizable environment.

- Programming Language Environment:

We need to install the Python environment because most LLMs are developed using Python. We recommend using Anaconda to manage the Python environment because it can easily install and manage Python packages.

- Development Tools:

We need to install some development tools, for example a text editor (such as VS Code or Sublime Text) and a version control tool (such as Git).

- LLMs Related Software:

We need to install some LLM-related software, such as a deep learning framework (TensorFlow or PyTorch) and Hugging Face's Transformers library.

3. Recommended Configuration

Here are some recommended software tools and versions:

- Operating System:

We recommend Windows 11 with Ubuntu 20.04 LTS running under WSL. Ubuntu is a stable and widely used Linux distribution with a rich package ecosystem and good community support.

- Python Environment:

We recommend using Anaconda to manage the Python environment. Anaconda is a popular Python data science platform that can easily install and manage Python packages. We recommend using Python 3.10 because it is a stable and widely supported version.

- Deep Learning Framework:

We recommend using PyTorch 2.0 or above, which is one of the most popular deep learning frameworks.
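Once the environment is set up, a short script can confirm that it matches the recommendations above. The following is a minimal sketch rather than a definitive check. It treats Python 3.10 as a minimum (the article only names 3.10 as the recommended version), detects WSL with a common heuristic (WSL kernels report "microsoft" in their release string), and reports on PyTorch only if it is installed. All function names are illustrative.

```python
import importlib.util
import platform
import sys


def python_ok(version_info=sys.version_info, minimum=(3, 10)):
    """True if the interpreter is at least the recommended version."""
    return tuple(version_info[:2]) >= minimum


def running_under_wsl(release=None):
    """Heuristic: WSL kernels report 'microsoft' in their release string."""
    release = platform.uname().release if release is None else release
    return "microsoft" in release.lower()


def torch_status():
    """Report the PyTorch version and CUDA availability, if PyTorch is installed."""
    if importlib.util.find_spec("torch") is None:
        return "PyTorch not installed"
    import torch
    return f"PyTorch {torch.__version__}, CUDA available: {torch.cuda.is_available()}"


if __name__ == "__main__":
    print("Python:", platform.python_version(),
          "OK" if python_ok() else "older than 3.10")
    print("WSL detected:", running_under_wsl())
    print(torch_status())
```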

Cloud computing platform

1. Platform

Here are some of the most popular cloud computing platforms:

1). Amazon Web Services (AWS)

Amazon Web Services (AWS) is a cloud computing platform provided by Amazon, Inc., with data centers around the world offering more than 200 full-featured services. AWS' services span infrastructure technologies such as compute, storage, and databases, as well as emerging technologies such as machine learning, artificial intelligence, data lakes and analytics, and the Internet of Things. AWS' customers include millions of active users and organizations of all sizes and industries, including startups, large enterprises, and government agencies.

2). Microsoft Azure

Microsoft Azure is a cloud computing platform provided by Microsoft that has the following advantages. Azure has multiple layers of security measures covering the data center, infrastructure, and operations layers to protect customer and organizational data. Azure also offers the most comprehensive compliance coverage, supporting more than 90 compliance offerings and industry-leading service-level agreements. Azure enables seamless operation across on-premises, multi-cloud, and edge environments, with tools and services designed for hybrid clouds, such as Azure Arc and Azure Stack. Azure also allows customers to use their own Windows Server and SQL Server licenses and save up to 40% on Azure. Azure supports all languages and frameworks, allowing customers to build, deploy, and manage applications in the language or platform of their choice. Azure also provides a wealth of AI and machine learning services and tools that allow customers to create intelligent applications and leverage OpenAI Whisper models for high-quality transcription and translation. Azure offers more than 200 services and tools that allow customers to scale applications without limit and take advantage of innovations in the cloud. Azure has rolled out more than 1,000 new features in the last 12 months, covering areas such as AI, machine learning, virtualization, Kubernetes, and databases.

3). Google Cloud Platform (GCP)

Google Cloud Platform (GCP) is a suite of cloud computing services and tools offered by Google. GCP allows customers to build, deploy, and scale applications, websites, and services on the same infrastructure as Google. GCP provides various technologies such as compute, storage, databases, analytics, machine learning, and Internet of Things. GCP is used by millions of customers across different industries and sectors, including startups, enterprises, and government agencies. GCP is also one of the most secure, flexible, and reliable cloud computing environments available today.

2. Advantages of Cloud Computing

- Cost-Effective: Cloud computing is more cost-effective compared to traditional IT infrastructure, as users only pay for the computing resources they use.

- Managed Infrastructure: The cloud provider manages the underlying infrastructure, including hardware and software.

- Scalability: The cloud is eminently scalable, allowing organizations to easily adjust their resource usage based on their needs.

- Global and Accessible: The cloud is global, convenient, and accessible, accelerating the time to create and deploy software applications.

- Unlimited Storage Capacity: No matter what cloud you use, you can buy all the storage you could ever need.

- Automated Backup/Restore: Cloud backup is a service in which the data and applications on a business's servers are backed up and stored on a remote server.

3. Disadvantages of Cloud Computing

- Data Security: While cloud providers implement robust security measures, storing sensitive data in the cloud can still pose risks.

- Compliance Regulations: Depending on your industry, there may be regulations that limit what data can be stored in the cloud.

- Potential for Outages: While rare, outages can occur with cloud services, leading to potential downtime.
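When weighing these trade-offs against buying local hardware, a simple break-even estimate can help. The sketch below is only an illustration: the function name and parameters are hypothetical, no real prices are implied, and it ignores factors such as depreciation, resale value, and maintenance time.

```python
def break_even_hours(hardware_cost, cloud_rate_per_hour, local_cost_per_hour=0.0):
    """Hours of use after which buying hardware is cheaper than renting.

    hardware_cost       -- one-off purchase price of the local machine
    cloud_rate_per_hour -- hourly price of a comparable cloud instance
    local_cost_per_hour -- running cost of the local machine (e.g. electricity)

    Returns None when the cloud rate does not exceed the local running
    cost, because the purchase then never pays for itself.
    """
    saving_per_hour = cloud_rate_per_hour - local_cost_per_hour
    if saving_per_hour <= 0:
        return None
    return hardware_cost / saving_per_hour
```

For example, calling `break_even_hours(2000, 1.0)` with these made-up figures gives 2000 hours: below that amount of use renting is cheaper, above it the local machine wins.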

Conclusion

Local deployment of LLMs and fine-tuning with personalized data is a complex but very valuable task, and the correct hardware configuration and software environment configuration are key to achieving this goal.

In terms of hardware configuration, we need to choose the appropriate CPU, GPU, memory, and storage devices according to our own budget and needs. In terms of software environment configuration, we need to choose the appropriate programming language interpreter, deep learning framework, and other necessary libraries according to our own operating system.

Although these tasks may present some challenges, as long as we have clear goals and are willing to invest time and energy in learning and practice, we will succeed.

I hope this article can provide you with some insights. In the next article, we will give an example of environment deployment.

Stay tuned!


The hardware and OS sections offer only generalities; the benchmarks shown cover desktop video cards but mobile processors. It is abstract and not applicable to the task.

It feels like the article was generated by an AI.

I wonder if LLM can be converted to ONNX and how much it would weigh :)

it seems possible

RWKV-4 weighs less than a gig.
