Population optimization algorithms: Binary Genetic Algorithm (BGA). Part I

Contents

1. Introduction

2. Methods of presenting features: real and binary

3. Gray binary code representation advantage

4. Selection methods

5. "Crossover" method types

6. "Mutation" method types

7. Summary

1. Introduction

In this article, we will take a closer look at the methods and techniques used in genetic algorithms, which can serve as tools and building blocks for creating various optimization algorithms in custom solutions. The purpose of this article is to describe these methods and provide tools for studying optimization problems with any optimization algorithm, not just genetic ones.

2. Methods of presenting features: real and binary

The parameters of optimization problems are often called "features" and must be represented in a certain way to be used in the logic of the optimization algorithm. In genetics, these characteristics are divided into phenotype and genotype. The phenotype is the actual value of the parameter being optimized, and the genotype is the way it is represented in the algorithm. In most optimization algorithms, the phenotype is the same as the genotype and is represented as a real number. A gene is a single optimized parameter, and a chromosome is a set of genes, i.e. the full set of optimized parameters.

Real data representation is used to represent fractional numbers. A real number can have an integer part and a fractional part separated by a decimal point. For example, "3.14" and "0.5" are real numbers.

Binary representation of data, on the other hand, uses the binary number system, in which numbers are written with only two symbols, "0" and "1"; each digit is called a bit (binary digit). For example, the number "5" is written in binary as "101".

The main difference between real and binary representation of data is the way the numbers are encoded. Real numbers are usually encoded using standards such as IEEE 754, which defines formats for representing floating point numbers. In the MQL5 language, the "double" data type is used for real numbers. It holds about 16 significant decimal digits in total. Its 53-bit mantissa corresponds to roughly 15.95 decimal digits, and only 15 digits are guaranteed to survive a round trip. This means that the total number of digits before and after the decimal point cannot exceed about sixteen. For example, "9 999 999 999 999 999.0", "9 999 999.999 999 999" and "0.999 999 999 999 999 9" each have sixteen "9" digits in total before and after the decimal point. I will explain why this is important a bit later.

Real numbers are convenient for use in writing programs and in everyday life, while binary numbers are used in computing systems and when performing low-level operations, including logical and bitwise ones.

In the context of optimization algorithms, including genetic algorithms, data can be represented as real or binary numbers, which are used to encode candidate solutions and perform operations on them.

The advantage of using real numbers in optimization algorithms is the ability to work with continuous parameter values. The real representation allows us to use numbers to encode features and perform solution search operations directly with these numbers. For example, if the solution is a vector with numeric values, then each element of the vector can be encoded with a real number.

However, the real representation has disadvantages. Individual elements of an optimization problem can be independent and represent multidimensional non-overlapping spaces. This division of the space into independent subspaces can make it harder to localize the global solution.

The advantage of binary representation of features lies in the ability to combine all the features into a single description of the multidimensional non-overlapping spaces. This links the individual spaces into one common search space. The binary representation also makes elementary bitwise operations, such as the "mutation" operator, easy to perform. With real numbers, the equivalent operations require additional, more time-consuming computations.

The binary representation in an optimization algorithm has two disadvantages. Values must be converted back into the real numbers that the optimization task ultimately operates on. It also adds overhead, such as storing the bit representation in arrays of considerable length.

Thus, real numbers provide the flexibility to work with continuous values, while the binary representation allows combining features into a single whole and performing bitwise operations on them more efficiently. Optimization algorithms, not only genetic ones, may well use both representations to obtain the advantages of each.

3. Gray binary code representation advantage

Despite all the advantages of the binary representation, it has a significant drawback: two adjacent decimal numbers can differ by several bits at once in binary. For example, moving from 7 (0111) to 8 (1000) changes all four bits. As a result, the number of bit changes needed to move between neighboring values varies from pair to pair. This leads to an uneven probability of outcomes in the search space: peculiar "bands of increased probability" and, conversely, "blind spots" appear in the optimized parameters.

For example, moving from one number to another that differs from it by only one unit in decimal notation may require changing several bits in binary notation. As a result, the probability of reaching the desired number through bit changes differs from one pair of numbers to another, since each changed bit affects the final result. This phenomenon can be especially problematic in floating point calculations, where the precision of the number representation matters greatly. There, a small change in the decimal value of a number can correspond to a large change in its binary representation and, consequently, in the results of the calculation.

In the Gray code binary representation (also known as the reflected binary code), each number is also represented as a set of bits, but each subsequent number differs from the previous one in exactly one bit. This ensures a smooth transition between consecutive numbers, a property called the "unit distance property".
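The unit-distance property is easy to check in code. The following is a minimal sketch in standard C++ rather than MQL5, so that it compiles on its own; the bit operations look the same in MQL5. The helper names `toGray`, `hammingDistance` and `grayStepDistance` are illustrative and not part of the article's code. Gray encoding is computed as `n ^ (n >> 1)`, the same formula the article's DecimalToGray function uses.

```cpp
#include <bitset>
#include <cassert>
#include <cstdint>
#include <limits>

// Gray code of n: XOR of n with n shifted right by one bit.
uint64_t toGray(uint64_t n) { return n ^ (n >> 1); }

// Number of bit positions in which a and b differ.
int hammingDistance(uint64_t a, uint64_t b) {
    return static_cast<int>(std::bitset<64>(a ^ b).count());
}

// Number of bits that change when stepping from n to n + 1 in Gray code.
int grayStepDistance(uint64_t n) {
    assert(n < std::numeric_limits<uint64_t>::max());  // n + 1 must not wrap around
    return hammingDistance(toGray(n), toGray(n + 1));
}
```

For example, `hammingDistance(7, 8)` is 4 in plain binary. The Gray codes of 7 and 8 (0100 and 1100) differ in a single bit, so `grayStepDistance(7)` is 1.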

Previously, I mentioned that a "double" holds only about 16 significant digits. Let's look at an example to better understand what impact this may have.

Suppose that we have a number with 15 zeros after the decimal point and "1" in the 16th place: "0.0000000000000001". Now let's add 1.0 to this number: the result is "1.0000000000000000". Note that the "1" in the 16th decimal place has disappeared, leaving us with at most 15 significant digits after the decimal point. When a digit is added to the integer part of a "double", the significant digits are shifted to the left. Thus, if the integer part is non-zero, we cannot guarantee precision to the 16th decimal place.
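The effect can be checked directly. This C++ sketch relies only on IEEE 754 doubles, which MQL5's double also uses; the helper `survivesAddition` is hypothetical. The gap between 1.0 and the next representable double is `DBL_EPSILON`, about 2.2e-16. Adding 1e-16 to 1.0 therefore rounds back to 1.0, while the same value added to 0.0 is stored without loss.

```cpp
#include <cassert>
#include <cfloat>

// Returns true if base + tiny is stored as a double different from base.
bool survivesAddition(double base, double tiny) {
    assert(tiny > 0.0);
    volatile double sum = base + tiny;  // volatile keeps the sum in a real double variable
    return sum != base;
}
```

On IEEE 754 platforms `DBL_DIG` is 15, the number of decimal digits guaranteed to survive a round trip through a double. This is why sixteen digits is an upper bound rather than a guarantee.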

In the binary representation, and in particular when using the Gray code, we can avoid this loss of precision if a specific task requires it. Let's explore this aspect in greater detail.

Computers work with programs and numbers in binary representation because this is the most efficient and natural way for a machine to process information. However, writing optimization algorithms at a high level that work with numbers in binary form takes some additional effort, especially for negative numbers and numbers with a fractional part.

In high-level programming, various libraries and tools make it easier to process and represent negative and fractional numbers in binary form. But we will implement everything ourselves as part of the optimization algorithm in MQL5.

Negative numbers are represented in binary using two's complement: a negative number is obtained by inverting all the bits of the corresponding positive number and adding one to the result. But we will use a little trick and avoid additional operations for working with negative numbers by simply getting rid of them. Let's assume that one of the optimized parameters has boundaries:

min = -156.675 and max = 456.6789

then the distance between "max" and "min" is:

distance = max - min = 456.6789 - (-156.675) = 613.3539

If we encode the distance of a value from "min" rather than the value itself, the required number in the optimization problem will always be non-negative. It will be located to the right of 0.0 on the number line and have a maximum value equal to 613.3539. Now the task is to encode this real number. To do this, we will split it into integer and fractional parts. The integer part will be represented in Gray code as follows (the representation method is indicated in brackets):

613 (decimal) = 1 1 0 1 0 1 0 1 1 1 (binary Gray)

Then the fractional part will look like this:

3539 (decimal) = 1 0 1 1 0 0 1 1 1 0 1 0 (binary Gray)
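The offset-and-split step can be sketched as follows. This is a C++ illustration under stated assumptions. The helper `splitOffset` and the struct `SplitOffset` are hypothetical names. The fractional part is stored as an integer with a fixed number of decimal digits (3539 for .3539, as above). Because the input arrives as a double, the sketch is limited to what a double can carry: it shows the shift-and-split idea, not the extended-precision path.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical result type: integer part and fractional digits of the offset.
struct SplitOffset {
    uint64_t intPart;   // e.g. 613
    uint64_t fracPart;  // fractional digits as an integer, e.g. 3539 for .3539
};

// Shifts x from [minV, maxV] to the non-negative offset x - minV and splits it.
// Limited by double precision: keep integer digits + fracDigits well below 16.
SplitOffset splitOffset(double x, double minV, double maxV, int fracDigits) {
    assert(minV <= x && x <= maxV);
    assert(fracDigits >= 0 && fracDigits <= 9);
    const double offset = x - minV;  // never negative, so no sign handling is needed
    uint64_t scale = 1;
    for (int i = 0; i < fracDigits; ++i) scale *= 10;
    const double whole = std::floor(offset);
    uint64_t intPart = static_cast<uint64_t>(whole);
    uint64_t fracPart =
        static_cast<uint64_t>(std::llround((offset - whole) * static_cast<double>(scale)));
    if (fracPart == scale) {  // rounding carried into the integer part
        ++intPart;
        fracPart = 0;
    }
    return {intPart, fracPart};
}
```

With the boundaries from the example, `splitOffset(456.6789, -156.675, 456.6789, 4)` yields 613 and 3539. Rounding the fractional digits instead of truncating them matters here: 613.3539 is not exactly representable as a double, so truncation could produce 3538.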

We can store the integer and fractional parts in one string, while remembering the number of characters used for both parts, which allows us to easily carry out the reverse conversion. As a result, the real number will look like this (the ":" sign conditionally separates the integer and fractional parts):

613.3539 (decimal) = 1 1 0 1 0 1 0 1 1 1 : 1 0 1 1 0 0 1 1 1 0 1 0 (binary Gray)

Thus, we can convert any real number with up to 16 decimal digits in its integer part and up to 16 decimal places in its fractional part, regardless of the 16-digit limit of the double type. Moreover, the total number of significant digits can exceed 16, reaching 32 or more. Each part is limited only by the length of the ulong type used in the functions for converting decimal integers to Gray code and back, and ulong holds any 19-digit number.

To ensure that the fractional part is accurate to the 16th digit, we need to be able to convert the number "9,999,999,999,999,999" (the largest possible 16-digit fractional part):

9999999999999999 (decimal) = 1 1 0 0 1 0 0 1 0 0 0 1 0 1 1 0 0 0 1 0 1 1 0 1 0 1 1 0 0 0 0 0 1 0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 (binary Gray)

Then the final record of our number 613.9999999999999999 (having 16 decimal places) will look like this:

613.9999999999999999 (decimal) = 1 1 0 1 0 1 0 1 1 1 : 1 1 0 0 1 0 0 1 0 0 0 1 0 1 1 0 0 0 1 0 1 1 0 1 0 1 1 0 0 0 0 0 1 0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 (binary Gray)

As we can see, the user can set any precision after the decimal point within the ulong type length.
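A fixed-width variant of this encoding can be sketched in C++. `grayBits` and `encodeReal` are hypothetical helpers. The chromosome is modelled as a string of '0'/'1' characters for readability, whereas the article's MQL5 code stores bits in char arrays. Padding each part to a fixed width keeps the junction between the integer and fractional parts at a known position.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Gray-encodes `value` into exactly `width` '0'/'1' characters, most significant bit first.
std::string grayBits(uint64_t value, int width) {
    assert(width >= 1 && width <= 64);
    assert(width == 64 || value < (1ULL << width));  // the value must fit into `width` bits
    const uint64_t gray = value ^ (value >> 1);
    std::string bits(width, '0');
    for (int i = 0; i < width; ++i)
        if ((gray >> i) & 1ULL) bits[width - 1 - i] = '1';
    return bits;
}

// Hypothetical helper: integer-part bits followed by fractional-part bits, no separator.
std::string encodeReal(uint64_t intPart, int intBits, uint64_t fracPart, int fracBits) {
    return grayBits(intPart, intBits) + grayBits(fracPart, fracBits);
}
```

With widths of 10 and 12 bits, `encodeReal(613, 10, 3539, 12)` reproduces the record of 613.3539 shown earlier, without the ":" separator. A 16-digit fraction needs 54 bits because 2^53 < 9999999999999999 < 2^54, and `grayBits(9999999999999999, 54)` reproduces the 54-bit record above.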

Let's look at functions for working with converting decimal integers to Gray code and vice versa. For Gray code encoding and decoding, additional functions are used to convert to regular binary code.

The DecimalToGray function takes the "decimalNumber" and a reference to the "array" where the conversion result will be stored. It converts a number from decimal to Gray code. To do this, it first calculates the "grayCode" value as the bitwise XOR of "decimalNumber" and "decimalNumber" shifted one bit to the right. It then calls the IntegerToBinary function to convert the resulting "grayCode" value into a binary representation and store it in the "array". The array uses the char type, the most memory-efficient integer type, to store the individual bits.

The IntegerToBinary function accepts the "number" and a reference to the "array" where the conversion result will be stored. It converts a number from the decimal number system to the binary one. In the loop, it takes the remainder of "number" divided by 2 and appends it to the "array". It then divides "number" by 2 and increments the "cnt" counter. The loop continues as long as "number" is greater than zero. After the loop, the "array" is reversed to get the correct bit order (most significant bit first). Note that for a zero "number", the resulting array is empty.

The GrayToDecimal function accepts a reference to the "grayCode" array, the "startInd" initial index and the "endInd" end index. It converts a number from Gray code to the decimal number system. It first calls the BinaryToInteger function to turn the bits of the "grayCode" array between the two indices into an integer and stores it in the "grayCodeS" variable. It then initializes the "result" variable with the "grayCodeS" value. Next, it executes a loop that shifts "grayCodeS" one bit to the right and applies the bitwise XOR operation to "result". The loop continues as long as the shifted "grayCodeS" is greater than zero. At the end, the function returns the "result" value.

The BinaryToInteger function accepts a reference to the "binaryStr" array, the "startInd" initial index and the "endInd" end index. It converts a number from the binary number system to the decimal one. The function initializes the "result" variable with zero. It then executes a loop that shifts "result" one bit to the left and adds the value of "binaryStr[i]" (a bit) to it. The loop runs from "startInd" to "endInd" inclusive. At the end, the function returns the "result" value.

Note that the start and end indices are used when converting back from the Gray code. This allows extracting a single optimized parameter from the "string" in which all the optimized parameters are stored. We know the total length of each parameter's record, including its integer and fractional parts. We can therefore determine the position of every number in the common chromosome string, including the junction of the integer and fractional parts.

```mql5
//——————————————————————————————————————————————————————————————————————————————
// Converting a decimal number to Gray code
void DecimalToGray(ulong decimalNumber, char &array[])
{
  ulong grayCode = decimalNumber ^ (decimalNumber >> 1);
  IntegerToBinary(grayCode, array);
}

// Converting a decimal number to a binary number (most significant bit first).
// For number == 0 the array stays empty.
void IntegerToBinary(ulong number, char &array[])
{
  ArrayResize(array, 0);
  ulong temp;
  int   cnt = 0;

  while (number > 0)
  {
    ArrayResize(array, cnt + 1);
    temp = number % 2;          // lowest remaining bit
    array[cnt] = (char)temp;
    number = number / 2;
    cnt++;
  }

  ArrayReverse(array, 0, WHOLE_ARRAY); // bits were collected lowest bit first
}
//——————————————————————————————————————————————————————————————————————————————

//——————————————————————————————————————————————————————————————————————————————
// Converting from Gray code to a decimal number
ulong GrayToDecimal(const char &grayCode[], int startInd, int endInd)
{
  ulong grayCodeS = BinaryToInteger(grayCode, startInd, endInd);
  ulong result    = grayCodeS;

  // binary value = XOR of the Gray value with all of its right shifts
  while ((grayCodeS >>= 1) > 0)
  {
    result ^= grayCodeS;
  }
  return result;
}

// Converting bits startInd..endInd (inclusive) of a binary string to a decimal number
ulong BinaryToInteger(const char &binaryStr[], const int startInd, const int endInd)
{
  ulong result = 0;
  if (startInd > endInd) return 0; // empty range; a one-bit range (startInd == endInd) is still decoded

  for (int i = startInd; i <= endInd; i++)
  {
    result = (result << 1) + binaryStr[i];
  }
  return result;
}
//——————————————————————————————————————————————————————————————————————————————
```
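To check the decoding logic outside MetaTrader, here is a C++ port of GrayToDecimal and BinaryToInteger. It works on a string of '0'/'1' characters instead of a char array, an assumption made for readability. `decodeParameter` is a hypothetical helper. It shows how the start and end indices pick the integer and fractional segments out of a chromosome, and how those segments are turned back into a real value using the parameter's lower bound.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Bits startInd..endInd (inclusive, most significant first) of a '0'/'1' string as an integer.
uint64_t binaryToInteger(const std::string& bits, int startInd, int endInd) {
    assert(0 <= startInd && startInd <= endInd && endInd < static_cast<int>(bits.size()));
    assert(endInd - startInd < 64);  // the segment must fit into 64 bits
    uint64_t result = 0;
    for (int i = startInd; i <= endInd; ++i)
        result = (result << 1) | static_cast<uint64_t>(bits[i] - '0');
    return result;
}

// Gray code to binary: XOR the Gray value with all of its right shifts.
uint64_t grayToDecimal(const std::string& bits, int startInd, int endInd) {
    uint64_t gray = binaryToInteger(bits, startInd, endInd);
    uint64_t result = gray;
    while ((gray >>= 1) > 0) result ^= gray;
    return result;
}

// Hypothetical helper: rebuilds one real parameter from a chromosome segment that
// starts at firstBit and is laid out as [intBits integer bits][fracBits fractional bits].
double decodeParameter(const std::string& chromosome, int firstBit, int intBits, int fracBits,
                       int fracDigits, double minV) {
    const uint64_t intPart = grayToDecimal(chromosome, firstBit, firstBit + intBits - 1);
    const uint64_t fracPart = grayToDecimal(chromosome, firstBit + intBits,
                                            firstBit + intBits + fracBits - 1);
    double scale = 1.0;
    for (int i = 0; i < fracDigits; ++i) scale *= 10.0;
    return minV + static_cast<double>(intPart) + static_cast<double>(fracPart) / scale;
}
```

Consider the 22-bit record of 613.3539, decoded with 10 integer bits, 12 fractional bits, 4 decimal places and min = -156.675. The result is approximately 456.6789, the upper boundary from the example.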

4. Selection methods

In optimization algorithms, selection is the process of choosing solutions from a population or a set of candidates, usually favoring the better ones, to create the next generation. It plays a critical role in optimization methods: the choice of a specific selection option affects not only the search capabilities of the algorithm, but also the speed of its convergence. All selection methods have their advantages and disadvantages and may be more effective in some algorithms and less effective in others. The choice of method depends on the specific search strategy.

There are several main types of selection used to select parent individuals and form a new generation. Let's consider each of them in detail:

- Uniform Selection is a method of parent selection in which each individual in the population has the same probability of being selected for reproduction. Its advantage is that every individual gets a chance to pass on its genes. However, this method does not take into account the fitness of individuals, so it may be less effective in finding the optimal solution, especially when some individuals have significantly higher fitness than others. Uniform selection can be useful in some cases, such as when you want to explore different regions of the solution space or maintain a high degree of diversity in a population.

- In Rank Selection, individuals are ranked according to their fitness, and the probability of selecting an individual depends on its rank: the higher the rank, the more likely the individual is to be selected. Rank selection can be fully proportional, where the probability of choosing an individual is proportional to its rank (ordinal number). It can also be partially proportional, where the probability increases with rank according to a nonlinear law. Rank selection has a number of advantages. First, it helps maintain diversity in the population, since less fit individuals also have a chance of being selected. Second, it reduces the risk of premature convergence, where the population converges too quickly to a local optimum, which helps explore a wider solution space. Rank selection is similar to uniform selection, but places greater emphasis on selecting the fittest individuals while leaving the less fit a chance to be selected.

- In Tournament Selection, a small subset of individuals (called a tournament) is randomly selected, and the individual with the highest fitness in this subset wins. The tournament size determines how many individuals participate in each tournament. Tournament selection has several advantages. First, the random composition of the tournament introduces randomness into parent selection, which promotes offspring diversity: even less fit individuals can be selected by chance. Second, parents can come from any part of the population, including both the best and the less fit individuals. Tournament selection is one of the main parent selection methods in evolutionary algorithms. It is often used together with other operators, such as crossover and mutation, to create new offspring and continue the evolution of a population.

- In Roulette Wheel Selection, each individual is represented on an imaginary roulette wheel in proportion to its fitness. The roulette wheel "spins" and the individual the "pointer" points to is selected. The higher the fitness of an individual, the larger the sector of the roulette wheel it occupies, and the greater the chance of being selected. Roulette selection allows parents to be selected with probabilities proportional to their fitness. Individuals with higher fitness have a greater chance of being selected, but even less fit individuals have a non-zero probability of being selected. This allows preserving genetic diversity in the population and exploring different regions of the solution space. However, roulette wheel selection may have a problem with premature convergence, especially if the fitness of individuals varies greatly. Individuals with high fitness will have a much greater chance of being selected, which can lead to a loss of genetic diversity, "clogging" the population with similar solutions and getting stuck in local optima.
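The three fitness-based schemes can be sketched as follows. This is an illustrative C++ version with hypothetical function names. It assumes that higher fitness is better. For the roulette wheel, it also assumes all fitness values are non-negative; negative values would first have to be shifted, for example by subtracting the minimum. Rank selection is shown in its linear (fully proportional) form: the worst individual gets rank 1, the best gets rank n, and the probabilities are rank / (n(n+1)/2).

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <random>
#include <vector>

// Roulette wheel: the chance of index i is fitness[i] / sum(fitness); fitness must be >= 0.
int rouletteSelect(const std::vector<double>& fitness, std::mt19937& rng) {
    assert(!fitness.empty());
    double total = 0.0;
    for (double f : fitness) { assert(f >= 0.0); total += f; }
    if (total <= 0.0) {  // all sectors empty: fall back to uniform selection
        std::uniform_int_distribution<int> any(0, static_cast<int>(fitness.size()) - 1);
        return any(rng);
    }
    std::uniform_real_distribution<double> spin(0.0, total);
    const double pointer = spin(rng);
    double cumulative = 0.0;
    for (size_t i = 0; i < fitness.size(); ++i) {
        cumulative += fitness[i];
        if (pointer < cumulative) return static_cast<int>(i);
    }
    return static_cast<int>(fitness.size()) - 1;  // guard against rounding at the upper edge
}

// Tournament: draw `size` individuals at random (with replacement) and keep the fittest.
int tournamentSelect(const std::vector<double>& fitness, int size, std::mt19937& rng) {
    assert(!fitness.empty() && size >= 1);
    std::uniform_int_distribution<int> pick(0, static_cast<int>(fitness.size()) - 1);
    int best = pick(rng);
    for (int k = 1; k < size; ++k) {
        const int challenger = pick(rng);
        if (fitness[challenger] > fitness[best]) best = challenger;
    }
    return best;
}

// Linear rank selection: worst individual gets rank 1, best gets rank n,
// and P(i) = rank(i) / (n * (n + 1) / 2).
std::vector<double> linearRankProbabilities(const std::vector<double>& fitness) {
    const size_t n = fitness.size();
    std::vector<size_t> order(n);
    std::iota(order.begin(), order.end(), size_t{0});
    std::sort(order.begin(), order.end(),
              [&](size_t a, size_t b) { return fitness[a] < fitness[b]; });
    std::vector<double> probability(n);
    const double rankSum = n * (n + 1) / 2.0;
    for (size_t r = 0; r < n; ++r) probability[order[r]] = (r + 1) / rankSum;
    return probability;
}
```

In this sketch, tied fitness values receive different ranks; averaging their ranks is a common refinement. The C++ standard library's `std::discrete_distribution` can also play the role of the roulette wheel.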

The "elitism" method can be used in conjunction with any selection method as an additional measure. It consists of preserving the best individuals from the current population and passing them on to the next generation without changes. The use of the "elitism" method allows the best individuals to be preserved from generation to generation, which helps maintain high fitness and prevent the loss of useful information. This helps speed up the convergence of the algorithm and improve the quality of the solution found.

However, it should be taken into account that the use of "elitism" can lead to a loss of genetic diversity, especially if elitism is set at a high level. Therefore, it is important to choose an appropriate elitism value to maintain a balance between preserving the best individuals and exploring the solution space.
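Elitism can be sketched as copying the best individuals into the next generation ahead of the offspring. `eliteIndices` and `withElites` are hypothetical names. Higher fitness is assumed to be better, and the offspring are assumed to be produced elsewhere by selection, crossover and mutation.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <vector>

// Indices of the `eliteCount` fittest individuals, best first.
std::vector<int> eliteIndices(const std::vector<double>& fitness, int eliteCount) {
    assert(eliteCount >= 0 && eliteCount <= static_cast<int>(fitness.size()));
    std::vector<int> order(fitness.size());
    std::iota(order.begin(), order.end(), 0);
    std::partial_sort(order.begin(), order.begin() + eliteCount, order.end(),
                      [&](int a, int b) { return fitness[a] > fitness[b]; });
    order.resize(eliteCount);
    return order;
}

// Next generation: elites copied unchanged, then offspring fill the remaining slots.
template <typename Individual>
std::vector<Individual> withElites(const std::vector<Individual>& population,
                                   const std::vector<double>& fitness,
                                   const std::vector<Individual>& offspring,
                                   int eliteCount) {
    std::vector<Individual> next;
    for (int i : eliteIndices(fitness, eliteCount)) next.push_back(population[i]);
    for (const Individual& child : offspring) {
        if (next.size() == population.size()) break;
        next.push_back(child);
    }
    return next;
}
```

Keeping `eliteCount` small relative to the population size helps preserve the balance between exploitation and diversity.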

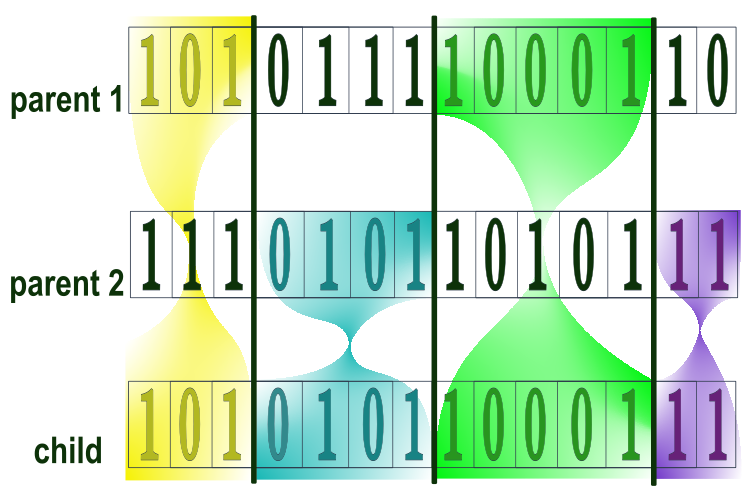

5. "Crossover" method types

Crossover is one of the most important operations in genetic algorithms; it imitates cross-breeding in natural evolution. It combines the genetic information of two parent individuals to create offspring that inherit characteristics of both parents. Crossover matters not only as a means of transmitting information: it also has a large impact on the algorithm's ability to combine existing solutions into new ones.

Crossover works at the genotype level and allows combining the genes of parent individuals to create new offspring.

The general principle of the crossover method is as follows:

- Selecting parent individuals: Two or several individuals are selected from a population to be interbred.

- Determining the crossover point: The crossover point determines the location where the parents' chromosomes will be separated to create offspring.

- Offspring creation: The chromosomes of the parents are separated at the point of crossover, and parts of the chromosomes of both parents are combined to create a new genotype of the offspring. Crossover results in one or more offspring that may have a combination of genes from both parents.

Figure 1. The general principle of the crossover method

Let's list the main types of binary crossover:

- Single-Point Crossover - both parent chromosomes are cut at the same random point, and two offspring are produced by exchanging the segments after that point.

- Multi-Point Crossover - similar to the single-point one but with multiple breakpoints, between which chromosome segments are exchanged.

- Uniform Crossover - each bit of the child is taken independently from one of the two parents with equal probability.

- Cycle Crossover - defines cycles of positions that will be exchanged between parents, preserving the uniqueness of the elements.

- PMX (Partially Mapped Crossover) - preserves the relative order and position of elements from the parent chromosomes, used in problems where order is important, such as the traveling salesman problem.

- OX (Order Crossover) - creates an offspring by copying a segment of one parent's genes and filling the remaining positions with the missing genes from the other parent in the order they appear there.
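Single-point and uniform crossover on bit strings could look like this in C++. Chromosomes are modelled as strings of '0'/'1' characters (an assumption for readability), and the function names are illustrative.

```cpp
#include <cassert>
#include <random>
#include <string>
#include <utility>

using Chromosome = std::string;  // '0'/'1' characters, most significant bit first

// Single-point crossover: cut both parents at `cut` and swap the tails.
std::pair<Chromosome, Chromosome> singlePoint(const Chromosome& a, const Chromosome& b,
                                              size_t cut) {
    assert(a.size() == b.size() && cut <= a.size());
    return {a.substr(0, cut) + b.substr(cut), b.substr(0, cut) + a.substr(cut)};
}

// Random cut in [1, n - 1], so each child receives genes from both parents.
std::pair<Chromosome, Chromosome> singlePointRandom(const Chromosome& a, const Chromosome& b,
                                                    std::mt19937& rng) {
    assert(a.size() >= 2);
    std::uniform_int_distribution<size_t> cutDist(1, a.size() - 1);
    return singlePoint(a, b, cutDist(rng));
}

// Uniform crossover: each child bit comes from either parent with probability 0.5.
Chromosome uniformCrossover(const Chromosome& a, const Chromosome& b, std::mt19937& rng) {
    assert(a.size() == b.size());
    std::bernoulli_distribution fromA(0.5);
    Chromosome child(a.size(), '0');
    for (size_t i = 0; i < a.size(); ++i) child[i] = fromA(rng) ? a[i] : b[i];
    return child;
}
```

Multi-point crossover follows the same pattern, alternating the source parent at each of several cut points.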

Crossovers are used not only for binary representation, but also for real one. The main types of real crossovers include:

- Blend Crossover (BLX-α) - creates children whose values are chosen randomly within the range defined by the values of the parents and the "α" parameter, which extends that range.

- Simulated Binary Crossover (SBX) - generates two children placed symmetrically around the parents using a spread factor drawn from a polynomial probability distribution. Children usually lie close to the parents, and the spread grows with the difference between the parents.

- Real-Coded Crossover - applies arithmetic operations to real numbers, such as averaging or weighted summation of the parent values.

- Differential Crossover - used, for example, in differential evolution, where a new vector is generated by adding the weighted difference between two individuals to a third one.

- Interpolation Crossover - creates children by interpolating between parent values, which may involve linear or nonlinear interpolation.

- Normal Distribution Crossover - children are generated using a normal distribution around the values of the parents, allowing the solution space to be explored around the parent points.
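Three of the real-coded operators above are simple to write down. This C++ sketch shows BLX-α, which samples a child uniformly from the parents' interval widened by α·d on each side, where d = |p1 - p2|. It also shows a weighted-average (arithmetic) crossover and the differential vector of differential evolution. The names are illustrative. A real implementation usually also needs to clip the child to the parameter's [min, max] range, which is left out here.

```cpp
#include <algorithm>
#include <cassert>
#include <random>
#include <vector>

// BLX-alpha: uniform sample from [lo - alpha*d, hi + alpha*d], where d = hi - lo.
double blxAlpha(double p1, double p2, double alpha, std::mt19937& rng) {
    assert(alpha >= 0.0);
    const double lo = std::min(p1, p2), hi = std::max(p1, p2);
    const double d = hi - lo;
    if (d == 0.0) return lo;  // identical parents: the interval collapses to a point
    std::uniform_real_distribution<double> dist(lo - alpha * d, hi + alpha * d);
    return dist(rng);
}

// Arithmetic crossover: weighted average of the parents, weight w in [0, 1].
double arithmeticCrossover(double p1, double p2, double w) {
    assert(w >= 0.0 && w <= 1.0);
    return w * p1 + (1.0 - w) * p2;
}

// Differential-evolution style vector: base + F * (x1 - x2), coordinate by coordinate.
std::vector<double> differentialVector(const std::vector<double>& base,
                                       const std::vector<double>& x1,
                                       const std::vector<double>& x2, double F) {
    assert(base.size() == x1.size() && x1.size() == x2.size());
    std::vector<double> v(base.size());
    for (size_t i = 0; i < v.size(); ++i) v[i] = base[i] + F * (x1[i] - x2[i]);
    return v;
}
```

With α = 0.5 and parents 1.0 and 3.0, BLX-α draws children from [0.0, 4.0), so it can explore slightly beyond the parents.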

6. "Mutation" method types

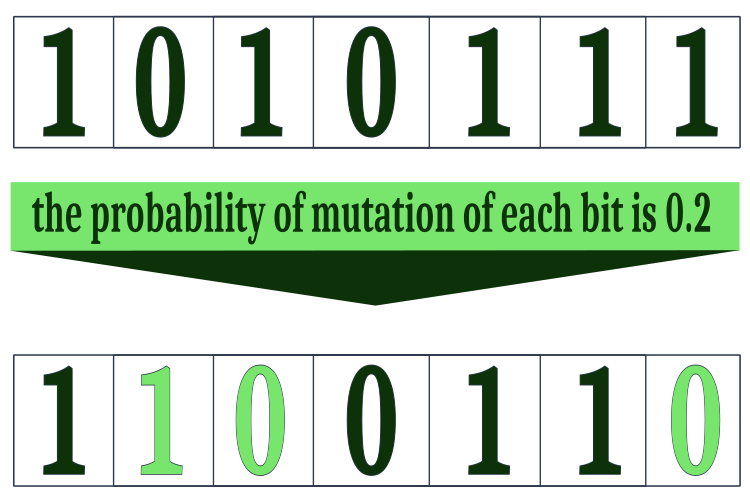

The mutation operator is one of the main operations in genetic algorithms and is used to introduce random changes into the genetic information of individuals in a population. It helps explore new solutions and avoid getting stuck in local optima.

In biology, mutation is extremely rare and usually has a limited effect on the offspring. The number of individuals of each species in nature amounts to millions, so the genetic material of the population is very diverse. The exception is endangered species: a sharp reduction in population size, known as a "bottleneck", drastically reduces genetic diversity. As in living nature, mutations in most implementations of genetic algorithms are rare, with a probability of approximately 1-2%. However, mutation matters much more in genetic algorithms. In biology, we usually talk about populations of millions of individuals, while in genetic algorithms (and optimization algorithms in general) we are dealing with only tens or hundreds of individuals. With such small populations, inbreeding can be a problem and evolutionary dead ends are more common than in biological evolution, and mutation is the only way to add new information to the gene pool of the population.

Thus, if some genetic information is missing in the population, a mutation provides an opportunity to introduce it.

So, mutation plays several important roles:

- Exploring new solutions - mutation allows the algorithm to explore potential solutions that may lie outside the region of the solution space explored by crossover. It lets the algorithm "jump" through the solution space and discover new options.

- Maintaining genetic diversity - without mutation, the algorithm may encounter the problem of converging to a local optimum, where all individuals have similar genetic information. Mutation allows for random changes, which helps avoid premature convergence and maintain diversity in the population.

- Overcoming evolutionary dead ends - during the evolution, genetic algorithms can sometimes get stuck in evolutionary dead ends, where the solution space is exhausted and a better solution cannot be reached. Mutation can help overcome these dead ends by introducing random changes that can open up new possibilities and allow the algorithm to move forward.

Too high a mutation probability can cause the algorithm to degenerate into a random search, and too low a probability can lead to convergence problems and loss of diversity.

The following types of mutation methods can be distinguished for algorithms with binary representation:

- Single-point mutation - the value of one randomly selected bit in the binary string is inverted. For example, if we have the string "101010", then a single-point mutation can change it to "100010".

- Multi-point mutation - several random positions in a binary string are selected and the values at these positions are inverted. For example, if we have the string "101010", then a multi-point mutation can change it to "100000" or "001000".

- Probability-based (stochastic) mutation - each bit independently has a certain probability of being inverted.

- Complete inversion - a complete inversion of the bits in the binary string. For example, if we have the string "101010", then inversion changes it to "010101".

- Point inversion - a break point in the genetic sequence is randomly selected, the chromosome is divided into two parts at this point and the parts are swapped.

Figure 2. Probability-based mutation
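The binary mutation types listed above take a few lines of C++ each. Bit strings are modelled as '0'/'1' characters (an assumption for readability), and the function names are hypothetical. The per-bit probability `p` plays the role of the mutation rate.

```cpp
#include <algorithm>
#include <cassert>
#include <random>
#include <string>

// Probability-based mutation: each bit flips independently with probability p.
void bitFlipMutation(std::string& bits, double p, std::mt19937& rng) {
    assert(p >= 0.0 && p <= 1.0);
    std::bernoulli_distribution flip(p);
    for (char& c : bits)
        if (flip(rng)) c = (c == '0') ? '1' : '0';
}

// Single-point mutation: invert exactly one randomly chosen bit.
void singlePointMutation(std::string& bits, std::mt19937& rng) {
    assert(!bits.empty());
    std::uniform_int_distribution<size_t> pos(0, bits.size() - 1);
    char& c = bits[pos(rng)];
    c = (c == '0') ? '1' : '0';
}

// Complete inversion: invert every bit.
void completeInversion(std::string& bits) {
    for (char& c : bits) c = (c == '0') ? '1' : '0';
}

// Point inversion: cut the chromosome at `cut` and swap the two parts.
void pointInversion(std::string& bits, size_t cut) {
    assert(cut <= bits.size());
    std::rotate(bits.begin(), bits.begin() + cut, bits.end());
}
```

With p = 1 the probability-based mutation degenerates into complete inversion ("101010" becomes "010101"), and with p = 0 it leaves the string unchanged.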

The following types of mutations are distinguished in optimization algorithms with real data representation. I will not dwell on them in detail:

- Random Mutation Operator.

- Gaussian Mutation Operator.

- Arithmetic real number creep operator.

- Geometrical real number creep operator.

- Power mutation operator.

- Michalewicz's non-uniform operator.

- Dynamic mutation operator.

In general, in any optimization algorithm, an operation that changes an agent's position in the search space without exchanging information between agents (individuals) can be called a mutation. Such operations are often highly specific to the search strategy of a particular algorithm.

7. Summary

This is the first part of the article on the "Binary Genetic Algorithm", in which we looked at almost all the methods used not only in this algorithm, but also in other population optimization algorithms, even if they do not use the terms "selection", "crossover" and "mutation". By studying and understanding these methods well, we will be able to approach optimization problems more consciously, and new ideas may arise for implementing and modifying known algorithms, as well as for creating new ones. We also looked at different ways of representing information in optimization algorithms, their advantages and disadvantages, which may lead to new ideas and fresh approaches.

Translated from Russian by MetaQuotes Ltd.

Original article: https://www.mql5.com/ru/articles/14053


That caught my attention.

I've read it. What is missing is a diagram showing the general representation of an optimisation algorithm.

For all optimisation algorithms without exception, not only for GA, the order of operators (methods) is always the same, in the order as in the Table of Contents:

1. Selection.

2. Crossover.

3. Mutation.

Each particular algo may be missing one or two operators, but the order is always the same. This order can surely be justified logically and related to probabilities, and the goal of any optimisation algorithm is to combine probabilities in favour of solving the problem.

There's also a fourth method, the method of placing new individuals into a population, but it's not usually identified as a stand-alone method.

Maybe, yes, it makes sense to draw a diagram of the structure of an "optimisation algorithm", I'll think about it.

For all optimisation algorithms without exception, not only for GA, the order of operators (methods) is always the same

I couldn't figure out why some Moving algorithms have Moving without input, while others have it with input.