Self-organizing feature maps (Kohonen maps) - revisiting the subject

Mykola Demko | 17 May, 2016

Self-organizing feature maps (Kohonen maps) — revisiting the subjectIntroduction

This article is a continuation of a previously published article "Using Self-organizing feature maps (Kohonen maps) in MetaTrader 5". A large proportion of this material was revised and adapted for an easier application in side projects. In general, the article is aimed at helping beginners and experienced programmers to connect a neural network algorithm of Kohonen maps to their projects and to edit the main algorithm themselves. There is only one sample of patterns used in the article, but the emphasis is placed on the variety of examples of using the code.

Theory of Kohonen maps

A Self-Organizing Feature Map (SOM) is a one-layer network where every neuron is connected with all components of n-dimensional input vector (pattern). Input vector (pattern) — is a description of one of the objects subject to clasterization.

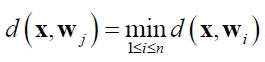

Training without a supervisor is exercised in the SOM. For training purposes competition mechanisms are applied. When sending a pattern network to input, the neuron with a vector that is the least different from the input pattern wins. The following ratio applies for the winner neuron:

where:

- n — amount of neurons,

- j — number of winner neuron,

- d(x,w) — distance between x and w vectors.

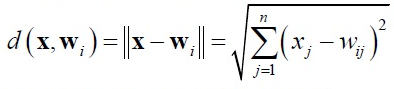

Euclidean space is most frequently used as distance.

In this implementation, the search of the winner neuron is performed in the BestMatchingNode function of the CSOM_Net_Base class.

Neighborhood and radius of learning are formed around the winner neuron. A radius of learning defines which neurons are subject to training at this iteration. It is at its maximum in the beginning of training and is decreased with a growth of training iterations, so that only the winner neuron falls into a radius of learning at the final stage.

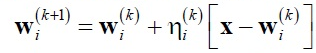

Weights of neurons within the radius of learning are adapted to the Kohonen rule:

where:

- x — input pattern,

- k — number of training iteration,

- ni(k) — training ratio of i-th neuron from the radius of learning in the k-th training iteration.

Weights of neurons outside thed radius of learning are not being adapted. In this implementation, weights are adapted in the AdjustWeights function of the CSOMNode class.

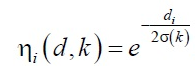

The ni(k) training speed ratio is split into two parts:

- ni (d,k) neighborhood function

- and a(k) training speed function

where A and B are selected constants.

This function is inversely proportional to the training loop number, therefore, in the initial stage, training has a high speed and a long radius, patterns are averaged. At the end of training, weights are finally adjusted to the input parameters.

In general, the adaptation dynamics of a specific neuron can be presented as a descent of gradient:

When training the Kohonen network, a problem of so called "dead neurons" occurs. Neurons that have initial weight coefficients far away from the input patterns can never win a competition despite the length of training. In this implementation, the system problem of the Kohonen training algorithm was solved with two revisions.

First of all, the function of selecting random patterns from a training set was revised. The C_PRNG_UD class is used instead of rand()/N that has no guarantee that all values will appear for N iterations. It guarantees equally distributed random pattern selection. Second of all, weights of neurons are initialized with random values, but only from the range encountered in patterns.

This way, even if we come across neurons that never win a competition — "dead neurons", then, at least, they will stay in the winner's neighborhood and will be subject to training, playing the role as a bridge between patterns during clasterization.

Implementation of Kohonen network with examples of use

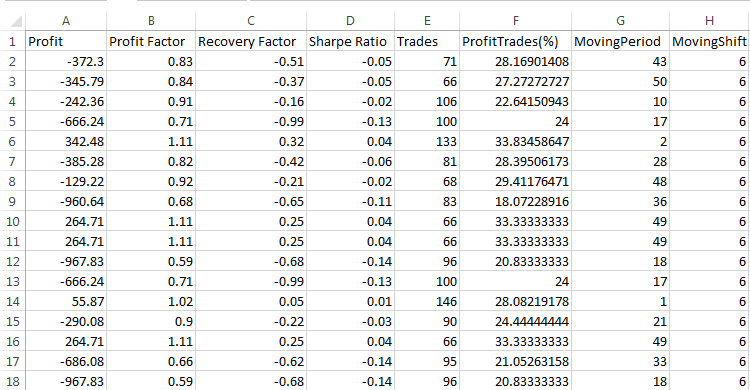

We will now explain a software implementation of Kohonen maps. The actual implementation occurs based on two measures: dimension of patterns (m_dimension) and total of nodes (m_total_nodes). However, visually cards are presented as three-dimensional, where m_total_nodes is folded into the m_xcells * m_ycells rectangle. Therefore, nodes store information about both weights and location.

class CSOMNode { protected: //--- coordinates of node zone int m_x1; int m_y1; int m_x2; int m_y2; //--- coordinates of node center double m_x; double m_y; //--- node weights double m_weights[]; ...

The CSOMNode class is compositionally included into the CSOM_Net_Base basic class in the form of a one-dimensional array of examples of the CSOMNode m_som_nodes[] class. This is the actual Kohonen network. Other functions simply service training and network exploitation.

The previous article emphasized the diversity of pattern samples and demonstrated the graphical diversity of the interface. In this article, only one example of patterns but in five variations of connectivity is considered. I have revised the source code to enable easy connection to side projects. The implementation is broken down into five classes.

class CSOM_Net_Base class CSOM_Net_Data : public CSOM_Net_Base class CSOM_Net_Train : public CSOM_Net_Data class CSOM_Net_Img : public CSOM_Net_Train class CSOM_Net_Demo : public CSOM_Net_Img

The declaration reveals the cascade connection of classes. This way, if there is no demand for some functions in a connected project, it is sufficient not to connect child classes, so all the following functions will be cut off.

Below is the list of public functions:

class CSOM_Net_Base { public: //--- public node of Kohonen network CSOMNode *public_node; //--- function sends the ind set node to the public_node public area void GetNode(int ind){ public_node=m_som_nodes[ind].GetObjPointer(); }; //--- function returns dimension of patterns int GetDimension(){return(m_dimension);}; //--- function for loading network from a file bool DownloadNet(string file_name); //--- function for finding the best node based on a specified vector with a mask int BestMatchingNode(const double &vector[]); //--- function for finding the best node based on a specified vector with cutting to the size of dimension int BestMatchingNode(const double &vector[],int dimension); //--- function for filling a bit mask for searching nodes that are similar to patterns (the mask determines which fields to search) bool InitSetByteMap(int num,bool value); }; class CSOM_Net_Data : public CSOM_Net_Base { public: //--- function for loading data for training from the specified file bool LoadPatternDataFromFile(string filename); //--- function for adding vector to the training set void AddVectorToPatternsSet(double &vector[],string title); //--- function for copying a pattern from the training set bool GetPatterns(int ind_pattern,double &vector[]); //--- function for returning a pattern title from the training set string GetTitlesPatterns(int ind_pattern); //--- function for returning the total of patterns int GetTotalsPatterns(); }; class CSOM_Net_Train : public CSOM_Net_Data { public: //--- function for initializing network, obtaining parameters void InitParameters(int iterations,int xcells,int ycells); //--- function for training network void Train(); //--- function for saving network into file void SaveNet(string file_name); //--- virtual functions (the body is described in the CSOM_Net_Img child) virtual void Render(){}; virtual void ShowBMP(bool back){}; }; class CSOM_Net_Img : public CSOM_Net_Train { public: //--- function for initializing network, obtaining parameters void InitParameters(int iterations,int xcells,int ycells, int bmpwidth,int bmpheight, bool p_HexagonalCell,bool p_ShowBorders,bool p_ShowTitles, int p_ColorScheme,int p_MaxPictures); //--- function for displaying a state of network void Render(); //--- function for displaying bmp image on the chart void ShowBMP(bool back); //--- function for saving a resource in a bmp file void SaveBMP(); //--- deinitialization function, removes BMP image from the chart void NetDeinit(); }; class CSOM_Net_Demonstration : public CSOM_Net_Img { public: //--- function - example of using a child to increase opportunities void ShowTrainPatterns(); };

The attached files contain five examples with options of using Kohonen maps. The examples are numbered in order of the class cascade. We will be analyzing examples one by one.

Network creation, basic class

Sample1_SOM_Net_Base shows how a trained network is loaded from the binary file indicated in the parameter (without extension):

input string SOM_Net="SOM\\SOM_Net";

The somnet extension is automatically inserted. This helps to prevent confusion so a file unsuitable for algorithm recognition is not loaded.

Network loading occurs in the DownloadNet(string file_name) function. Following a detailed examination of the function's body, the format of the somnet file becomes clear. The header consisting of three memory cells of the double type (like the rest of array obtained from the file) is written in the beginning of the file. The first field stores the dimension of patterns plus six. Six fields are needed for storing the node coordinates. But since six is a constant value, we can easily obtain the pattern dimension by deducting six from the first field of the array header. The second and third fields store variables m_xcells and m_ycells — sizes by X and Y respectively in the visual presentation of network. By multiplying them you can obtain the number of nodes in the network.

This is followed by obtaining network data vector by vector, until the entire network is loaded.

Let's proceed with the example. After the network is loaded, the vector[] pattern is filled with constants. Initialization with constants is provided as an example. The BestMatchingNode function finds the index of the most similar node. Then the node is sent to the public part for a safe access to the functions of the CSOMNode class.

We will focus more on the BestMatchingNode function. It has three ways of application. When calling the BestMatchingNode(const double &vector[]) overload without mask initialization, the search through all vector fields will be performed, because the function will initialize the mask with units itself, i.e. it will allow the search through all fields. If the initialization of the InitSetByteMap(int num,bool value) mask is called for each field in advance, then filtering by which field to search or not to search will switch on. The ability to search for a node based on incomplete information is achieved this way. If another BestMatchingNode(const double &vector[],int dimension) overload is used, then filtering will be in order up to the field under the number sent to the function by the dimension parameter.

Loading pattern data

The second sample Sample2_SOM_Net_Data shows a connection of the following functionality extension — the CSOM_Net_Data class. The class is declared as a child of CSOM_Net_Base, therefore it has all functions that a child has, plus its own functions. The class is created as an intro to the parser functionality for loading patterns. Patterns obtained with a parser can be further used for both loading training patterns and patterns for recognition.

A name of the file with patterns is set by the parameter:

input string DataFileName="SOM\\optim.csv";

Please bear in mind — the file name is indicated with extension, i.e. it is a full name of the file. The parser file is opened as a file with the FILE_CSV flag.

Then, the same functions as in the previous example are called: network loading, file loading, file parsing, recognition in a pattern loop and printing.

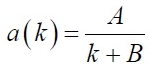

We should consider here a file format with patterns that the parser is set on.

The first line of the file should be filled with names of Title columns. This is an obligatory condition. If the file's header isn't filled, then the parser will cut the upper sample from the first line and use it to create column names.

Columns in the file are used for storing pattern data of the same depth. Accordingly, rows store separate patterns. In other words, vectors are located horizontally, and the same fields of all vectors — vertically.

The parser places pattern data in the built-in array m_patterns_sets_array and recognizes headers of fields, stores them in the m_som_titles array (declared in the descendant class), and also fills the m_patterns_titles array with row numbers. This way, there are no issues with finding a required pattern by its row in the file.

Network training

The third sample Sample3_SOM_Net_Train is the most fascinating. First, it has CSOM_Net_Train connected, which is the second basic class and the class for training Kohonen maps. Second, it is the last class sufficient for automated training without visualization, the subsequent classes only connect the graphical shell. So, everything described in the "Theory of Kohonen maps" section is implemented in the first three classes. Fourth, the class has an inheritance fork.

For subsequent classes, the functions of calculation and drawing a bmp image that displays training stages must be called in the network training function Train(). But since the third sample has no charts, bodies of these functions are not required here. In order to resolve this conflict, Render() and ShowBMP(bool back) functions are declared as virtual, have empty bodies, and the required code is defined in the CSOM_Net_Img child.

Now, we will move directly to training. The parameters should be sent to the network before training. For such purpose, there is a service function InitParameters(int iterations,int xcells,int ycells) where the parameters are transferred to: number of training iterations — iterations and the network size divided into two parameters — xcells, ycells (size by X and Y respectively).

The training loop is arranged in the Train() function. It is required to initialize network before calling the training loop. It implies to initialize the class of equally distributed figures, to define the array sizes, to calculate maximums and minimums using the pattern columns, to initialize nodes with random data from the set range.

The actual training iteration is specifically turned into a separate function TrainIterations(int &p_iter) for a better understanding of the code and easer access when finalizing. This implementation is written so other programmers could understand the essence, and make their own amendments, if necessary. The function contains the algorithm described in the section "Theory of Kohonen maps", hence there is no need to explain it once again.

In general, the third sample shows the consistency of connecting network from the CSOM_Net_Train class: loading a file with training patterns, sending parameters, calling a training, saving network in a file.

When describing the function of reading the network file, it was mentioned above that the functions of saving and reading should be simultaneously considered in order to understand the format.

void CSOM_Net_Train::SaveNet(string file_name) bool CSOM_Net_Base::DownloadNet(string file_name)

The double type prevails among the network data. Therefore, the network is saved into a one-dimensional array of the double type. The rest of data is converted to this type.

First, the header is saved in the network. These are five first double fields where necessary data about the network are saved.

- 6+dimension_node — length of pattern plus 6 (six additional fields in the node are required for storing coordinates).

- m_xcells — number of nodes horizontally.

- m_ycells — number of nodes vertically.

- m_xsize — bmp size horizontally.

- m_ysize — bmp size vertically.

Then, copying into the node network is performed. First, 6 datums about node coordinates, then — node weights. All data is copied this way node by node.

Towards the end, the line with Titles enumeration that is binary transfered to the double format is added to the network.

So, this is what we get. We know the pattern size and the number of nodes from the header. This data can be used to calculate where node data begins and ends. Titles networks are stored from the end point of nodes up to the end of the file. They are placed at the end of the file because it is impossible to predict the size of memory required.

Graphical shell

The fourth sample Sample4_SOM_Net_Img reveals the connection of a graphical shell. In the previous article, it was necessary to connect to the graphic library cintbmp.mqh written by Dmitry Fedoseev in order to call it. But I had to revise it to remove all WinAPI DLL calls. This will enable the use of code in the Market. The updated file contains information about the author, but I have changed the name to cintbmp2.mqh.

Only the functions of saving files into the Image directory, used for loading bmp files, were changed in the code. Now files are recorded only for saving, but not for displaying. For displaying purposes they are instantly loaded into resources which helps to avoid calling DLL libraries.

The sequence of calling functions is similar to the previous sample. Although now since graphical shell is connected, additional parameters of settings are required for its operation. Therefore, the initialization function declared in the previous child class was restarted to fit new parameters for graphical shell.

void InitParameters(int iterations,int xcells,int ycells, int bmpwidth,int bmpheight, bool p_HexagonalCell,bool p_ShowBorders,bool p_ShowTitles, int p_ColorScheme,int p_MaxPictures);

Parameters are passed through the function interface to prevent globally declared variables in the class code. Otherwise, it would complicate the process of connecting to side projects, where names of variables may differ.

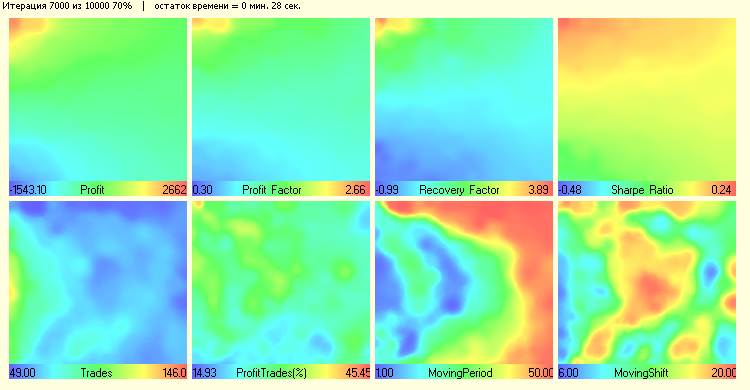

This is followed by calling the training function Train() from the sample of the CSOM_Net_Train class. But since there is a code to operate with charts in the bodies of functions Render() and ShowBMP(bool back), it leads to displaying the process of training on every one hundredth of iteration. After exiting from Train(), these functions are called to display the last changes.

Expanding functionality

The fifth sample Sample5_SOM_Net_Player displays the example of extending classes. It is not considered basic for the network, but it shows how easy an existing code can be reviewed. For this purpose, it is sufficient to declare the class as a child of one out of the basic classes.

Why should the child class be written? To make all functions of all classes hidden by the protected command that our class is inherited from available. For example, when connecting as a child of the CSOM_Net_Img class, we will get access to all functions and data declared as protected and public of all previous classes. This way, the program can be configured as you wish, depending on what needs to be obtained.

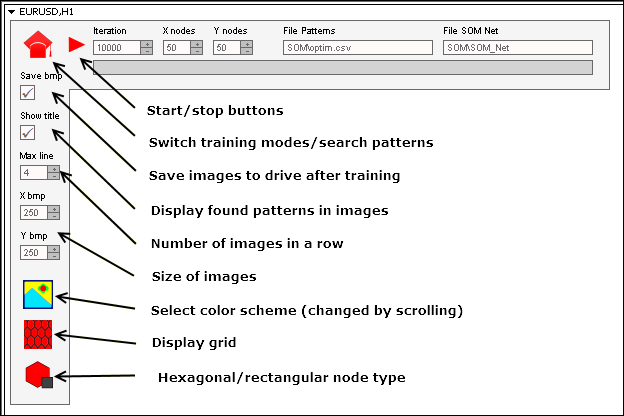

So, the extension of the CSOM_Net_ Player class is a graphic panel for controlling the Kohonen network. In order to write it, I have connected the IncGUI_v3.mqh file with the graphic library of Dmitry Fedoseev, after it was slightly modified. The improvement affected the display of graphic labels (not on the side, but on top of the objects) and color of labels. I haven't made any changes to the original file, I declared the library classes' inheritances instead.

Even though the creation of a graphic panel takes up most of the code, it doesn't pose any difficulties. It is a regular routine work for stamping the code. I want to elaborate further on coordination between the graphic panel with Kohonen neural network and the graphical shell.

First, it must be pointed out that the process of launching the network comprises two parts. The first part performs training and stores the trained network into the binary file somnet. The second part loads network from the file and searches for suitable nodes based on loaded patterns. Both parts are independent, although they use the same fields of displaying data: input field of a path towards the file with patterns, input field of a path towards the file with neural network.

However, modes are truly independent. And patterns for training are not obligatory taken from the same file as patterns for recognition. The same applies for the neural network. You can train network in one file, and then switch the mode into "operational" and load other network for recognition.

Important: switching modes resets the mode into a zero state.

So, modes are independent. But how was a quick response of the program for changing the state of buttons achieved? The problem is that the program doesn't use the loop for training or searching patterns. It is arranged based on events.

When the program enters the training mode, it initializes, loads parameters, prepares memory, and the first iteration, and then sends itself a user event under number 333. Entry to the event handler is filtered by clicking the mouse on the object, ending the object editing and the user event 333.

This way, if there was no interruption (if the state of "Start" and "Training" buttons hasn't changed, and we still have access for training entry), new training iteration occurs, and a new message 333 is sent. And so on, until the network is trained and it stops the loop, or a user uses buttons to stop the training.

The same interruption scheme is implemented in the mode of pattern search.

I have tried to make the panel interface as clear as possible. Mostly it repeats input variables from the previous samples. We will still show values of some buttons and input fields:

To launch the panel it is required to:

- Sort out files from the zip archive by directories.

- Files with patterns — to the directory MQL5\Files\SOM\.

- Files with bmp images of buttons — to the directory MQL5\Images\SOM\.

- Files with programs — to the directory MQL5\Projects\SOM\.

- Compile all mq5 files.

Conclusion

Forty years ago neural networks were at a leading edge of a scientific thought. About twenty years ago someone who was familiar with neural network algorithms was considered to be a unique specialist. Now, the term "neural network" doesn't seem to scare anybody. Algorithms of fuzzy logic, neural networks are now firmly ensconced into trading and, apparently, are not so complicated. I hope that this article will help you shed some light on Kohonen maps and work them into rotation.